At AWS, our sales teams create customer-focused documents called account plans to deeply understand each AWS customer’s unique goals and challenges, helping account teams provide tailored guidance and support that accelerates customer success on AWS. As our business has expanded, the account planning process has become more intricate, requiring detailed analysis, reviews, and cross-team alignment to deliver meaningful value to customers. This complexity, combined with the manual review effort involved, has led to significant operational overhead. To address this challenge, we launched Account Plan Pulse in January 2025, a generative AI tool designed to streamline and enhance the account planning process. Implementing Pulse delivered a 37% improvement in plan quality year-over-year, while decreasing the overall time to complete, review, and approve plans by 52%.

In this post, we share how we built Pulse using Amazon Bedrock to reduce review time and provide actionable account plan summaries for ease of collaboration and consumption, helping AWS sales teams better serve our customers. Amazon Bedrock is a comprehensive, secure, and flexible service for building generative AI applications and agents. It connects you to leading foundation models (FMs), services to deploy and operate agents, and tools for fine-tuning, safeguarding, and optimizing models, along with knowledge bases to connect applications to your latest data so that you have everything you need to quickly move from experimentation to real-world deployment.

Challenges with increasing scale and complexity

As AWS continued to grow and evolve, our account planning processes needed to adapt to meet increasing scale and complexity. Before enterprise-ready large language models (LLMs) became available through Amazon Bedrock, we explored rule-based document processing to evaluate account plans, which proved inadequate for handling nuanced content and growing document volumes. By 2024, three critical challenges had emerged:

- Disparate plan quality and format – With teams operating across numerous AWS Regions and serving customers in diverse industries, account plans naturally developed variations in structure, detail, and format. This inconsistency made it difficult to make sure critical customer needs were described effectively and consistently. Additionally, the evaluation of account plan quality was inherently subjective, relying heavily on human judgment to assess each plan’s depth, strategic alignment, and customer focus.

- Resource-intensive review process – The quality assessment process relied on manual reviews by sales leadership. Though thorough, these reviews consumed valuable time that could otherwise be devoted to strategic customer engagements. As our business scaled, this approach created bottlenecks in plan approval and implementation.

- Knowledge silos – We identified untapped potential for cross-team collaboration. Developing methods to extract and share knowledge would transform individual account plans into collective best practices to better serve our customers.

Solution overview

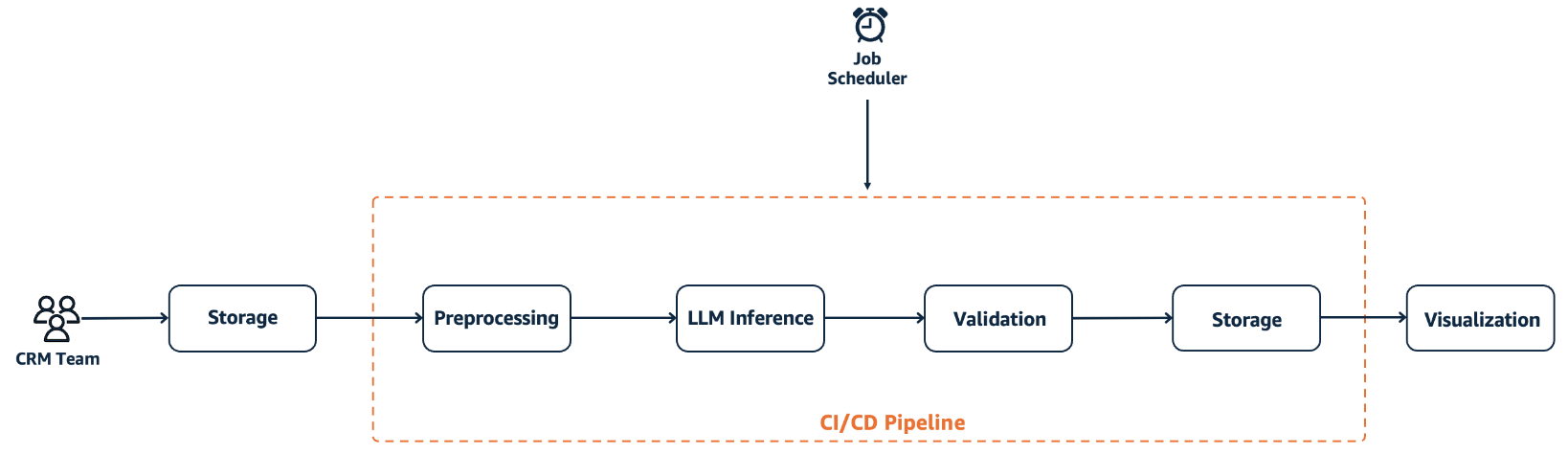

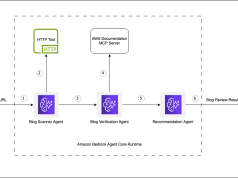

To address these challenges, we designed Pulse, a generative AI solution that uses Amazon Bedrock to analyze and improve account plans. The following diagram illustrates the solution workflow.

The workflow consists of the following steps:

- Account plan narrative content is pulled from our CRM system on a scheduled basis through an asynchronous batch processing pipeline.

- The data flows through a series of processing stages:

  - Preprocessing to structure and normalize the data and generate metadata.

  - LLM inference to analyze content and generate insights.

  - Validation to confirm quality and compliance.

- Results are stored securely for reporting and dashboard visualization.

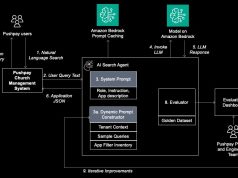

We’ve integrated Pulse directly with existing sales workflows to maximize user adoption and have established feedback loops that continuously refine performance. The following diagram shows the solution architecture.

In the following sections, we explore the key components of the solution in more detail.

Ingestion

We implement a batch processing pipeline that extracts account plans from our CRM system into Amazon Simple Storage Service (Amazon S3) buckets. A scheduler triggers this pipeline on a regular cadence, facilitating continuous analysis of the most current information.

Preprocessing

Because account plans change frequently, we process them in daily snapshots and include only updated plans in each run. Preprocessing happens at two layers: an extract, transform, and load (ETL) layer that organizes the files to be processed, and an input validation step that runs just before each model call. A daily scheduled job reads account plan data stored as Parquet files in Amazon S3, extracts text content from HTML fields, and generates structured metadata for each document. To avoid multiple runs on the same content, the system compares document timestamps (each plan’s last modified date) and processes only recently modified plans, which significantly reduces computational overhead and cost. The processed text and metadata are then transformed into a standardized format and stored back to Amazon S3 as Parquet files, creating a clean dataset ready for LLM analysis.
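The incremental filtering and HTML text extraction described above can be sketched roughly as follows. This is a minimal illustration using only the Python standard library, not the Pulse implementation. The record fields (`plan_id`, `narrative_html`, `last_modified`), the `word_count` metadata, and the function names are all hypothetical, and reading and writing Parquet files in Amazon S3 is left out.

```python
from datetime import datetime, timezone
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects the text nodes of an HTML fragment and ignores the tags."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        stripped = data.strip()
        if stripped:
            self.parts.append(stripped)


def html_to_text(html):
    """Return the visible text of an HTML field as one space-joined string."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def select_modified_plans(plans, last_run):
    """Keep only plans modified after the previous run and attach metadata.

    `plans` is a list of dicts with hypothetical keys `plan_id`,
    `narrative_html`, and `last_modified` (a timezone-aware datetime).
    """
    selected = []
    for plan in plans:
        if plan["last_modified"] <= last_run:
            continue  # unchanged since the previous snapshot, so skip it
        text = html_to_text(plan["narrative_html"])
        selected.append(
            {
                "plan_id": plan["plan_id"],
                "text": text,
                "word_count": len(text.split()),  # illustrative metadata only
                "last_modified": plan["last_modified"].isoformat(),
            }
        )
    return selected
```

Called with the timestamp of the previous run, `select_modified_plans` returns only the plans that changed since then, which is what keeps repeated model calls on unchanged content out of the daily job.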

Analysis with Amazon Bedrock

The core of our solution uses Amazon Bedrock, which provides a variety of model choices and control, data customization, safety and guardrails, cost optimization, and orchestration. We use Amazon Bedrock FMs to perform two key functions:

- Account plan evaluation – Pulse evaluates plans against 10 business-critical categories, creating a standardized Account Plan Readiness Index. This automated evaluation identifies improvement areas and provides specific recommendations for each.

- Actionable insights – Amazon Bedrock extracts and synthesizes patterns across plans, identifying customer strategic focus and market trends that might otherwise remain isolated in individual documents.

We implement these capabilities through asynchronous batch processing, where evaluation and summarization workloads operate independently. The evaluation process runs each account through 27 specific questions with tailored control prompts, and the summarization process generates topical overviews for straightforward consumption and knowledge sharing.

For this implementation, we use structured output prompting with schema constraints to provide consistent formatting that integrates with our reporting tools.
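As an illustration of structured output prompting, the sketch below embeds a JSON Schema in the prompt and parses the model reply as JSON. It assumes the Amazon Bedrock Converse API through a `boto3.client("bedrock-runtime")` client that the caller passes in. The schema, the prompt wording, the 1 to 5 score scale, and the function names are hypothetical and are not taken from Pulse.

```python
import json

# Hypothetical schema for one evaluation answer; the actual Pulse schema is not published.
EVALUATION_SCHEMA = {
    "type": "object",
    "properties": {
        "question_id": {"type": "string"},
        "score": {"type": "integer", "minimum": 1, "maximum": 5},
        "rationale": {"type": "string"},
    },
    "required": ["question_id", "score", "rationale"],
}


def build_prompt(question_id, question, plan_text):
    """Build a prompt that asks for a JSON object conforming to the schema."""
    return (
        "You are reviewing an account plan.\n"
        f"Question ({question_id}): {question}\n\n"
        f"<account_plan>\n{plan_text}\n</account_plan>\n\n"
        "Respond with a single JSON object and nothing else. "
        "It must conform to this JSON Schema:\n"
        f"{json.dumps(EVALUATION_SCHEMA, indent=2)}"
    )


def evaluate_question(client, model_id, question_id, question, plan_text):
    """Send one evaluation question through the Bedrock Converse API.

    `client` is expected to be boto3.client("bedrock-runtime"); it is passed
    in so that the function can be exercised with a stub in tests.
    """
    prompt = build_prompt(question_id, question, plan_text)
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"temperature": 0.0, "maxTokens": 512},
    )
    raw = response["output"]["message"]["content"][0]["text"]
    # json.loads raises ValueError if the model did not return pure JSON;
    # callers should treat that as a failed output validation.
    return json.loads(raw)
```

Keeping the schema in one place means the same definition can drive both the prompt and any downstream checks on the parsed result.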

Validation

Our validation framework includes the following components:

- Input and output validation, both of which address risks described in the OWASP Top 10 for Large Language Model Applications. Input validation applies the necessary guardrails and prompt validation before each model call, and output validation makes sure the results are structured and constrained to expected responses.

- Automated quality and compliance checks against established business rules.

- Additional review for outputs that don’t meet quality thresholds.

- A feedback mechanism that improves system accuracy over time.
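The input and output checks in the list above could look something like the following sketch. It assumes each model answer is a parsed JSON object with `question_id`, `score`, and `rationale` fields. These field names, the 1 to 5 score range, and the size limit are hypothetical placeholders, not the actual Pulse rules.

```python
REQUIRED_KEYS = {"question_id", "score", "rationale"}  # hypothetical output fields


def validate_input(plan_text, max_chars=50_000):
    """Return a list of problems found before the model call (empty means OK)."""
    problems = []
    if not plan_text.strip():
        problems.append("empty plan text")
    if len(plan_text) > max_chars:
        problems.append("plan text exceeds size limit")
    return problems


def validate_output(result, expected_question_id, min_score=1, max_score=5):
    """Return a list of problems found in a parsed model answer (empty means OK)."""
    if not isinstance(result, dict):
        return ["output is not a JSON object"]
    problems = []
    missing = REQUIRED_KEYS - result.keys()
    extra = result.keys() - REQUIRED_KEYS
    if missing:
        problems.append(f"missing keys: {sorted(missing)}")
    if extra:
        problems.append(f"unexpected keys: {sorted(extra)}")
    if result.get("question_id") != expected_question_id:
        problems.append("question_id does not match the question asked")
    score = result.get("score")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(score, bool) or not isinstance(score, int) or not min_score <= score <= max_score:
        problems.append("score missing or outside expected range")
    rationale = result.get("rationale")
    if not isinstance(rationale, str) or not rationale.strip():
        problems.append("rationale missing or empty")
    return problems


def route(result, expected_question_id):
    """Send outputs that fail validation to additional review."""
    return "needs_review" if validate_output(result, expected_question_id) else "accepted"
```

Returning a list of problems, instead of a single pass or fail flag, gives reviewers the reason an output was held back.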

Storage and visualization

The solution includes the following storage and visualization components:

- Amazon S3 provides secure storage for all processed account plans and insights.

- A daily run cadence refreshes insights and enables progress tracking.

- Interactive dashboards offer both executive summaries and detailed plan views.

Engineering for production: Building reliable AI evaluations

When transitioning Pulse from prototype to production, we implemented a robust engineering framework to address three critical AI-specific challenges. First, the non-deterministic nature of LLMs meant identical inputs could produce varying outputs, potentially compromising evaluation consistency. Second, account plans naturally evolve throughout the year with customer relationships, making static evaluation methods insufficient. Third, different AWS teams prioritize different aspects of account plans based on specific customer industry and business needs, requiring flexible evaluation criteria.

To maintain evaluation reliability, we developed a statistical framework using Coefficient of Variation (CoV) analysis across multiple model runs on account plan inputs. The goal is to use the CoV as a correction factor to address the data dispersion, which we achieved by calculating the overall CoV at the evaluated question level. With this approach, we can scientifically measure and stabilize output variability, establish clear thresholds for selective manual reviews, and detect performance shifts requiring recalibration. Account plans falling within confidence thresholds proceed automatically in the system, and those outside established thresholds are flagged for manual review.

We complemented this with a dynamic threshold weighting system that aligns evaluations with organizational priorities by assigning different weights to criteria based on business impact. This customizes thresholds across different account types; for example, applying different evaluation parameters to enterprise accounts versus mid-market accounts. These business thresholds undergo periodic review with sales leadership and adjustment based on feedback, so our AI evaluations remain relevant while maintaining quality and saving valuable time.
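To make the CoV idea concrete, the following sketch computes the coefficient of variation (standard deviation divided by mean) of the scores that repeated model runs produce for each question, and flags a plan for manual review when any question exceeds a threshold. The threshold values, the account type keys, and the choice of the population standard deviation are assumptions made for illustration. The sketch covers only measurement and routing, not how Pulse applies the CoV as a correction factor or weights individual criteria.

```python
from statistics import mean, pstdev

# Hypothetical CoV thresholds per account type; real values would come from
# periodic review with sales leadership.
COV_THRESHOLDS = {"enterprise": 0.10, "mid_market": 0.15}


def coefficient_of_variation(scores):
    """Return stdev / mean for the scores from repeated runs of one question."""
    average = mean(scores)
    if average == 0:
        raise ValueError("CoV is undefined when the mean score is zero")
    # Population standard deviation is an assumption; a sample estimate
    # (statistics.stdev) would be an equally reasonable choice.
    return pstdev(scores) / average


def review_decision(runs_by_question, account_type):
    """Decide whether a plan proceeds automatically or needs manual review.

    `runs_by_question` maps a question id to the list of scores obtained
    from repeated model runs on the same account plan.
    """
    threshold = COV_THRESHOLDS[account_type]
    covs = {
        question: coefficient_of_variation(scores)
        for question, scores in runs_by_question.items()
    }
    flagged = sorted(q for q, value in covs.items() if value > threshold)
    return {
        "cov": covs,
        "flagged": flagged,
        "route": "manual_review" if flagged else "auto",
    }
```

For example, three runs scoring 4, 4, and 4 on a question give a CoV of 0, whereas scores of 2, 4, and 3 give a CoV of about 0.27, which would exceed either of the illustrative thresholds and send the plan to manual review.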

Conclusion

In this post, we shared how Pulse, powered by Amazon Bedrock, has transformed the account planning process for AWS sales teams. Through automated reviews and structured validation, Pulse streamlines quality assessments and breaks down knowledge silos by surfacing actionable customer intelligence across our global organization. This helps our sales teams spend less time on reviews and more time making data-driven decisions for strategic customer engagements.

Looking ahead, we’re excited to enhance Pulse’s capabilities to measure account plan execution by connecting strategic planning with sales activities and customer outcomes. By analyzing account plan narratives, we aim to identify and act on new opportunities, creating deeper insights into how strategic planning drives customer success on AWS.

We plan to keep adopting new Amazon Bedrock capabilities to make our processes more robust. By building flows to orchestrate our workflows, using Amazon Bedrock Guardrails, introducing agentic frameworks, and adopting Strands Agents and Amazon Bedrock AgentCore, we can make the pipeline more dynamic in the future.

To learn more about Amazon Bedrock, refer to the Amazon Bedrock User Guide, Amazon Bedrock Workshop: AWS Code Samples, AWS Workshops, and Using generative AI on AWS for diverse content types. For the latest news on AWS, see What’s New with AWS?

About the authors

Karnika Sharma is a Senior Product Manager in the AWS Sales, Marketing, and Global Services (SMGS) org, where she works on empowering the global sales organization to accelerate customer growth with AWS. She’s passionate about bridging machine learning and AI innovation with real-world impact, building solutions that serve both business goals and broader societal needs. Outside of work, she finds joy in plein air sketching, biking, board games, and traveling.

Dayo Oguntoyinbo is a Sr. Data Scientist with the AWS Sales, Marketing, and Global Services (SMGS) Organization. He helps both AWS internal teams and external customers take advantage of the power of AI/ML technologies and solutions. Dayo brings over 12 years of cross-industry experience. He specializes in reproducible and full-lifecycle AI/ML, including generative AI solutions, with a focus on delivering measurable business impact. He has an MSc (Tech) in Communication Engineering. Dayo is passionate about advancing generative AI/ML technologies to drive real-world impact.

Mihir Gadgil is a Senior Data Engineer in the AWS Sales, Marketing, and Global Services (SMGS) org, specializing in enterprise-scale data solutions and generative AI applications. With 9+ years of experience and a Master’s in Information Technology & Management, he focuses on building robust data pipelines, complex data modeling, and ETL/ELT processes. His expertise drives business transformation through innovative data engineering solutions and advanced analytics capabilities.

Carlos Chinchilla is a Solutions Architect at Amazon Web Services (AWS), where he works with customers across EMEA to implement AI and machine learning solutions. With a background in telecommunications engineering from the Technical University of Madrid, he focuses on building AI-powered applications using both open source frameworks and AWS services. His work includes developing AI assistants, machine learning pipelines, and helping organizations use cloud technologies for innovation.

Sofian Hamiti is a technology leader with over 10 years of experience building AI solutions and leading high-performing teams to maximize customer outcomes. He is passionate about empowering diverse talent to drive global impact and achieve their career aspirations.

Sujit Narapareddy, Head of Data & Analytics at AWS Global Sales, is a technology leader driving global enterprise transformation. He leads data product and platform teams that power AWS’s Go-to-Market through AI-augmented analytics and intelligent automation. With a proven track record in enterprise solutions, he has transformed sales productivity, data governance, and operational excellence. Previously at JPMorgan Chase Business Banking, he shaped next-generation FinTech capabilities through data innovation.
