Organizations face significant challenges in making their recruitment processes more efficient while maintaining fair hiring practices. Using AI in recruitment and talent acquisition can help organizations address these challenges. AWS offers a suite of AI services that can be used to significantly enhance the efficiency, effectiveness, and fairness of hiring practices. With AWS AI services, specifically Amazon Bedrock, you can build an efficient and scalable recruitment system that streamlines hiring processes, helping human reviewers focus on the interview and assessment of candidates.

In this post, we show how to create an AI-powered recruitment system using Amazon Bedrock, Amazon Bedrock Knowledge Bases, AWS Lambda, and other AWS services to enhance job description creation, candidate communication, and interview preparation while maintaining human oversight.

The AI-powered recruitment lifecycle

The recruitment process presents numerous opportunities for AI enhancement through specialized agents, each powered by Amazon Bedrock and connected to dedicated Amazon Bedrock knowledge bases. Let’s explore how these agents work together across key stages of the recruitment lifecycle.

Job description creation and optimization

Creating inclusive and attractive job descriptions is crucial for attracting diverse talent pools. The Job Description Creation and Optimization Agent uses advanced language models available in Amazon Bedrock and connects to an Amazon Bedrock knowledge base containing your organization’s historical job descriptions and inclusion guidelines.

Deploy the Job Description Agent with a secure Amazon Virtual Private Cloud (Amazon VPC) configuration and AWS Identity and Access Management (IAM) roles. The agent references your knowledge base to optimize job postings while maintaining compliance with organizational standards and inclusive language requirements.

Candidate communication management

The Candidate Communication Agent manages candidate interactions through the following components:

- Lambda functions that trigger communications based on workflow stages

- Amazon Simple Notification Service (Amazon SNS) for secure email and text delivery

- Integration with approval workflows for regulated communications

- Automated status updates based on candidate progression

Configure the Communication Agent with proper VPC endpoints and encryption for all data in transit and at rest. Use Amazon CloudWatch monitoring to track communication effectiveness and response rates.

Interview preparation and feedback

The Interview Prep Agent supports the interview process by:

- Accessing a knowledge base containing interview questions, SOPs, and best practices

- Generating contextual interview materials based on role requirements

- Analyzing interviewer feedback and notes using Amazon Bedrock to identify key sentiments and consistent themes across evaluations

- Maintaining compliance with interview standards stored in the knowledge base

Although the agent provides interview structure and guidance, interviewers maintain full control over the conversation and evaluation process.

Solution overview

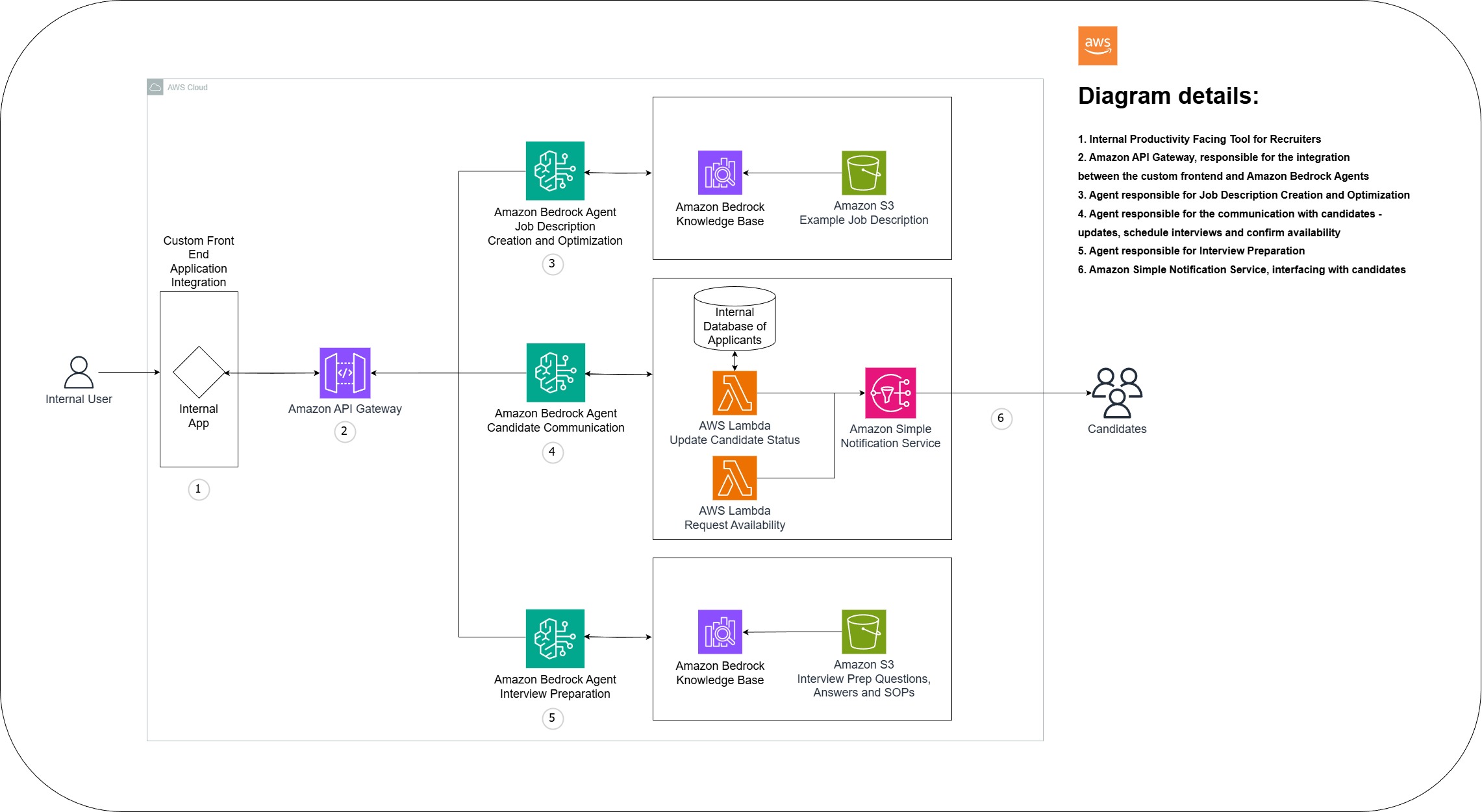

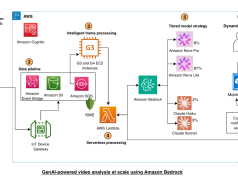

The architecture brings together the recruitment agents and AWS services into a comprehensive recruitment system that enhances and streamlines the hiring process.The following diagram shows how three specialized AI agents work together to manage different aspects of the recruitment process, from job posting creation through summarizing interview feedback. Each agent uses Amazon Bedrock and connects to dedicated Amazon Bedrock knowledge bases while maintaining security and compliance requirements.

The solution consists of three main components working together to improve the recruitment process:

- Job Description Creation and Optimization Agent – The Job Description Creation and Optimization Agent uses the AI capabilities of Amazon Bedrock to create and refine job postings, connecting directly to an Amazon Bedrock knowledge base that contains example descriptions and best practices for inclusive language.

- Candidate Communication Agent – For candidate communications, the dedicated agent streamlines interactions through an automated system. It uses Lambda functions to manage communication workflows and Amazon SNS for reliable message delivery. The agent maintains direct connections with candidates while making sure communications follow approved templates and procedures.

- Interview Prep Agent – The Interview Prep Agent serves as a comprehensive resource for interviewers, providing guidance on interview formats and questions while helping structure, summarize, and analyze feedback. It maintains access to a detailed knowledge base of interview standards and uses the natural language processing capabilities of Amazon Bedrock to analyze interview feedback patterns and themes, helping maintain consistent evaluation practices across hiring teams.

Prerequisites

Before implementing this AI-powered recruitment system, make sure you have the following:

- AWS account and access:

  - An AWS account with administrator access

  - Access to Amazon Bedrock foundation models (FMs)

  - Permissions to create and manage IAM roles and policies

- AWS services required:

  - Amazon API Gateway

  - Amazon Bedrock with access to FMs

  - Amazon Bedrock Knowledge Bases

  - Amazon CloudWatch

  - AWS Key Management Service (AWS KMS)

  - AWS Lambda

  - Amazon SNS

  - Amazon Simple Storage Service (Amazon S3) for knowledge base storage

  - Amazon VPC

- Technical requirements:

  - Basic knowledge of Python 3.9 or later (for Lambda functions)

  - Network access to configure VPC endpoints

- Security and compliance:

  - Understanding of AWS security best practices

  - SSL/TLS certificates for secure communications

  - Compliance approval from your organization’s security team

In the following sections, we examine the key components that make up our AI-powered recruitment system. Each piece plays a crucial role in creating a secure, scalable, and effective solution. We start with the infrastructure definition and work our way through the deployment, knowledge base integration, core AI agents, and testing tools.

Infrastructure as code

The following AWS CloudFormation template defines the complete AWS infrastructure, including VPC configuration, security groups, Lambda functions, API Gateway, and knowledge bases. It facilitates secure, scalable deployment with proper IAM roles and encryption.
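As a minimal sketch of part of such a template, the following Python function builds a CloudFormation template fragment as a dictionary: a VPC, a security group for the agent Lambda functions, a customer managed KMS key with rotation enabled, and an SNS topic encrypted with that key. The logical IDs and the default CIDR range are hypothetical, and a complete template would also need subnets, VPC endpoints, IAM roles, Lambda functions, and API Gateway resources.

```python
import json


def build_template(vpc_cidr: str = "10.0.0.0/16") -> dict:
    """Return a minimal CloudFormation template fragment as a Python dict.

    Logical IDs (RecruitmentVpc, AgentSecurityGroup, ...) are hypothetical.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Sketch of the recruitment system network and encryption layer",
        "Resources": {
            "RecruitmentVpc": {
                "Type": "AWS::EC2::VPC",
                "Properties": {
                    "CidrBlock": vpc_cidr,
                    # Both DNS settings are needed for private DNS on interface VPC endpoints
                    "EnableDnsSupport": True,
                    "EnableDnsHostnames": True,
                },
            },
            "AgentSecurityGroup": {
                "Type": "AWS::EC2::SecurityGroup",
                "Properties": {
                    "GroupDescription": "Lambda functions for the recruitment agents",
                    "VpcId": {"Ref": "RecruitmentVpc"},
                },
            },
            "RecruitmentKey": {
                "Type": "AWS::KMS::Key",
                "Properties": {
                    "EnableKeyRotation": True,
                    # Lets IAM policies in this account grant access to the key
                    "KeyPolicy": {
                        "Version": "2012-10-17",
                        "Statement": [{
                            "Sid": "AllowAccountAdministration",
                            "Effect": "Allow",
                            "Principal": {"AWS": {"Fn::Sub": "arn:${AWS::Partition}:iam::${AWS::AccountId}:root"}},
                            "Action": "kms:*",
                            "Resource": "*",
                        }],
                    },
                },
            },
            "CandidateTopic": {
                "Type": "AWS::SNS::Topic",
                # Encrypt candidate messages at rest with the customer managed key
                "Properties": {"KmsMasterKeyId": {"Ref": "RecruitmentKey"}},
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_template(), indent=2))
```

Generating the template from code like this is one option; writing the same resources directly in YAML or JSON works equally well.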

Deployment automation

The following automation script handles deployment of the recruitment system infrastructure and Lambda functions. It manages CloudFormation stack creation and updates and Lambda function code updates, making system deployment and updates streamlined and consistent.
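A minimal sketch of such a script, assuming boto3 clients are passed in: `deploy_stack` creates the stack if `describe_stacks` reports that it doesn't exist and updates it otherwise, treating CloudFormation's "No updates are to be performed" error as a no-op. `update_function` pushes a new zip archive with `update_function_code` and waits for the update to finish. The `CAPABILITY_NAMED_IAM` capability assumes the template creates named IAM roles; stack and function names are your own.

```python
import io
import zipfile


def deploy_stack(cfn, stack_name: str, template_body: str) -> str:
    """Create or update a stack; returns "created", "updated", or "unchanged"."""
    kwargs = {
        "StackName": stack_name,
        "TemplateBody": template_body,
        "Capabilities": ["CAPABILITY_NAMED_IAM"],  # the template creates IAM roles
    }
    try:
        cfn.describe_stacks(StackName=stack_name)
    except cfn.exceptions.ClientError as err:
        if "does not exist" not in str(err):
            raise  # throttling, access denied, and so on
        cfn.create_stack(**kwargs)
        cfn.get_waiter("stack_create_complete").wait(StackName=stack_name)
        return "created"
    try:
        cfn.update_stack(**kwargs)
    except cfn.exceptions.ClientError as err:
        if "No updates are to be performed" in str(err):
            return "unchanged"
        raise
    cfn.get_waiter("stack_update_complete").wait(StackName=stack_name)
    return "updated"


def zip_source(filename: str, source: str) -> bytes:
    """Package a single-file Lambda handler into an in-memory zip archive."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(filename, source)
    return buffer.getvalue()


def update_function(lambda_client, function_name: str, zip_bytes: bytes) -> None:
    """Upload new code and block until Lambda reports the update is complete."""
    lambda_client.update_function_code(FunctionName=function_name, ZipFile=zip_bytes)
    lambda_client.get_waiter("function_updated").wait(FunctionName=function_name)
```

For example, you might call `deploy_stack(boto3.client("cloudformation"), "recruitment-system", template_text)`, where the stack name is hypothetical. Passing clients in, rather than creating them inside the functions, keeps the logic testable without AWS credentials.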

Knowledge base integration

The central knowledge base manager interfaces with Amazon Bedrock knowledge base collections to provide best practices, templates, and standards to the recruitment agents. It enables AI agents to make informed decisions based on organizational knowledge.
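The knowledge base manager's core operation is a retrieval call. The following sketch uses the `retrieve` operation of the `bedrock-agent-runtime` client to fetch the top passages for a query and then formats them into a numbered context block for an agent prompt. The function names and the 4,000-character context budget are illustrative assumptions, not part of the original solution.

```python
def retrieve_guidance(agent_runtime, knowledge_base_id: str, query: str, top_k: int = 5) -> list:
    """Return the top_k passages from a knowledge base as plain dicts."""
    response = agent_runtime.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": query},
        retrievalConfiguration={"vectorSearchConfiguration": {"numberOfResults": top_k}},
    )
    return [
        {"text": r["content"]["text"], "score": r.get("score"), "location": r.get("location")}
        for r in response.get("retrievalResults", [])
    ]


def format_context(passages: list, max_chars: int = 4000) -> str:
    """Join passages into a numbered block, stopping at a rough character budget."""
    parts, used = [], 0
    for i, passage in enumerate(passages, start=1):
        chunk = f"[{i}] {passage['text'].strip()}"
        if used + len(chunk) > max_chars:
            break  # separators are not counted, so the budget is approximate
        parts.append(chunk)
        used += len(chunk)
    return "\n\n".join(parts)
```

In a Lambda function, `agent_runtime` would be `boto3.client("bedrock-agent-runtime")`, and the knowledge base ID would come from configuration such as an environment variable.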

To improve Retrieval Augmented Generation (RAG) quality, start by tuning your Amazon Bedrock knowledge bases. Adjust chunk sizes and overlap for your documents, experiment with different embedding models, and enable reranking to promote the most relevant passages. For each agent, you can also choose different foundation models. For example, use a fast model such as Anthropic’s Claude 3 Haiku for high-volume job description and communication tasks, and a more capable model such as Anthropic’s Claude 3 Sonnet or another reasoning-optimized model for the Interview Prep Agent, where deeper analysis is required. Capture these experiments as part of your continuous improvement process so you can standardize on the best-performing configurations.
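To make these experiments repeatable, you can keep the candidate settings in code. The following sketch builds fixed-size chunking configurations in the shape that the `bedrock-agent` `create_data_source` operation accepts as `vectorIngestionConfiguration`, and records a per-agent model choice. The model IDs reflect the example above; which models and versions you can use depends on your account and AWS Region. The `AGENT_MODELS` mapping, the labels, and the grid helper are hypothetical.

```python
# Hypothetical per-agent model assignment; confirm model access in your Region.
AGENT_MODELS = {
    "job_description": "anthropic.claude-3-haiku-20240307-v1:0",
    "communication": "anthropic.claude-3-haiku-20240307-v1:0",
    "interview_prep": "anthropic.claude-3-sonnet-20240229-v1:0",
}


def fixed_size_chunking(max_tokens: int, overlap_percentage: int) -> dict:
    """Build a vectorIngestionConfiguration using fixed-size chunking."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 1 <= overlap_percentage <= 99:
        raise ValueError("overlap_percentage must be between 1 and 99")
    return {
        "chunkingConfiguration": {
            "chunkingStrategy": "FIXED_SIZE",
            "fixedSizeChunkingConfiguration": {
                "maxTokens": max_tokens,
                "overlapPercentage": overlap_percentage,
            },
        }
    }


def experiment_grid(token_sizes, overlap_percentages) -> list:
    """Every combination of chunk size and overlap, labeled for tracking results."""
    return [
        {"label": f"fixed-{t}tok-{o}pct", "config": fixed_size_chunking(t, o)}
        for t in token_sizes
        for o in overlap_percentages
    ]
```

Each grid entry would typically become its own data source, so you can compare retrieval quality side by side before standardizing on one configuration.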

The core AI agents

The system uses three specialized AI agents. Each agent is exposed through its own endpoint, and API Gateway and Lambda handle the integration between them.
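A minimal sketch of one agent endpoint, assuming a Lambda proxy integration in API Gateway: the handler decodes and validates the JSON body and returns a response in the `statusCode`/`headers`/`body` shape that proxy integrations expect. `REQUIRED_FIELDS` and `process_request` are hypothetical placeholders; in the Job Description Agent, `process_request` would call Amazon Bedrock.

```python
import base64
import json

# Hypothetical required fields for the Job Description Agent endpoint.
REQUIRED_FIELDS = ("title", "requirements")


def _response(status: int, body: dict) -> dict:
    """Build a response in the shape API Gateway proxy integrations expect."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def process_request(payload: dict) -> dict:
    """Hypothetical placeholder; a real agent would call Amazon Bedrock here."""
    return {"title": payload["title"], "status": "draft_requested"}


def handler(event, context):
    body = event.get("body") or "{}"
    try:
        if event.get("isBase64Encoded") and event.get("body"):
            body = base64.b64decode(body).decode("utf-8")
        payload = json.loads(body)
    except ValueError:  # covers bad base64, bad UTF-8, and invalid JSON
        return _response(400, {"error": "Request body must be valid JSON"})
    if not isinstance(payload, dict):
        return _response(400, {"error": "Request body must be a JSON object"})
    missing = [name for name in REQUIRED_FIELDS if name not in payload]
    if missing:
        return _response(400, {"error": "Missing fields: " + ", ".join(missing)})
    return _response(200, process_request(payload))
```

Keeping validation in the handler and the model call in `process_request` lets each agent's Lambda function reuse the same request and response handling.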

Job Description Agent

This agent is the first step in the recruitment pipeline. It uses Amazon Bedrock to create inclusive and effective job descriptions by combining requirements with best practices from the knowledge base.
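The following sketch shows how the agent might combine role requirements with guidelines retrieved from the knowledge base and send them to a model through the Amazon Bedrock Converse API (`converse` on the `bedrock-runtime` client). The system prompt, section headings, and inference settings are illustrative assumptions rather than the solution's actual prompt.

```python
SYSTEM_PROMPT = (
    "You write inclusive, accurate job descriptions. Use gender-neutral language, "
    "separate required from preferred qualifications, and avoid unnecessary jargon."
)


def build_jd_prompt(title: str, requirements: list, guidelines: list) -> str:
    """Combine hiring-manager input with guidelines retrieved from the knowledge base."""
    reqs = "\n".join(f"- {r}" for r in requirements)
    guide = "\n".join(f"- {g}" for g in guidelines) or "- (no guidelines retrieved)"
    return (
        f"Role title: {title}\n\n"
        f"Requirements:\n{reqs}\n\n"
        f"Organizational guidelines:\n{guide}\n\n"
        "Draft a job description with these sections: Summary, Responsibilities, "
        "Required qualifications, Preferred qualifications."
    )


def draft_job_description(bedrock_runtime, model_id, title, requirements, guidelines) -> str:
    """Call the Converse API and return the model's text response."""
    response = bedrock_runtime.converse(
        modelId=model_id,
        system=[{"text": SYSTEM_PROMPT}],
        messages=[{"role": "user", "content": [{"text": build_jd_prompt(title, requirements, guidelines)}]}],
        inferenceConfig={"maxTokens": 1500, "temperature": 0.3},
    )
    return response["output"]["message"]["content"][0]["text"]
```

A low temperature keeps drafts close to the guidelines; a recruiter still reviews and edits the result before it is posted.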

Communication Agent

This agent manages candidate communications throughout the recruitment process. It integrates with Amazon SNS for notifications and provides professional, consistent messaging using approved templates.
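A minimal sketch of the template-driven approach, assuming approved templates are held as Python `string.Template` objects (in the solution they would come from the Communication Agent's knowledge base and approval workflow) and delivered with the SNS `publish` operation. Because a topic delivers to all of its subscribers, the sketch attaches a hypothetical `candidate_id` message attribute that subscription filter policies could match on. Template IDs and wording are also hypothetical.

```python
from string import Template

# Hypothetical approved templates.
APPROVED_TEMPLATES = {
    "application_received": Template(
        "Hi $name, thank you for applying for the $role position. "
        "We will contact you about next steps."
    ),
    "interview_scheduled": Template(
        "Hi $name, your interview for the $role position is scheduled for $time."
    ),
}


def render_message(template_id: str, **fields) -> str:
    if template_id not in APPROVED_TEMPLATES:
        raise ValueError(f"Unknown or unapproved template: {template_id}")
    # substitute() raises KeyError on a missing placeholder, so an
    # incomplete message is never sent
    return APPROVED_TEMPLATES[template_id].substitute(**fields)


def notify_candidate(sns, topic_arn: str, candidate_id: str, template_id: str,
                     subject: str, **fields) -> str:
    message = render_message(template_id, **fields)
    response = sns.publish(
        TopicArn=topic_arn,
        Subject=subject,
        Message=message,
        MessageAttributes={"candidate_id": {"DataType": "String", "StringValue": candidate_id}},
    )
    return response["MessageId"]
```

Restricting the agent to named templates means the model never writes free-form text that reaches a candidate without review.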

Interview Prep Agent

This agent prepares tailored interview materials and questions based on the role and candidate background. It helps maintain consistent interview standards while adapting to specific positions.
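To make generated materials easy to review and reuse, the agent can ask the model for structured output. The following sketch requests a JSON array of competency-tagged questions through the Converse API and validates the result before returning it. The prompt wording, the JSON shape, and the parsing strategy (taking the outermost `[...]` span) are assumptions; model output can still be malformed, so the parser raises `ValueError` rather than guessing.

```python
import json


def build_prep_prompt(role: str, competencies: list, standards: list) -> str:
    comp = "\n".join(f"- {c}" for c in competencies)
    std = "\n".join(f"- {s}" for s in standards)
    return (
        f"Role: {role}\nCompetencies to assess:\n{comp}\n"
        f"Interview standards to follow:\n{std}\n\n"
        "Write two behavioral questions per competency. Respond with only a JSON array "
        'of objects, each with the keys "competency" and "question".'
    )


def parse_questions(text: str) -> list:
    """Extract the outermost JSON array from model output and keep well-formed items."""
    start, end = text.find("["), text.rfind("]")
    if start == -1 or end < start:
        raise ValueError("No JSON array found in model output")
    items = json.loads(text[start:end + 1])  # JSONDecodeError is a ValueError
    return [
        item for item in items
        if isinstance(item, dict) and "competency" in item and "question" in item
    ]


def prepare_interview(bedrock_runtime, model_id, role, competencies, standards) -> list:
    response = bedrock_runtime.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": build_prep_prompt(role, competencies, standards)}]}],
        inferenceConfig={"maxTokens": 2000, "temperature": 0.2},
    )
    return parse_questions(response["output"]["message"]["content"][0]["text"])
```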

Testing and verification

The following test client demonstrates interaction with the recruitment system API. It provides example usage of major functions and helps verify system functionality.
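A minimal test client sketch using only the Python standard library. It assumes each agent is exposed as a `POST` route under an API Gateway stage URL and, optionally, protected by an API key sent in the `x-api-key` header; the base URL and route names such as `/job-description` are hypothetical.

```python
import json
import urllib.request
from typing import Optional


def build_request(base_url: str, path: str, payload: dict,
                  api_key: Optional[str] = None) -> urllib.request.Request:
    url = base_url.rstrip("/") + "/" + path.lstrip("/")
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["x-api-key"] = api_key  # header API Gateway checks for API keys
    return urllib.request.Request(
        url, data=json.dumps(payload).encode("utf-8"), headers=headers, method="POST"
    )


def call_agent(base_url: str, path: str, payload: dict,
               api_key: Optional[str] = None, timeout: float = 30.0) -> dict:
    request = build_request(base_url, path, payload, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Separating request construction from the network call lets you check URLs, headers, and payloads in unit tests before pointing the client at a deployed stage.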

During testing, track both qualitative and quantitative results. For example, measure recruiter satisfaction with generated job descriptions, response rates to candidate communications, and interviewers’ feedback on the usefulness of prep materials. Use these metrics to refine prompts, knowledge base contents, and model choices over time.
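One way to make these measurements visible is to publish them as custom CloudWatch metrics, which also supports the communication tracking described earlier. The following sketch computes a response rate and publishes metrics with an `Agent` dimension using `put_metric_data`; the `Recruitment/Agents` namespace, metric names, and dimension are hypothetical.

```python
def response_rate(sent: int, responded: int) -> float:
    """Percentage of candidate messages that received a reply."""
    return 0.0 if sent == 0 else 100.0 * responded / sent


def publish_agent_metrics(cloudwatch, agent: str, metrics: dict,
                          namespace: str = "Recruitment/Agents") -> list:
    """metrics maps a metric name to a (value, unit) pair, e.g. {"ResponseRate": (25.0, "Percent")}."""
    metric_data = [
        {
            "MetricName": name,
            "Value": float(value),
            "Unit": unit,
            "Dimensions": [{"Name": "Agent", "Value": agent}],
        }
        for name, (value, unit) in metrics.items()
    ]
    cloudwatch.put_metric_data(Namespace=namespace, MetricData=metric_data)
    return metric_data
```

Qualitative signals, such as recruiter ratings of a generated description, can be published the same way once you map them to a numeric scale.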

Clean up

To avoid ongoing charges when you’re done testing or if you want to tear down this solution, follow these steps in order:

- Delete Lambda resources:

  - Delete all functions created for the agents.

  - Remove associated CloudWatch log groups.

- Delete API Gateway endpoints:

  - Delete the API configurations.

  - Remove any custom domain names.

- Delete knowledge base collections:

  - Delete all collections.

  - Remove any custom policies.

  - Wait for collections to be fully deleted before continuing to the next steps.

- Delete SNS topics:

  - Delete all topics created for communications.

  - Remove any subscriptions.

- Delete VPC resources:

  - Remove VPC endpoints.

  - Delete security groups.

  - Delete the VPC if it was created specifically for this solution.

- Clean up IAM resources:

  - Delete IAM roles created for the solution.

  - Remove any associated policies.

  - Delete service-linked roles if no longer needed.

- Delete KMS keys:

  - Schedule key deletion for unused KMS keys (keep keys if they’re used by other applications).

- Delete CloudWatch resources:

  - Delete dashboards.

  - Delete alarms.

  - Delete any custom metrics.

- Clean up S3 buckets:

  - Empty buckets used for knowledge bases.

  - Delete the buckets.

- Delete the Amazon Bedrock knowledge base.
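If you deployed the infrastructure with CloudFormation, much of this cleanup can be scripted. The following sketch, assuming boto3 clients and an S3 resource are passed in and that the buckets belong to the stack, empties the knowledge base buckets first (CloudFormation can't delete a bucket that still contains objects), deletes the stack and waits for completion, and then removes the Lambda log groups, which Lambda creates outside the stack. Resources created outside the stack, such as KMS keys or a knowledge base created in the console, still need the manual steps above.

```python
def empty_bucket(s3_resource, bucket_name: str) -> None:
    """Delete every object and object version so the bucket can be removed."""
    bucket = s3_resource.Bucket(bucket_name)
    bucket.objects.all().delete()
    bucket.object_versions.all().delete()  # needed if versioning was ever enabled


def delete_stack(cfn, stack_name: str) -> None:
    cfn.delete_stack(StackName=stack_name)
    cfn.get_waiter("stack_delete_complete").wait(StackName=stack_name)


def delete_lambda_log_groups(logs, function_names) -> None:
    for name in function_names:
        try:
            logs.delete_log_group(logGroupName=f"/aws/lambda/{name}")
        except logs.exceptions.ResourceNotFoundException:
            pass  # the function was never invoked, or the group is already gone


def cleanup(cfn, s3_resource, logs, stack_name, bucket_names, function_names) -> None:
    # Order matters: CloudFormation can't delete buckets that still contain objects.
    for bucket_name in bucket_names:
        empty_bucket(s3_resource, bucket_name)
    delete_stack(cfn, stack_name)
    delete_lambda_log_groups(logs, function_names)
```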

After cleanup, take these steps to verify all charges are stopped:

- Check your AWS bill for the next billing cycle

- Verify all services have been properly terminated

- Contact AWS Support if you notice any unexpected charges

Document the resources you’ve created and use this list as a checklist during cleanup to make sure you don’t miss any components that could continue to generate charges.

Implementing AI in recruitment: Best practices

To successfully implement AI in recruitment while maintaining ethical standards and human oversight, consider these essential practices.

Security, compliance, and infrastructure

The security implementation should take a comprehensive approach to protecting every part of the recruitment system. The solution deploys within a properly configured VPC with carefully defined security groups. Data at rest should be encrypted with AWS KMS keys and data in transit protected with TLS, and IAM roles should follow strict least-privilege principles. CloudWatch monitoring and audit logging provide visibility into system activity, and secure API Gateway endpoints manage external communications. To protect sensitive information, implement data tokenization for personally identifiable information (PII) and maintain strict data retention policies. Regular privacy impact assessments and documented incident response procedures support ongoing security compliance.

Consider implementing Amazon Bedrock Guardrails to provide granular control over AI model outputs, helping you enforce consistent safety and compliance standards across your AI applications. By implementing rule-based filters and boundaries, teams can prevent inappropriate content, maintain professional communication standards, and make sure responses align with their organization’s policies. You can configure guardrails at multiple levels, from individual agents to organization-wide implementations, with customizable controls for content filtering, topic restrictions, and response parameters. This systematic approach helps organizations mitigate risks while using AI capabilities, particularly in regulated industries or customer-facing applications where appropriate, unbiased, and safe interactions are crucial.
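As a sketch of output checking, the `apply_guardrail` operation on the `bedrock-runtime` client evaluates text against a guardrail you have already created, without invoking a model. The following function checks an agent's draft and returns the guardrail's replacement text when it intervenes; the guardrail ID and version are placeholders. Alternatively, you can attach a guardrail directly to a model call through the Converse API's `guardrailConfig` parameter.

```python
def check_output(bedrock_runtime, guardrail_id: str, guardrail_version: str, text: str):
    """Return (intervened, text_to_use) for a draft produced by an agent."""
    response = bedrock_runtime.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source="OUTPUT",
        content=[{"text": {"text": text}}],
    )
    if response["action"] == "GUARDRAIL_INTERVENED":
        # outputs carries the guardrail's replacement text, such as a
        # blocked-content message or the draft with sensitive data masked
        replacement = "".join(o.get("text", "") for o in response.get("outputs", []))
        return True, replacement
    return False, text
```

A draft that comes back with `intervened` set to `True` could be routed to a human reviewer instead of being sent or posted.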

Knowledge base architecture and management

The knowledge base architecture should follow a hub-and-spoke model centered around a core repository of organizational knowledge. This central hub maintains essential information including company values, policies, and requirements, along with shared reference data used across the agents. Version control and backup procedures maintain data integrity and availability.

Surrounding this central hub, specialized knowledge bases serve each agent’s unique needs. The Job Description Agent accesses writing guidelines and inclusion requirements. The Communication Agent draws from approved message templates and workflow definitions, and the Interview Prep Agent uses comprehensive question banks and evaluation criteria.
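A minimal sketch of hub-and-spoke retrieval, assuming one shared hub knowledge base plus one spoke per agent: each agent queries both and merges the results by relevance score. The knowledge base IDs are hypothetical placeholders. Scores from different knowledge bases aren't guaranteed to be comparable (for example, if they use different embedding models), so treat the merge as a starting point and consider reranking.

```python
# Hypothetical knowledge base IDs for the hub and each agent's spoke.
HUB_KB_ID = "HUB_KB_ID"
SPOKE_KB_IDS = {
    "job_description": "JOB_DESCRIPTION_KB_ID",
    "communication": "COMMUNICATION_KB_ID",
    "interview_prep": "INTERVIEW_PREP_KB_ID",
}


def knowledge_bases_for(agent: str) -> list:
    if agent not in SPOKE_KB_IDS:
        raise KeyError(f"Unknown agent: {agent}")
    return [HUB_KB_ID, SPOKE_KB_IDS[agent]]


def retrieve_for_agent(agent_runtime, agent: str, query: str, top_k: int = 5) -> list:
    """Query the hub and the agent's spoke, then merge results by score."""
    merged = []
    for kb_id in knowledge_bases_for(agent):
        response = agent_runtime.retrieve(
            knowledgeBaseId=kb_id,
            retrievalQuery={"text": query},
            retrievalConfiguration={"vectorSearchConfiguration": {"numberOfResults": top_k}},
        )
        for result in response.get("retrievalResults", []):
            merged.append({
                "knowledge_base": kb_id,
                "text": result["content"]["text"],
                "score": result.get("score") or 0.0,
            })
    merged.sort(key=lambda item: item["score"], reverse=True)
    return merged[:top_k]
```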

System integration and workflows

Successful system operation relies on robust integration practices and clearly defined workflows. Error handling and retry mechanisms facilitate reliable operation, and clear handoff points between agents maintain process integrity. The system should maintain detailed documentation of dependencies and data flows, with circuit breakers protecting against cascade failures. Regular testing through automated frameworks and end-to-end workflow validation supports consistent performance and reliability.
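The following sketch shows a simple circuit breaker and a retry helper with exponential backoff and full jitter, which a Lambda function could wrap around calls to a downstream agent or service. Thresholds and timeouts are illustrative. For AWS SDK calls themselves, botocore already provides configurable retries (for example, `Config(retries={"max_attempts": 5, "mode": "standard"})`), so application-level retries are mainly useful for your own agent-to-agent handoffs.

```python
import random
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and calls are being short-circuited."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_timeout:
            raise CircuitOpenError("Downstream service unavailable; call skipped")
        # Closed, or half-open after the timeout: let this call through.
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed trial call
            raise
        self.failures = 0
        self.opened_at = None
        return result


def retry(fn, attempts: int = 3, base_delay: float = 0.5, sleep=time.sleep):
    """Call fn, retrying failures with exponential backoff and full jitter."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # don't keep calling a service the breaker has marked unhealthy
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(random.uniform(0, base_delay * 2 ** attempt))
```

In a long-running process, keep one breaker per downstream dependency; in Lambda, breaker state lasts only as long as the execution environment, so a shared store would be needed for fleet-wide behavior.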

Human oversight and governance

The AI-powered recruitment system should prioritize human oversight and governance to promote ethical and fair practices. Establish mandatory review checkpoints throughout the process where human recruiters assess AI recommendations and make final decisions. To handle exceptional cases, create clear escalation paths that allow for human intervention when needed. Sensitive actions, such as final candidate selections or offer approvals, should be subject to multi-level human approval workflows.

To maintain high standards, continuously monitor decision quality and accuracy, comparing AI recommendations with human decisions to identify areas for improvement. The team should undergo regular training programs to stay updated on the system’s capabilities and limitations, making sure they can effectively oversee and complement the AI’s work. Document clear override procedures, so recruiters can adjust or override AI decisions when necessary. Regular compliance training for team members reinforces the commitment to ethical AI use in recruitment.
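A minimal sketch of a multi-level approval gate, assuming sensitive actions are identified by name and require a fixed number of distinct human reviewers. The action names and approval counts in `REQUIRED_APPROVALS` are hypothetical; in production, this state would live in a durable store and be tied to authenticated identities rather than reviewer strings.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

# Hypothetical policy: number of distinct human approvals per sensitive action.
REQUIRED_APPROVALS = {"final_selection": 2, "offer_approval": 2}


@dataclass
class ApprovalRequest:
    action: str
    candidate_id: str
    ai_recommendation: str
    approvals: Set[str] = field(default_factory=set)
    decision: Optional[str] = None
    override_reason: Optional[str] = None

    def required_approvals(self) -> int:
        # Actions not listed still need one human reviewer.
        return REQUIRED_APPROVALS.get(self.action, 1)

    def approve(self, reviewer: str) -> None:
        # A set ignores repeat approvals from the same reviewer.
        self.approvals.add(reviewer)

    def override(self, reviewer: str, decision: str, reason: str) -> None:
        """Record a human decision that replaces the AI recommendation."""
        if not reason.strip():
            raise ValueError("An override must include a documented reason")
        self.decision = decision
        self.override_reason = reason
        self.approvals.add(reviewer)

    def finalize(self) -> str:
        needed = self.required_approvals()
        if len(self.approvals) < needed:
            raise PermissionError(f"{self.action} needs {needed} distinct human approvals")
        return self.decision if self.decision is not None else self.ai_recommendation
```

Recording `override_reason` alongside the AI recommendation also gives you the data needed to compare AI and human decisions over time.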

Performance and cost management

To optimize system efficiency and manage costs effectively, implement a multi-faceted approach. Automatic scaling for Lambda functions makes sure the system can handle varying workloads without unnecessary resource allocation. For predictable workloads, use AWS Savings Plans to reduce costs without sacrificing performance. You can estimate the solution costs using the AWS Pricing Calculator, which helps plan for services like Amazon Bedrock, Lambda, and Amazon Bedrock Knowledge Bases.

Comprehensive CloudWatch dashboards provide real-time visibility into system performance, helping the team identify and address issues quickly. Establish performance baselines and regularly monitor against these to detect deviations or areas for improvement. Cost allocation tags help track expenses across different departments or projects, enabling more accurate budgeting and resource allocation.

To avoid unexpected costs, configure budget alerts that notify the team when spending approaches predefined thresholds. Regular capacity planning reviews make sure the infrastructure keeps pace with organizational growth and changing recruitment needs.
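Budget alerts can be created with the AWS Budgets `create_budget` operation. The following sketch builds a monthly cost budget with an email notification at each percentage threshold of actual spend; the account ID, budget name, limit, thresholds, and address you pass in are placeholders.

```python
def budget_request(account_id: str, name: str, monthly_limit_usd: float,
                   thresholds_pct: list, email: str) -> dict:
    """Build create_budget arguments for a monthly cost budget with alerts."""
    return {
        "AccountId": account_id,
        "Budget": {
            "BudgetName": name,
            "BudgetLimit": {"Amount": f"{monthly_limit_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
        },
        "NotificationsWithSubscribers": [
            {
                "Notification": {
                    "NotificationType": "ACTUAL",  # alert on actual, not forecasted, spend
                    "ComparisonOperator": "GREATER_THAN",
                    "Threshold": float(pct),
                    "ThresholdType": "PERCENTAGE",
                },
                "Subscribers": [{"SubscriptionType": "EMAIL", "Address": email}],
            }
            for pct in thresholds_pct
        ],
    }


def create_monthly_budget(budgets_client, account_id, name, monthly_limit_usd,
                          thresholds_pct, email) -> dict:
    request = budget_request(account_id, name, monthly_limit_usd, thresholds_pct, email)
    budgets_client.create_budget(**request)
    return request
```

An early threshold (such as 80 percent) gives the team time to react, and a second alert at 100 percent confirms when the limit has been crossed.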

Continuous improvement framework

Commitment to excellence should be reflected in a continuous improvement framework. Conduct regular metric reviews and gather stakeholder feedback to identify areas for enhancement. A/B testing of new features or process changes allows for data-driven decisions about improvements. Maintain a comprehensive system of documentation, capturing lessons learned from each iteration or challenge encountered. This knowledge informs ongoing training data updates, making sure AI models remain current and effective. The improvement cycle should include regular system optimization, where algorithms are fine-tuned, knowledge bases updated, and workflows refined based on performance data and user feedback. Analyze performance trends over time so you can address potential issues proactively and build on successful strategies. Stakeholder satisfaction should be a key metric in the improvement framework. Regularly gather feedback from recruiters, hiring managers, and candidates to verify whether the AI-powered system meets the needs of all parties involved in the recruitment process.
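For A/B tests, a common approach is deterministic assignment: hash a stable identifier together with the experiment name so the same recruiter, requisition, or candidate always sees the same variant. The following sketch uses SHA-256 from the standard library; the experiment and variant names are hypothetical, and equal-sized variants are assumed.

```python
import hashlib


def assign_variant(unit_id: str, experiment: str, variants=("control", "treatment")) -> str:
    """Deterministically map an ID to one of the variants for a given experiment."""
    digest = hashlib.sha256(f"{experiment}:{unit_id}".encode("utf-8")).digest()
    # Use the first 8 bytes as an unsigned integer; modulo bias is negligible here.
    return variants[int.from_bytes(digest[:8], "big") % len(variants)]
```

Including the experiment name in the hash means a unit's assignment in one test doesn't determine its assignment in the next, which keeps experiments independent.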

Solution evolution and agent orchestration

As AI implementations mature and organizations develop multiple specialized agents, the need for sophisticated orchestration becomes critical. Amazon Bedrock AgentCore provides the foundation for managing this evolution, facilitating seamless coordination and communication between agents while maintaining centralized control. This orchestration layer streamlines the management of complex workflows, optimizes resource allocation, and supports efficient task routing based on agent capabilities. By implementing Amazon Bedrock AgentCore as part of your solution architecture, organizations can scale their AI operations smoothly, maintain governance standards, and support increasingly complex use cases that require collaboration between multiple specialized agents. This systematic approach to agent orchestration helps future-proof your AI infrastructure while maximizing the value of your agent-based solutions.

Conclusion

AWS AI services offer specific capabilities that can be used to transform recruitment and talent acquisition processes. By using these services and maintaining a strong focus on human oversight, organizations can create more efficient, fair, and effective hiring practices. The goal of AI in recruitment is not to replace human decision-making, but to augment and support it, helping HR professionals focus on the most valuable aspects of their roles: building relationships, assessing cultural fit, and making nuanced decisions that impact people’s careers and organizational success. As you embark on your AI-powered recruitment journey, start small, focus on tangible improvements, and keep the candidate and employee experience at the forefront of your efforts. With the right approach, AI can help you build a more diverse, skilled, and engaged workforce, driving your organization’s success in the long term.

About the Authors

Dola Adesanya is a Customer Solutions Manager at Amazon Web Services (AWS), where she leads high-impact programs across customer success, cloud transformation, and AI-driven system delivery. With a unique blend of business strategy and organizational psychology expertise, she specializes in turning complex challenges into actionable solutions. Dola brings extensive experience in scaling programs and delivering measurable business outcomes.

Ron Hayman leads Customer Solutions for US Enterprise and Software Internet & Foundation Models at Amazon Web Services (AWS). His organization helps customers migrate infrastructure, modernize applications, and implement generative AI solutions. Over his 20-year career as a global technology executive, Ron has built and scaled cloud, security, and customer success teams. He combines deep technical expertise with a proven track record of developing leaders, organizing teams, and delivering customer outcomes.

Achilles Figueiredo is a Senior Solutions Architect at Amazon Web Services (AWS), where he designs and implements enterprise-scale cloud architectures. As a trusted technical advisor, he helps organizations navigate complex digital transformations while implementing innovative cloud solutions. He actively contributes to AWS’s technical advancement through AI, Security, and Resilience initiatives and serves as a key resource for both strategic planning and hands-on implementation guidance.

Sai Jeedigunta is a Sr. Customer Solutions Manager at AWS. He is passionate about partnering with executives and cross-functional teams in driving cloud transformation initiatives and helping them realize the benefits of cloud. He has over 20 years of experience in leading IT infrastructure engagements for Fortune enterprises.
