GenAI News

Scale AI in South Africa using Amazon Bedrock global cross-Region inference...

Building AI applications with Amazon Bedrock can present throughput challenges that affect the scalability of your applications. Global cross-Region inference in the af-south-1 AWS Region changes that. You...

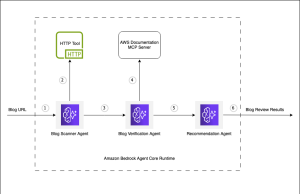

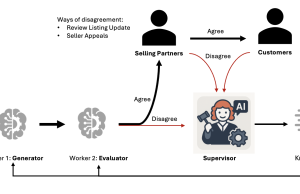

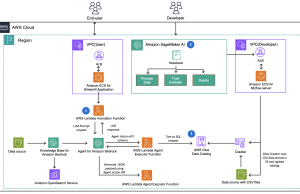

Scaling content review operations with multi-agent workflow

Enterprises are managing ever-growing volumes of content, ranging from product catalogs and support articles to knowledge bases and technical documentation. Ensuring this information remains...

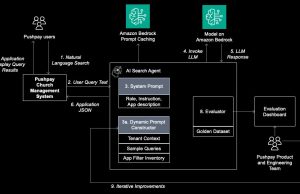

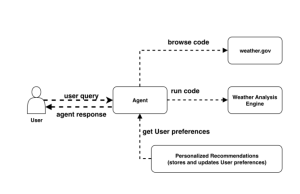

Build a reliable Agentic AI solution with Amazon Bedrock: Learn from Pushpay’s...

This post was co-written with Saurabh Gupta and Todd Colby from Pushpay.

Pushpay is a market-leading digital giving and engagement platform designed to help churches and faith-based...

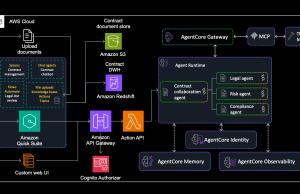

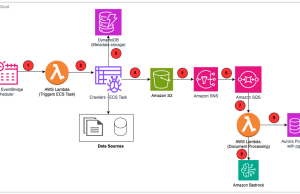

Build an intelligent contract management solution with Amazon Quick Suite and...

Organizations managing hundreds of contracts annually face significant inefficiencies, with fragmented systems and complex workflows that require teams to spend hours on contract review...

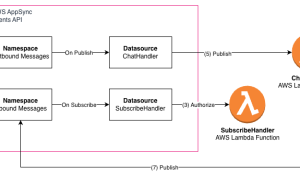

Build a serverless AI Gateway architecture with AWS AppSync Events

AWS AppSync Events can help you create more secure, scalable WebSocket APIs. In addition to broadcasting real-time events to millions of WebSocket subscribers, it...

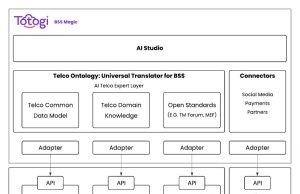

How Totogi automated change request processing with Totogi BSS Magic and...

This post is cowritten by Nikhil Mathugar, Marc Breslow and Sudhanshu Sinha from Totogi.

This blog post describes how Totogi automates change request processing. Totogi...

How the Amazon.com Catalog Team built self-learning generative AI at scale...

The Amazon.com Catalog is the foundation of every customer’s shopping experience—the definitive source of product information with attributes that power search, recommendations, and discovery....

Build AI agents with Amazon Bedrock AgentCore using AWS CloudFormation

Agentic AI has become essential for deploying production-ready AI applications, yet many developers struggle with the complexity of manually configuring agent infrastructure across multiple environments....

How CLICKFORCE accelerates data-driven advertising with Amazon Bedrock Agents

CLICKFORCE is one of the leaders in digital advertising services in Taiwan, specializing in data-driven advertising and conversion (D4A – Data for Advertising & Action). With...

How PDI built an enterprise-grade RAG system for AI applications with...

PDI Technologies is a global leader in the convenience retail and petroleum wholesale industries. They help businesses around the globe increase efficiency and profitability...