GenAI News

Exploring the Real-Time Race Track with Amazon Nova

This post is co-written by Jake Friedman, President + Co-founder of Wildlife.

Amazon Nova is enhancing sports fan engagement through an immersive Formula 1 (F1)-inspired...

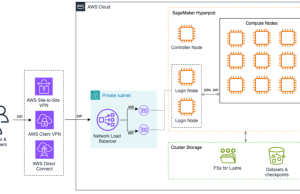

Accelerating HPC and AI research in universities with Amazon SageMaker HyperPod

This post was written with Mohamed Hossam of Brightskies.

Research universities engaged in large-scale AI and high-performance computing (HPC) often face significant infrastructure challenges that...

Build character consistent storyboards using Amazon Nova in Amazon Bedrock –...

The art of storyboarding stands as the cornerstone of modern content creation, weaving its essential role through filmmaking, animation, advertising, and UX design. Though...

Build character consistent storyboards using Amazon Nova in Amazon Bedrock –...

Although careful prompt crafting can yield good results, achieving professional-grade visual consistency often requires adapting the underlying model itself. Building on the prompt engineering...

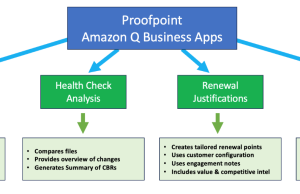

Unlocking the future of professional services: How Proofpoint uses Amazon Q...

This post was written with Stephen Coverdale and Alessandra Filice of Proofpoint.

At the forefront of cybersecurity innovation, Proofpoint has redefined its professional services by...

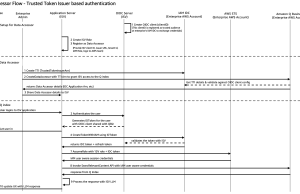

Authenticate Amazon Q Business data accessors using a trusted token issuer

Since its general availability in 2024, Amazon Q Business (Amazon Q) has enabled independent software vendors (ISVs) to enhance their Software as a Service...

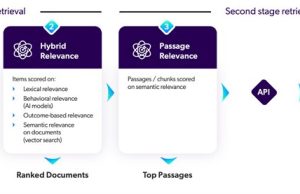

Enhancing LLM accuracy with Coveo Passage Retrieval on Amazon Bedrock

This post is co-written with Keith Beaudoin and Nicolas Bordeleau from Coveo.

As generative AI transforms business operations, enterprises face a critical challenge: how can...

Train and deploy models on Amazon SageMaker HyperPod using the new...

Training and deploying large AI models requires advanced distributed computing capabilities, but managing these distributed systems shouldn’t be complex for data scientists and machine...

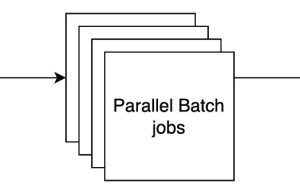

Build a serverless Amazon Bedrock batch job orchestration workflow using AWS...

As organizations increasingly adopt foundation models (FMs) for their artificial intelligence and machine learning (AI/ML) workloads, managing large-scale inference operations efficiently becomes crucial. Amazon...

Announcing the new cluster creation experience for Amazon SageMaker HyperPod

Today, Amazon SageMaker HyperPod is announcing a new one-click, validated cluster creation experience that accelerates setup and prevents common misconfigurations, so you can launch...