Data is your generative AI differentiator, and successful generative AI implementation depends on a robust data strategy incorporating a comprehensive data governance approach. Traditional data architectures often struggle to meet the unique demands of generative AI applications. An effective generative AI data strategy requires several key components, such as seamless integration of diverse data sources, real-time processing capabilities, comprehensive data governance frameworks that maintain data quality and compliance, and secure access patterns that respect organizational boundaries. In particular, Retrieval Augmented Generation (RAG) applications have emerged as one of the most promising developments in this space. RAG is the process of optimizing the output of a foundation model (FM) so that it references a knowledge base outside of its training data sources before generating a response. Such systems require secure, scalable, and flexible data ingestion and access patterns to enterprise data.

In this post, we explore how to build a secure RAG application using serverless data lake architecture, an important data strategy to support generative AI development. We use Amazon Web Services (AWS) services including Amazon S3, Amazon DynamoDB, AWS Lambda, and Amazon Bedrock Knowledge Bases to create a comprehensive solution supporting unstructured data assets which can be extended to structured data. The post covers how to implement fine-grained access controls for your enterprise data and design metadata-driven retrieval systems that respect security boundaries. These approaches will help you maximize the value of your organization’s data while maintaining robust security and compliance.

Use case overview

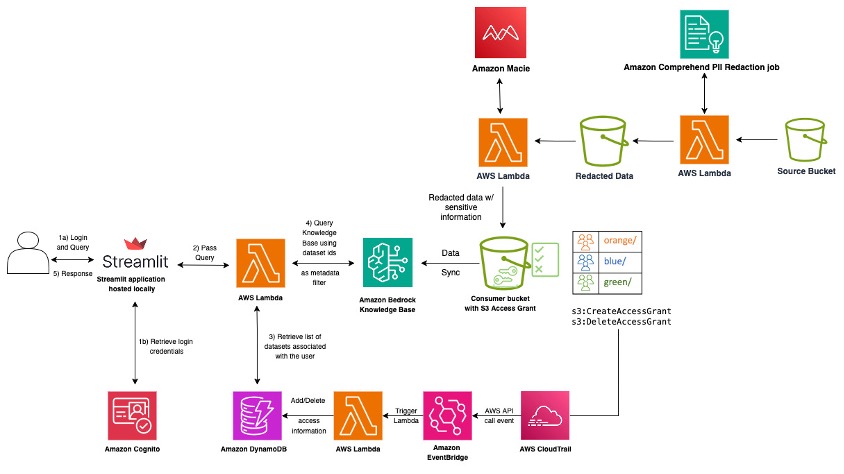

As an example, consider a RAG-based generative AI application. The following diagram shows the typical conversational workflow that is initiated with a user prompt, for example, operation specialists in a retail company querying internal knowledge to get procurement and supplier details. Each user prompt is augmented with relevant contexts from data residing in an enterprise data lake.

In the solution, the user interacts with the Streamlit frontend, which serves as the application interface. Amazon Cognito enables identity provider (IdP) integration through IAM Identity Center, so that only authorized users can access the application. For production use, we recommend that you use a more robust frontend framework such as AWS Amplify, which provides a comprehensive set of tools and services for building scalable and secure web applications. After the user has successfully signed in, the application retrieves the list of datasets associated with the user’s ID from the DynamoDB table. This list is then applied as a filter when querying the knowledge base, so that answers come only from datasets the user is authorized to access. This is possible because, when the datasets are ingested, the knowledge base is prepopulated with metadata files, stored in Amazon S3, that contain the user principal-to-dataset mapping. The knowledge base returns the relevant results, which are then sent back to the application and displayed to the user.

The datasets reside in a serverless data lake on Amazon S3 and are governed using Amazon S3 Access Grants with IAM Identity Center trusted identity propagation enabling automated data permissions at scale. When an access grant is created or deleted for a user or group, the information is added to the DynamoDB table through event-driven architecture using AWS CloudTrail and Amazon EventBridge.

The workflow includes the following key data foundation steps:

- Apply access policies to extract permissions for the relevant data and filter results based on the prompting user’s role and permissions.

- Enforce data privacy policies such as personally identifiable information (PII) redactions.

- Enforce fine-grained access control.

- Grant the user role permissions for sensitive information and compliance policies based on dataset classification in the data lake.

- Extract, transform, and load multimodal data assets into a vector store.

In the following sections, we explain why a modern data strategy is important for generative AI and what challenges it solves.

Serverless data lakes powering RAG applications

Organizations implementing RAG applications face several critical challenges that impact both functionality and cost-effectiveness. At the forefront is security and access control. Applications must carefully balance broad data access with strict security boundaries. These systems need to allow access to data sources by only authorized users, apply dynamic filtering based on permissions and classifications, and maintain security context throughout the entire retrieval and generation process. This comprehensive security approach helps prevent unauthorized information exposure while still enabling powerful AI capabilities.

Data discovery and relevance present another significant hurdle. When dealing with petabytes of enterprise data, organizations must implement sophisticated systems for metadata management and advanced indexing. These systems need to understand query context and intent while efficiently ranking retrieval results to make sure users receive the most relevant information. Without proper attention to these aspects, RAG applications risk returning irrelevant or outdated information that diminishes their utility.

Performance considerations become increasingly critical as these systems scale. RAG applications must maintain consistent low latency while processing large document collections, handling multiple concurrent users, integrating data from distributed sources, and retrieving relevant data. The challenge of balancing real-time and historical data access adds another layer of complexity to maintaining responsive performance at scale.

Cost management represents a final key challenge that organizations must address. Without careful architectural planning, RAG implementations can lead to unnecessary expenses through duplicate data storage, excessive vector database operations, and inefficient data transfer patterns. Organizations need to optimize their resource utilization carefully to help prevent these costs from escalating while maintaining system performance and functionality.

A modern data strategy addresses the complex challenges of RAG applications through comprehensive governance frameworks and robust architectural components. At its core, the strategy implements sophisticated governance mechanisms that go beyond traditional data management approaches. These frameworks enable AI systems to dynamically access enterprise information while maintaining strict control over data lineage, access patterns, and regulatory compliance. By implementing comprehensive provenance tracking, usage auditing, and compliance frameworks, organizations can operate their RAG applications within established ethical and regulatory boundaries.

Serverless data lakes serve as the foundational component of this strategy, offering an elegant solution to both performance and cost challenges. Their inherent scalability automatically handles varying workloads without requiring complex capacity planning, and pay-per-use pricing models facilitate cost efficiency. The ability to support multiple data formats—from structured to unstructured—makes them particularly well-suited for RAG applications that need to process and index diverse document types.

To address security and access control challenges, the strategy implements enterprise-level data sharing mechanisms. These include sophisticated cross-functional access controls and federated access management systems that enable secure data exchange across organizational boundaries. Fine-grained permissions at the row, column, and object levels enforce security boundaries while maintaining necessary data access for AI systems.

Data discoverability challenges are met through centralized cataloging systems that help prevent duplicate efforts and enable efficient resource utilization. This comprehensive approach includes business glossaries, technical catalogs, and data lineage tracking, so that teams can quickly locate and understand available data assets. The catalog system is enriched with quality metrics that help maintain data accuracy and consistency across the organization.

Finally, the strategy implements a structured data classification framework that addresses security and compliance concerns. By categorizing information into clear sensitivity levels from public to restricted, organizations can create RAG applications that only retrieve and process information appropriate to user access levels. This systematic approach to data classification helps prevent unauthorized information disclosure while maintaining the utility of AI systems across different business contexts.

Our solution uses AWS services to create a secure, scalable foundation for enterprise RAG applications. The components are explained in the following sections.

Data lake structure using Amazon S3

Our data lake uses Amazon S3 as the primary storage layer. Each business domain has a dedicated folder containing domain-specific data, and common data is stored in a shared folder.
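The layout described above can be sketched as a small prefix helper. This is a minimal illustration, not the post's original listing: the bucket name, the domain folder names, and the `shared/` prefix are assumptions chosen for the example.

```python
# Hypothetical bucket and folder names, chosen only for illustration.
BUCKET = "example-enterprise-data-lake"
DOMAINS = ("finance", "retail", "supply-chain")
SHARED_PREFIX = "shared/"


def domain_prefix(domain: str) -> str:
    """Return the S3 key prefix (folder) dedicated to one business domain."""
    if domain not in DOMAINS:
        raise ValueError(f"unknown domain: {domain}")
    return f"{domain}/"


def readable_prefixes(domain: str) -> list:
    """A domain reads its own folder plus the shared folder."""
    return [domain_prefix(domain), SHARED_PREFIX]


def object_uri(domain: str, key: str) -> str:
    """Build the s3:// URI for an object stored under a domain folder."""
    return f"s3://{BUCKET}/{domain_prefix(domain)}{key}"
```

Keeping one top-level prefix per domain means that access rules can be expressed per prefix rather than per object.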

Data sharing options

There are two options for data sharing. The first option is Amazon S3 Access Points, which provide a dedicated access endpoint policy for different applications or user groups. This approach enables fine-grained control without modifying the base bucket policy.

The following code is an example access point configuration. This policy grants the RetailAnalyticsRole read-only access (GetObject and ListBucket permissions) to data in both the retail-specific directory and the shared directory, but it restricts access to other business domain directories. The policy is attached to a dedicated S3 access point, allowing users with this role to retrieve only data relevant to retail operations and commonly shared resources:
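A policy of that shape could be generated as in the sketch below. The account ID, Region, access point name, and folder prefixes are hypothetical placeholders; only the role name `RetailAnalyticsRole` and the two actions come from the description above.

```python
import json

# Hypothetical identifiers, used only for illustration.
ACCOUNT_ID = "111122223333"
REGION = "us-east-1"
ACCESS_POINT = "retail-analytics-ap"
ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/RetailAnalyticsRole"
AP_ARN = f"arn:aws:s3:{REGION}:{ACCOUNT_ID}:accesspoint/{ACCESS_POINT}"


def build_access_point_policy(prefixes):
    """Read-only access point policy limited to the given key prefixes."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadObjectsUnderAllowedPrefixes",
                "Effect": "Allow",
                "Principal": {"AWS": ROLE_ARN},
                "Action": "s3:GetObject",
                # Objects behind an access point use the /object/ ARN form.
                "Resource": [f"{AP_ARN}/object/{p}*" for p in prefixes],
            },
            {
                "Sid": "ListAllowedPrefixes",
                "Effect": "Allow",
                "Principal": {"AWS": ROLE_ARN},
                "Action": "s3:ListBucket",
                "Resource": AP_ARN,
                # Listing is restricted to the same prefixes.
                "Condition": {
                    "StringLike": {"s3:prefix": [f"{p}*" for p in prefixes]}
                },
            },
        ],
    }


policy = build_access_point_policy(["retail/", "shared/"])
print(json.dumps(policy, indent=2))
```

Note that an access point policy takes effect only if the underlying bucket policy also permits the request, for example by delegating access control to access points owned by the same account.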

The second option for data sharing is using bucket policies with path-based access control. Bucket policies can implement path-based restrictions to control which user roles can access specific data directories.

The following code is an example bucket policy. This bucket policy implements domain-based access control by granting different permissions based on user roles and data paths. The FinanceUserRole can only access data within the finance and shared directories, and the RetailUserRole can only access data within the retail and shared directories. This pattern enforces data isolation between business domains while facilitating access to common resources. Each role is limited to read-only operations (GetObject and ListBucket) on their authorized directories, which means users can only retrieve data relevant to their business functions.
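A bucket policy along those lines might be assembled as follows. This is a sketch under stated assumptions: the bucket name, account ID, and folder prefixes are hypothetical, while the two role names and the read-only actions come from the description above.

```python
import json

# Hypothetical identifiers, used only for illustration.
ACCOUNT_ID = "111122223333"
BUCKET = "example-enterprise-data-lake"
BUCKET_ARN = f"arn:aws:s3:::{BUCKET}"

# Each role reads its own domain folder plus the shared folder.
ROLE_PREFIXES = {
    "FinanceUserRole": ["finance/", "shared/"],
    "RetailUserRole": ["retail/", "shared/"],
}


def statements_for_role(role_name, prefixes):
    """Two read-only statements (GetObject, ListBucket) for one role."""
    principal = {"AWS": f"arn:aws:iam::{ACCOUNT_ID}:role/{role_name}"}
    return [
        {
            "Sid": f"{role_name}GetObject",
            "Effect": "Allow",
            "Principal": principal,
            "Action": "s3:GetObject",
            "Resource": [f"{BUCKET_ARN}/{p}*" for p in prefixes],
        },
        {
            "Sid": f"{role_name}ListBucket",
            "Effect": "Allow",
            "Principal": principal,
            "Action": "s3:ListBucket",
            "Resource": BUCKET_ARN,
            "Condition": {
                "StringLike": {"s3:prefix": [f"{p}*" for p in prefixes]}
            },
        },
    ]


def build_bucket_policy(role_prefixes):
    """Combine the per-role statements into one bucket policy document."""
    statements = []
    for role_name, prefixes in role_prefixes.items():
        statements.extend(statements_for_role(role_name, prefixes))
    return {"Version": "2012-10-17", "Statement": statements}


bucket_policy = build_bucket_policy(ROLE_PREFIXES)
print(json.dumps(bucket_policy, indent=2))
```

Because every role adds two statements, the serialized size of this document grows with the number of roles and prefixes, which is worth checking against the bucket policy size limit.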

As the number of datasets and use cases grows, you might require more policy space. Bucket policies work as long as the necessary policies fit within the policy size limits of S3 bucket policies (20 KB), AWS Identity and Access Management (IAM) policies (5 KB), and within the number of IAM principals allowed per account. With an increasing number of datasets, access points offer a better alternative because each access point has its own dedicated policy. You can define quite granular access control patterns because you can have thousands of access points per AWS Region per account, with a policy up to 20 KB in size for each access point. Although S3 Access Points increases the amount of policy space available, it requires a mechanism for clients to discover the right access point for the right dataset. To manage scale, S3 Access Grants provides a simplified model to map identities in directories such as Active Directory, or IAM principals, to datasets in Amazon S3 by prefix, bucket, or object. With the simplified access scheme in S3 Access Grants, you can grant read-only, write-only, or read-write access according to Amazon S3 prefix to both IAM principals and directly to users or groups from a corporate directory. As a result, you can manage automated data permissions at scale.
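As a rough sketch of how a grant by prefix could be requested, the following helper builds the parameters for the S3 Control `create_access_grant` call. The account ID, location ID, prefix, and directory group ID are hypothetical placeholders, and the client is passed in (for example, `boto3.client("s3control")`) so the request-building logic can be exercised without AWS credentials.

```python
VALID_PERMISSIONS = {"READ", "WRITE", "READWRITE"}
VALID_GRANTEE_TYPES = {"IAM", "DIRECTORY_USER", "DIRECTORY_GROUP"}


def build_access_grant_request(account_id, location_id, sub_prefix,
                               grantee_type, grantee_id, permission="READ"):
    """Build keyword arguments for s3control.create_access_grant."""
    if permission not in VALID_PERMISSIONS:
        raise ValueError(f"unsupported permission: {permission}")
    if grantee_type not in VALID_GRANTEE_TYPES:
        raise ValueError(f"unsupported grantee type: {grantee_type}")
    return {
        "AccountId": account_id,
        "AccessGrantsLocationId": location_id,
        # Narrows the registered location to one prefix.
        "AccessGrantsLocationConfiguration": {"S3SubPrefix": sub_prefix},
        "Grantee": {
            "GranteeType": grantee_type,
            "GranteeIdentifier": grantee_id,
        },
        "Permission": permission,
    }


def create_access_grant(s3control_client, request):
    """Send the request; s3control_client is e.g. boto3.client('s3control')."""
    return s3control_client.create_access_grant(**request)


# Hypothetical values: a read-only grant on the retail prefix for a
# corporate directory group.
grant_request = build_access_grant_request(
    account_id="111122223333",
    location_id="default",
    sub_prefix="example-enterprise-data-lake/retail/*",
    grantee_type="DIRECTORY_GROUP",
    grantee_id="example-directory-group-id",
    permission="READ",
)
```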

An Amazon Comprehend PII redaction job identifies and redacts (or masks) sensitive data in documents residing in Amazon S3. After redaction, documents are verified for redaction effectiveness using Amazon Macie. Documents flagged by Macie are sent to another bucket for manual review, and cleared documents are moved to a redacted bucket ready for ingestion. For more details, refer to Protect sensitive data in RAG applications with Amazon Comprehend.
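The redaction step might be started as in the following sketch, which builds the parameters for the Amazon Comprehend `start_pii_entities_detection_job` call in redaction mode. The job name, S3 URIs, role ARN, and the chosen entity types are assumptions for illustration; the Macie verification and the bucket routing described above are not shown.

```python
def build_pii_redaction_job(input_uri, output_uri, role_arn,
                            entity_types=("NAME", "EMAIL", "PHONE", "SSN")):
    """Build keyword arguments for comprehend.start_pii_entities_detection_job."""
    return {
        "JobName": "rag-pii-redaction",  # hypothetical job name
        "InputDataConfig": {
            "S3Uri": input_uri,
            "InputFormat": "ONE_DOC_PER_FILE",
        },
        "OutputDataConfig": {"S3Uri": output_uri},
        # ONLY_REDACTION writes redacted copies of the input documents.
        "Mode": "ONLY_REDACTION",
        "RedactionConfig": {
            "PiiEntityTypes": list(entity_types),
            # Replace each detected entity with its type label.
            "MaskMode": "REPLACE_WITH_PII_ENTITY_TYPE",
        },
        "DataAccessRoleArn": role_arn,
        "LanguageCode": "en",
    }


def start_redaction(comprehend_client, job):
    """Start the job; comprehend_client is e.g. boto3.client('comprehend')."""
    return comprehend_client.start_pii_entities_detection_job(**job)["JobId"]


# Hypothetical locations and role.
redaction_job = build_pii_redaction_job(
    input_uri="s3://example-raw-bucket/retail/",
    output_uri="s3://example-staging-bucket/retail/",
    role_arn="arn:aws:iam::111122223333:role/ExampleComprehendDataAccessRole",
)
```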

User-dataset mapping with DynamoDB

To dynamically manage access permissions, you can use DynamoDB to store mapping information between users or roles and datasets. You can automate the mapping from AWS Lake Formation to DynamoDB using CloudTrail and event-driven Lambda invocation. The DynamoDB structure consists of a table named UserDatasetAccess. Its primary key structure is:

- Partition key – UserIdentifier (string) – IAM role Amazon Resource Name (ARN) or user ID

- Sort key – DatasetID (string) – Unique identifier for each dataset

Additional attributes consist of:

- DatasetPath (string) – S3 path to the dataset

- AccessLevel (string) – READ, WRITE, or ADMIN

- Classification (string) – PUBLIC, INTERNAL, CONFIDENTIAL, RESTRICTED

- Domain (string) – Business domain (such as retail or finance)

- ExpirationTime (number) – Optional Time To Live (TTL) for temporary access

The following DynamoDB item represents an access mapping between a user role (RetailAnalyst) and a specific dataset (retail-products). It defines that this role has READ access to product catalog data in the retail domain with an INTERNAL security classification. When the RAG application processes a query, it references this mapping to determine which datasets the querying user can access, and the application only retrieves and uses data appropriate for the user’s permissions level.
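A sketch of such an item, written as a Python dictionary, follows. The attribute names match the table structure described earlier; the account ID, bucket name, path, and expiration timestamp are hypothetical values. The small helper converts the plain dictionary into the typed attribute format that the low-level DynamoDB API expects.

```python
# Hypothetical access mapping for the RetailAnalyst role.
item = {
    "UserIdentifier": "arn:aws:iam::111122223333:role/RetailAnalyst",
    "DatasetID": "retail-products",
    "DatasetPath": "s3://example-enterprise-data-lake/retail/products/",
    "AccessLevel": "READ",
    "Classification": "INTERNAL",
    "Domain": "retail",
    # Optional TTL, expressed as epoch seconds (example value).
    "ExpirationTime": 1767225600,
}


def to_dynamodb_json(plain_item):
    """Encode strings as {"S": ...} and numbers as {"N": "..."}."""
    encoded = {}
    for name, value in plain_item.items():
        if isinstance(value, (int, float)):
            encoded[name] = {"N": str(value)}
        else:
            encoded[name] = {"S": str(value)}
    return encoded


def put_access_mapping(dynamodb_client, plain_item, table_name="UserDatasetAccess"):
    """Write the mapping; dynamodb_client is e.g. boto3.client('dynamodb')."""
    return dynamodb_client.put_item(
        TableName=table_name, Item=to_dynamodb_json(plain_item)
    )
```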

This approach provides a flexible, programmatic way to control which users can access specific datasets, enabling fine-grained permission management for RAG applications.
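At query time, the application needs the list of datasets for the signed-in user. The following is a minimal sketch of that lookup, assuming the `UserDatasetAccess` table described above and a low-level DynamoDB client passed in by the caller (for example, `boto3.client("dynamodb")`). It reads a single page of results only; pagination through `LastEvaluatedKey` is left out for brevity.

```python
def authorized_dataset_ids(dynamodb_client, user_identifier, now_epoch,
                           table_name="UserDatasetAccess"):
    """Return the DatasetID values the user can currently access."""
    response = dynamodb_client.query(
        TableName=table_name,
        KeyConditionExpression="UserIdentifier = :u",
        ExpressionAttributeValues={":u": {"S": user_identifier}},
    )
    dataset_ids = []
    for item in response.get("Items", []):
        expires = item.get("ExpirationTime")
        # TTL deletion is not immediate, so skip items that have
        # already expired but are still present in the table.
        if expires is not None and int(expires["N"]) <= now_epoch:
            continue
        dataset_ids.append(item["DatasetID"]["S"])
    return dataset_ids
```

Passing the current time in as `now_epoch` (for example, `int(time.time())`) keeps the function deterministic and easy to test.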

Amazon Bedrock Knowledge Bases for unstructured data

Amazon Bedrock Knowledge Bases provides a managed solution for organizing, indexing, and retrieving unstructured data to support RAG applications. For our solution, we use this service to create domain-specific knowledge bases. With the metadata filtering feature provided by Amazon Bedrock Knowledge Bases, you can retrieve not only semantically relevant chunks but a well-defined subset of those relevant chunks based on applied metadata filters and associated values. In the next sections, we show how you can set this up.

Configuring knowledge bases with metadata filtering

We organize our knowledge bases to support filtering based on:

- Business domain (such as finance, retail, or supply-chain)

- Security classification (such as public, internal, confidential, or restricted)

- Document type (such as policy, report, or guide)

Each document ingested into our knowledge base includes a standardized metadata structure:
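One possible shape for that metadata, sketched in Python, is shown here. For an S3 data source, Amazon Bedrock Knowledge Bases reads a sidecar file named after the document with a `.metadata.json` suffix, containing a `metadataAttributes` object. The attribute keys used below (`domain`, `classification`, `document_type`, `dataset_id`) and the example document key are assumptions based on the filter dimensions listed above, not a fixed schema.

```python
import json


def build_metadata(domain, classification, document_type, dataset_id):
    """Build the sidecar metadata document for one source file."""
    return {
        "metadataAttributes": {
            "domain": domain,
            "classification": classification,
            "document_type": document_type,
            # Hypothetical key used later to filter by authorized datasets.
            "dataset_id": dataset_id,
        }
    }


def metadata_key(document_key):
    """The sidecar sits next to the document, with .metadata.json appended."""
    return f"{document_key}.metadata.json"


metadata = build_metadata("retail", "internal", "guide", "retail-products")
print(metadata_key("retail/products/supplier-guide.pdf"))
print(json.dumps(metadata, indent=2))
```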

Code examples shown throughout this post are for reference only and highlight key API calls and logic. Additional implementation code is required for production deployments.

Amazon Bedrock Knowledge Bases API integration

To demonstrate how our RAG application will interact with the knowledge base, here’s a Python sample using the AWS SDK:
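A minimal sketch of that interaction might look like the following. It uses the `retrieve` operation of the Bedrock Agent Runtime client with a metadata filter, and it assumes that documents were ingested with hypothetical `dataset_id` and `domain` metadata keys. The client is passed in (for example, `boto3.client("bedrock-agent-runtime")`), and the knowledge base ID is supplied by the caller.

```python
def build_retrieval_filter(dataset_ids, domain=None):
    """Restrict retrieval to the caller's authorized datasets."""
    if not dataset_ids:
        # Fail closed: no authorized datasets means no retrieval at all.
        raise PermissionError("user has no authorized datasets")
    conditions = [{"in": {"key": "dataset_id", "value": list(dataset_ids)}}]
    if domain is not None:
        conditions.append({"equals": {"key": "domain", "value": domain}})
    # andAll needs at least two conditions, so return a single one as-is.
    if len(conditions) == 1:
        return conditions[0]
    return {"andAll": conditions}


def retrieve_for_user(bedrock_agent_runtime, knowledge_base_id, query,
                      dataset_ids, domain=None, top_k=5):
    """Query the knowledge base and return the text of matching chunks."""
    response = bedrock_agent_runtime.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": query},
        retrievalConfiguration={
            "vectorSearchConfiguration": {
                "numberOfResults": top_k,
                "filter": build_retrieval_filter(dataset_ids, domain),
            }
        },
    )
    return [r["content"]["text"] for r in response["retrievalResults"]]
```

Raising an error when the dataset list is empty is a deliberate choice in this sketch: sending the query without a filter would return chunks from every dataset.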

Conclusion

In this post, we’ve explored how to build a secure RAG application using a serverless data lake architecture. The approach we’ve outlined provides several key advantages:

- Security-first design – Fine-grained access controls at scale mean that users only access data they’re authorized for

- Scalability – Serverless components automatically handle varying workloads

- Cost-efficiency – Pay-as-you-go pricing models optimize expenses

- Flexibility – Seamless adaptation to different business domains and use cases

By implementing a modern data strategy with proper governance, security controls, and serverless architecture, organizations can make the most of their data assets for generative AI applications while maintaining security and compliance.

The RAG architecture we’ve described enables contextualized, accurate responses that respect security boundaries, providing a powerful foundation for enterprise AI applications across diverse business domains.

For next steps, consider implementing monitoring and observability to track performance and usage patterns.

For performance and usage monitoring:

- Deploy Amazon CloudWatch metrics and dashboards to track key performance indicators such as query latency, throughput, and error rates

- Set up CloudWatch Logs Insights to analyze usage patterns and identify optimization opportunities

- Implement AWS X-Ray tracing to visualize request flows across your serverless components
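As one small illustration of the first item, query latency could be published as a custom CloudWatch metric. This is a sketch only: the `RagApp` namespace, the `QueryLatency` metric name, and the `Operation` dimension are hypothetical, and the CloudWatch client is passed in (for example, `boto3.client("cloudwatch")`).

```python
import time


def build_latency_metric(operation, latency_ms):
    """Build keyword arguments for cloudwatch.put_metric_data."""
    return {
        "Namespace": "RagApp",  # hypothetical namespace
        "MetricData": [
            {
                "MetricName": "QueryLatency",
                "Dimensions": [{"Name": "Operation", "Value": operation}],
                "Value": latency_ms,
                "Unit": "Milliseconds",
            }
        ],
    }


def timed_call(cloudwatch_client, operation, func, *args, **kwargs):
    """Run func, then publish how long it took, even if it raised."""
    start = time.perf_counter()
    try:
        return func(*args, **kwargs)
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        cloudwatch_client.put_metric_data(
            **build_latency_metric(operation, elapsed_ms)
        )
```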

For security monitoring and defense:

- Enable Amazon GuardDuty to detect potential threats targeting your S3 data lake, Lambda functions, and other application resources

- Implement Amazon Inspector for automated vulnerability assessments of your Lambda functions and container images

- Configure AWS Security Hub to consolidate security findings and measure cloud security posture across your RAG application resources

- Use Amazon Macie for continuous monitoring of S3 data lake contents to detect sensitive data exposures

For authentication and activity auditing:

- Analyze AWS CloudTrail logs to audit API calls across your application stack

- Implement CloudTrail Lake to create SQL-queryable datasets for security investigations

- Enable Amazon Cognito advanced security features to detect suspicious sign-in activities

For data access controls:

- Set up CloudWatch alarms to send alerts about unusual data access patterns

- Configure AWS Config rules to monitor for compliance with access control best practices

- Implement AWS IAM Access Analyzer to identify unintended resource access

Other important considerations include:

- Adding feedback loops to continuously improve retrieval quality

- Exploring multi-Region deployment for improved resilience

- Implementing caching layers to optimize frequently accessed content

- Extending the solution to support structured data assets using AWS Glue and AWS Lake Formation for data transformation and data access

With these foundations in place, your organization will be well-positioned to use generative AI technologies securely and effectively across the enterprise.

About the authors

Venkata Sistla is a Senior Specialist Solutions Architect in the Worldwide team at Amazon Web Services (AWS), with over 12 years of experience in cloud architecture. He specializes in designing and implementing enterprise-scale AI/ML systems across financial services, healthcare, mining and energy, independent software vendors (ISVs), sports, and retail sectors. His expertise lies in helping organizations transform their data challenges into competitive advantages through innovative cloud solutions while mentoring teams and driving technological excellence. He focuses on architecting highly scalable infrastructures that accelerate machine learning initiatives and deliver measurable business outcomes.


Aamna Najmi is a Senior GenAI and Data Specialist in the Worldwide team at Amazon Web Services (AWS). She assists customers across industries and Regions in operationalizing and governing their generative AI systems at scale, ensuring they meet the highest standards of performance, safety, and ethical considerations, bringing a unique perspective of modern data strategies to complement the field of AI. In her spare time, she pursues her passion of experimenting with food and discovering new places.
