The education sector needs efficient, high-quality course material development that can keep pace with rapidly evolving knowledge domains. Faculty invest days to create content and quizzes for topics to be taught in weeks. Increased faculty engagement in manual content creation creates a time deficit for innovation in teaching, inconsistent course material, and a poor experience for both faculty and students.

Generative AI–powered systems can significantly reduce the time and effort faculty spend on course material development while improving educational quality. Automating content creation tasks gives educators more time for interactive teaching and creative classroom strategies.

The solution in this post addresses this challenge by using large language models (LLMs), specifically Anthropic’s Claude 3.5 through Amazon Bedrock, for educational content creation. This AI-powered approach supports the automated generation of structured course outlines and detailed content, reducing development cycles from days to hours while ensuring materials remain current and comprehensive. This technical exploration demonstrates how institutions can use advanced AI capabilities to transform their educational content development process, making it more efficient, scalable, and responsive to modern learning needs.

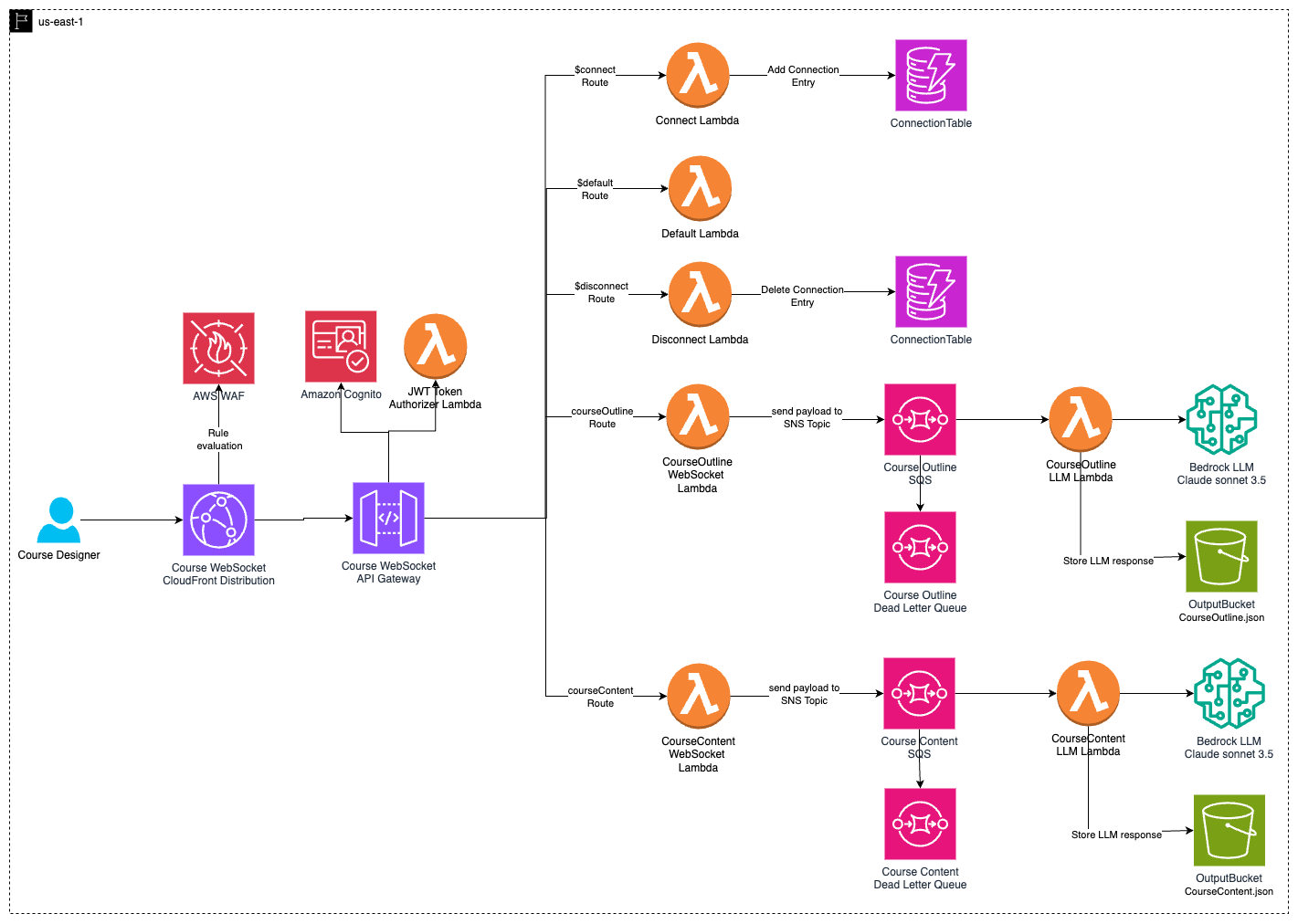

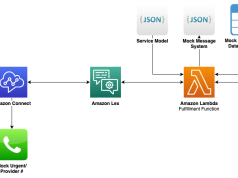

The solution uses Amazon Simple Queue Service (Amazon SQS), AWS Lambda, Amazon Bedrock, Amazon API Gateway WebSocket APIs, Amazon Simple Storage Service (Amazon S3), Amazon CloudFront, Amazon DynamoDB, Amazon Cognito and AWS WAF. The architecture is designed following the AWS Well-Architected Framework, facilitating robustness, scalability, cost-optimization, high performance, and enhanced security.

In this post, we explore each component in detail, along with the technical implementation of the two core modules: course outline generation and course content generation. Course outline generates course structure for a subject with module and submodules by week. Primary and secondary outcomes are generated in a hierarchical structure by week and by semester. Content generation is content generated for the module and submodule generated in content outline. Content generated includes text and video scripts with corresponding multiple-choice questions.

Solution overview

The solution architecture integrates the two core modules through WebSocket APIs. This design is underpinned by using AWS Lambda function for serverless compute, Amazon Bedrock for AI model integration, and Amazon SQS for reliable message queuing.

The system’s security uses multilayered approach, combining Amazon Cognito for user authentication, AWS WAF for threat mitigation, and a Lambda authorizers function for fine-grained access control. To optimize performance and enhance user experience, AWS WAF is deployed to filter out malicious traffic and help protect against common web vulnerabilities. Furthermore, Amazon CloudFront is implemented as a WebSocket distribution layer, to significantly improve content delivery speeds and reduce latency for end users. This comprehensive architecture creates a secure, scalable, and high-performance system for generating and delivering educational content.

WebSocket API and authentication mechanisms

Course WebSocket API manages real-time interactions for course outline and content generation. WebSockets enable streaming AI responses and real-time interactions, reducing latency and improving user responsiveness compared to traditional REST APIs. They also support scalable concurrency, allowing parallel processing of multiple requests without overwhelming system resources. AWS WAF provides rule-based filtering to help protect against web-based threats before traffic reaches API Gateway. Amazon CloudFront enhances performance and security by distributing WebSocket traffic globally. Amazon Cognito and JWT Lambda authorizer function handles authentication, validation of user identity before allowing access.

Each WebSocket implements three primary routes:

- $connect – Triggers a Lambda function to log the

connection_idin DynamoDB. This enables tracking of active connections, targeted messaging, and efficient connection management, supporting real-time communication and scalability across multiple server instances. - $disconnect – Logs the disconnection in DynamoDB to remove the

connection_idrecord from DynamoDB table. This facilitates proper cleanup of inactive connections, helps prevent resource waste, maintains an accurate list of active clients, and helps optimize system performance and resource allocation. - $default – Handles unexpected or invalid traffic.

WebSocket authentication using Amazon Cognito

The WebSocket API integrates Amazon Cognito for authentication and uses a JWT-based Lambda authorizer function for token validation. The authentication flow follows these steps:

- User authentication

- The course designer signs in using Amazon Cognito, which issues a JWT access token upon successful authentication.

- Amazon Cognito supports multiple authentication methods, including username-password login, social identity providers (such as Google or Facebook), and SAML-based federation.

- WebSocket connection request

- When a user attempts to connect to the WebSocket API, the client includes the JWT access token in the WebSocket request headers.

- JWT token validation (Lambda authorizer function)

- The JWT token authorizer Lambda function extracts and verifies the token against the Amazon Cognito public key.

- If the token is valid, the request proceeds. If the token isn’t valid, the connection is rejected.

- Maintaining user sessions

- Upon successful authentication, the $connect route Lambda function stores the

connection_idand user details in DynamoDB, allowing targeted messaging. - When the user disconnects, the $disconnect Lambda function removes the

connection_idto maintain an accurate session record.

- Upon successful authentication, the $connect route Lambda function stores the

The following is a sample AWS CDK code to set up the WebSocket API with Amazon Cognito. AWS CDK is an open source software development framework to define cloud infrastructure in code and provision it through AWS CloudFormation. The following code is written in Python. For more information, refer to Working with the AWS CDK in Python:

Course outline generation

The course outline generation module helps course designers create a structured course outline. For this proof of concept, the default structure spans 4 weeks, with each week containing three main learning outcomes and supporting secondary outcomes, but it can be changed according to each course or institution’s reality. The module follows this workflow:

- The course designer submits a prompt using the course WebSocket (

courseOutlineroute). CourseOutlineWSLambdasends the request to an SQS queue for asynchronous processing.- The SQS queue triggers

CourseOutlineLLMLambda, which invokes Anthropic’s Claude 3.5 Sonnet in Amazon Bedrock to generate the outline. - The response is structured using Pydantic models and returned as JSON.

- The structured outline is stored in an S3

OutputBucket, with a finalized version stored in a portal bucket for faculty review.

The following code sample is a sample payload for the courseOutline route, which can be customized to meet institutional requirements. The fields are defined as follows:

- action – Specifies the operation to be performed (

courseOutline). - is_streaming – Indicates whether the response should be streamed (

yesfor real-time streaming andnofor single output at one time). - s3_input_uri_list – A list of S3 URIs containing reference materials (which can be left empty if not available).

- course_title – The title of the course for which the outline is being generated.

- course_duration – The total number of weeks for the course.

- user_prompt – A structured prompt guiding the AI to generate a detailed course outline based on syllabus information, providing a well-organized weekly learning structure. If using a different LLM, optimize the

user_promptfor that model to achieve the best results.

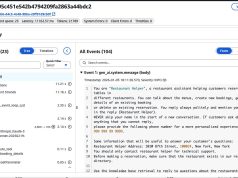

When interacting with the courseOutline route of the WebSocket API, the response follows a structured format that details the course outline and structure. The following is an example of a WebSocket response for a course. This format is designed for straightforward parsing and seamless integration into your applications:

Here’s a snippet of the Lambda function for processing the outline request:

Course content generation

The course content generation module creates detailed week-by-week content based on the course outline. Although the default configuration generates the following for each main learning outcome, these outputs are fully customizable to meet specific course needs and institutional preferences:

- One set of reading materials

- Three video scripts (3 minutes each)

- A quiz with a multiple-choice question for each video

The module follows this workflow:

- The course designer submits learning outcomes using the

courseContentroute. CourseContentWSLambdafunction sends the request to an SQS queue.- The SQS queue triggers

CourseContentLLMLambdafunction, which calls Amazon Bedrock to generate the content. - The generated content is structured and stored in Amazon S3.

The following is a sample payload for the courseContent route, which can be customized to align with institutional requirements. The fields are defined as follows:

- action – Specifies the operation to be performed (

courseContent). - is_streaming – Determines the response mode (

yesfor real-time streaming andnofor a single output at one time). - s3_input_uri_list – An array of S3 URIs containing additional course materials which will be used to generate course content (optional).

- week_number – Indicates the week number for which content is being generated.

- course_title – The title of the course.

- main_learning_outcome – The primary learning objective for the specified week.

- sub_learning_outcome_list – A list of supporting learning outcomes to be covered.

- user_prompt – A structured instruction guiding the LLM to generate week-specific course content, facilitating comprehensive coverage. If switching to a different LLM, optimize the

user_promptfor optimal performance.

When interacting with the courseContent route of the WebSocket API, the response follows a structured format that details the course content. The following is an example of a WebSocket response for a course content. This format is designed for easy parsing and seamless integration into your applications:

Here’s a Lambda function code snippet for content generation:

Prerequisites

To implement the solution provided in this post, you should have the following:

- An active AWS account and familiarity with foundation models (FMs) and Amazon Bedrock. Enable model access for Anthropic’s Claude 3.5v2 Sonnet and Anthropic’s Claude 3.5 Haiku

- The AWS Cloud Development Kit (AWS CDK) already set up. For installation instructions, refer to the AWS CDK workshop.

- When deploying the CDK stack, select a Region where Anthropic’s Claude models in Amazon Bedrock are available. Although this solution uses the US West (Oregon)

us-west-2Region, you can choose a different Region but you need to verify that it supports Anthropic’s Claude models in Amazon Bedrock before proceeding. The Region you use to access the model must match the Region where you deploy your stack.

Set up the solution

When the prerequisite steps are complete, you’re ready to set up the solution:

- Clone the repository:

- Navigate to the project directory:

- Create and activate the virtual environment:

The activation of the virtual environment differs based on the operating system; refer to the AWS CDK workshop for activating in other environments.

- After the virtual environment is activated, you can install the required dependencies:

- Review and modify the

project_config.jsonfile to customize your deployment settings - In your terminal, export your AWS credentials for a role or user in ACCOUNT_ID. The role needs to have all necessary permissions for CDK deployment:

export AWS_REGION=”<region>” # Same region as ACCOUNT_REGION above

export AWS_ACCESS_KEY_ID=”<access-key>” # Set to the access key of your role/user

export AWS_SECRET_ACCESS_KEY=”<secret-key>” # Set to the secret key of your role/user

- If you’re deploying the AWS CDK for the first time, invoke the following command:

- Deploy the stacks:

Note the CloudFront endpoints, WebSocket API endpoints, and Amazon Cognito user pool details from deployment outputs.

- Create a user in the Amazon Cognito user pool using the AWS Management Console or AWS Command Line Interface (AWS CLI). Alternatively, you can use the cognito-user-token-helper repository to quickly create a new Amazon Cognito user and generate JSON Web Tokens (JWTs) for testing.

- Connect to the WebSocket endpoint using wscat.

Scalability and security considerations

The solution is designed with scalability and security as core principles. Because Amazon API Gateway for WebSockets doesn’t inherently support AWS WAF, we’ve integrated Amazon CloudFront as a distribution layer and applied AWS WAF to enhance security.

By using Amazon SQS and AWS Lambda, the system enables asynchronous processing, supports high concurrency, and dynamically scales to handle varying workloads. AWS WAF helps to protects against malicious traffic and common web-based threats. Amazon CloudFront can improve global performance, reduce latency, and provide built-in DDoS protection. Amazon Cognito handles authentication so that only authorized users can access the WebSocket API. AWS IAM policies enforce strict access control to secure resources such as Amazon Bedrock, Amazon S3, AWS Lambda, and Amazon DynamoDB.

Clean up

To avoid incurring future charges on the AWS account, invoke the following command in the terminal to delete the CloudFormation stack provisioned using the AWS CDK:

Conclusion

This innovative solution represents a significant leap forward in educational technology, demonstrating how AWS services can be used in course development. By integrating Amazon Bedrock, AWS Lambda, WebSockets, and a robust suite of AWS services, we’ve built a system that streamlines content creation, enhances real-time interactivity, and facilitates secure, scalable, and high-quality learning experiences.

By developing comprehensive course materials rapidly, course designers can focus more on personalized instruction and student mentoring. AI-assisted generation facilitates high-quality, standardized content across courses. The event-driven architecture scales effortlessly to meet institutional demands, and CloudFront, AWS WAF, and Amazon Cognito support secure and optimized content delivery. Institutions adopting this technology position themselves at the forefront of educational innovation, redefining modern learning environments.

This solution goes beyond simple automation—it means teachers and professors can shift their focus from manual content creation to high-impact teaching and mentoring. By using AWS AI and cloud technologies, institutions can enhance student engagement, optimize content quality, and scale seamlessly.

We invite you to explore how this solution can transform your institution’s approach to course creation and student engagement. To learn more about implementing this system or to discuss custom solutions for your specific needs, contact your AWS account team or an AWS education specialist.

Together, let’s build the future of education on the cloud.

About the authors

Dinesh Mane is a Senior ML Prototype Architect at AWS, specializing in machine learning, generative AI, and MLOps. In his current role, he helps customers address real-world, complex business problems by developing machine learning and generative AI solutions through rapid prototyping.

Dinesh Mane is a Senior ML Prototype Architect at AWS, specializing in machine learning, generative AI, and MLOps. In his current role, he helps customers address real-world, complex business problems by developing machine learning and generative AI solutions through rapid prototyping.

Tasneem Fathima is Senior Solutions Architect at AWS. She supports Higher Education and Research customers in the United Arab Emirates to adopt cloud technologies, improve their time to science, and innovate on AWS.

Tasneem Fathima is Senior Solutions Architect at AWS. She supports Higher Education and Research customers in the United Arab Emirates to adopt cloud technologies, improve their time to science, and innovate on AWS.

Amir Majlesi leads the EMEA prototyping team within AWS Worldwide Specialist Organization. Amir has extensive experiences in helping customers accelerate adoption of cloud technologies, expedite path to production and catalyze a culture of innovation. He enables customer teams to build cloud native applications using agile methodologies, with a focus on emerging technologies such as Generative AI, Machine Learning, Analytics, Serverless and IoT.

Amir Majlesi leads the EMEA prototyping team within AWS Worldwide Specialist Organization. Amir has extensive experiences in helping customers accelerate adoption of cloud technologies, expedite path to production and catalyze a culture of innovation. He enables customer teams to build cloud native applications using agile methodologies, with a focus on emerging technologies such as Generative AI, Machine Learning, Analytics, Serverless and IoT.