Gen AI News Talk

TII Falcon-H1 models now available on Amazon Bedrock Marketplace and Amazon SageMaker JumpStart

This post was co-authored with Jingwei Zuo from TII.

We are excited to announce the availability of the Technology Innovation Institute (TII)’s Falcon-H1 models on Amazon...

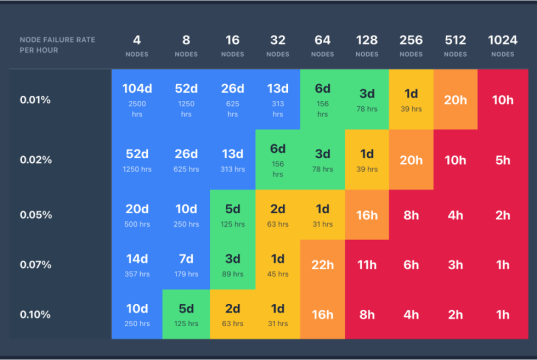

Powering innovation at scale: How AWS is tackling AI infrastructure challenges

As generative AI continues to transform how enterprises operate—and develop net new innovations—the infrastructure demands for training and deploying AI models have grown exponentially....

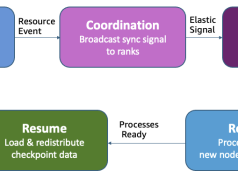

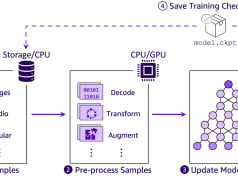

Accelerate your model training with managed tiered checkpointing on Amazon SageMaker HyperPod

As organizations scale their AI infrastructure to support trillion-parameter models, they face a difficult trade-off: reduced training time with lower cost or faster training...

Maximize HyperPod Cluster utilization with HyperPod task governance fine-grained quota allocation

We are excited to announce the general availability of fine-grained compute and memory quota allocation with HyperPod task governance. With this capability, customers can optimize Amazon...

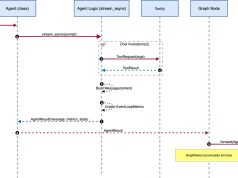

Skai uses Amazon Bedrock Agents to significantly improve customer insights by revolutionizing data access...

This post was written with Lior Heber and Yarden Ron of Skai.

Skai (formerly Kenshoo) is an AI-driven omnichannel advertising and analytics platform designed for...

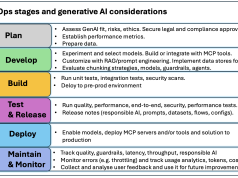

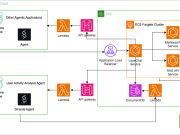

Build and scale adoption of AI agents for education with Strands Agents, Amazon Bedrock...

Basic AI chat isn’t enough for most business applications. Institutions need AI that can pull from their databases, integrate with their existing tools, handle...

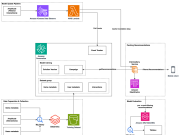

The power of AI in driving personalized product discovery at Snoonu

This post was written with Felipe Monroy, Ana Jaime, and Nikita Gordeev from Snoonu.

Managing a massive product catalog in the ecommerce space has introduced...

Exploring the Real-Time Race Track with Amazon Nova

This post is co-written by Jake Friedman, President + Co-founder of Wildlife.

Amazon Nova is enhancing sports fan engagement through an immersive Formula 1 (F1)-inspired...

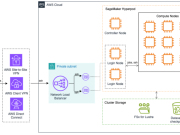

Accelerating HPC and AI research in universities with Amazon SageMaker HyperPod

This post was written with Mohamed Hossam of Brightskies.

Research universities engaged in large-scale AI and high-performance computing (HPC) often face significant infrastructure challenges that...

Build character-consistent storyboards using Amazon Nova in Amazon Bedrock – Part 1

The art of storyboarding stands as the cornerstone of modern content creation, weaving its essential role through filmmaking, animation, advertising, and UX design. Though...