Customers today expect to find products quickly and efficiently through intuitive search functionality. A seamless search journey not only enhances the overall user experience, but also directly impacts key business metrics such as conversion rates, average order value, and customer loyalty. According to a McKinsey study, 78% of consumers are more likely to make repeat purchases from companies that provide personalized experiences. As a result, delivering exceptional search functionality has become a strategic differentiator for modern ecommerce services. With ever-expanding product catalogs and an increasing diversity of brands, harnessing advanced search technologies is essential for success.

Semantic search enables digital commerce providers to deliver more relevant search results by going beyond keyword matching. It uses an embeddings model to create vector embeddings that capture the meaning of the input query. This helps the search be more resilient to phrasing variations and to accept multimodal inputs such as text, image, audio, and video. For example, a user inputs a query containing text and an image of a product they like, and the search engine translates both into vector embeddings using a multimodal embeddings model and retrieves related items from the catalog using embeddings similarities. To learn more about semantic search and how Amazon Prime Video uses it to help customers find their favorite content, see Amazon Prime Video advances search for sports using Amazon OpenSearch Service.

While semantic search provides contextual understanding and flexibility, keyword search remains a crucial component for a comprehensive ecommerce search solution. At its core, keyword search provides the essential baseline functionality of accurately matching user queries to product data and metadata, making sure explicit product names, brands, or attributes can be reliably retrieved. This matching capability is vital, because users often have specific items in mind when initiating a search, and meeting these explicit needs with precision is important to deliver a satisfactory experience.

Hybrid search combines the strengths of keyword search and semantic search, enabling retailers to deliver more accurate and relevant results to their customers. According to an OpenSearch blog post, hybrid search improves result quality by 8–12% compared to keyword search and by 15% compared to natural language search. However, combining keyword search and semantic search presents significant complexity because different query types provide scores on different scales. Using Amazon OpenSearch Service hybrid search, customers can seamlessly integrate these approaches by combining relevance scores from multiple search types into one unified score.

OpenSearch Service is the AWS recommended vector database for Amazon Bedrock. It’s a fully managed service that you can use to deploy, operate, and scale OpenSearch on AWS. OpenSearch is a distributed open-source search and analytics engine composed of a search engine and vector database. OpenSearch Service can help you deploy and operate your search infrastructure with native vector database capabilities delivering as low as single-digit millisecond latencies for searches across billions of vectors, making it ideal for real-time AI applications. To learn more, see Improve search results for AI using Amazon OpenSearch Service as a vector database with Amazon Bedrock.

Multimodal embedding models like Amazon Titan Multimodal Embeddings G1, available through Amazon Bedrock, play a critical role in enabling hybrid search functionality. These models generate embeddings for both text and images by representing them in a shared semantic space. This allows systems to retrieve relevant results across modalities, such as finding images using text queries or combining text with image inputs.

In this post, we walk you through how to build a hybrid search solution using OpenSearch Service powered by multimodal embeddings from the Amazon Titan Multimodal Embeddings G1 model through Amazon Bedrock. This solution demonstrates how you can enable users to submit both text and images as queries to retrieve relevant results from a sample retail image dataset.

Overview of solution

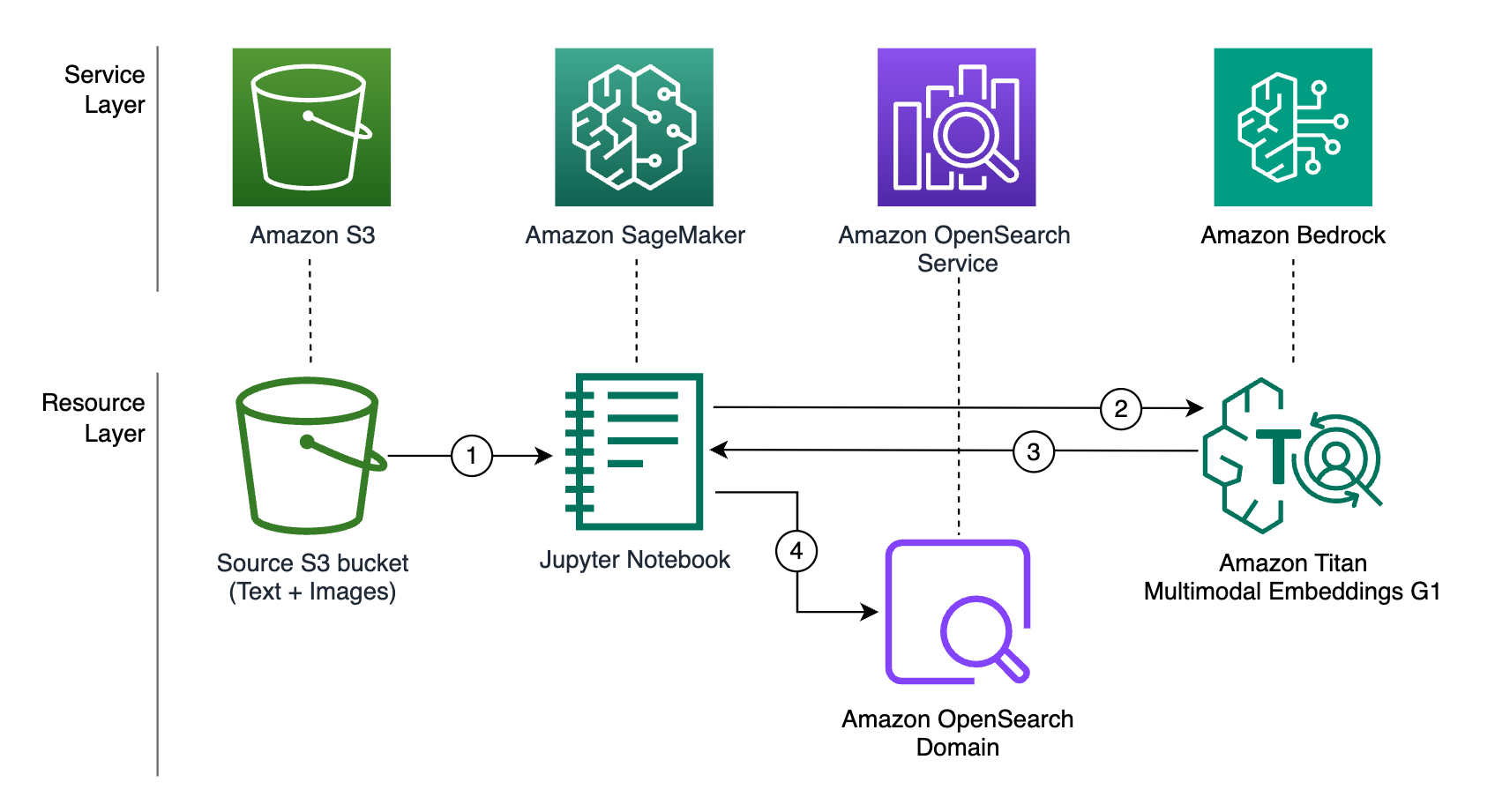

In this post, you will build a solution that you can use to search through a sample image dataset in the retail space, using a multimodal hybrid search system powered by OpenSearch Service. This solution has two key workflows: a data ingestion workflow and a query workflow.

Data ingestion workflow

The data ingestion workflow generates vector embeddings for text, images, and metadata using Amazon Bedrock and the Amazon Titan Multimodal Embeddings G1 model. Then, it stores the vector embeddings, text, and metadata in an OpenSearch Service domain.

In this workflow, shown in the following figure, we use a SageMaker JupyterLab notebook to perform the following actions:

- Read text, images, and metadata from an Amazon Simple Storage Service (Amazon S3) bucket, and encode images in Base64 format.

- Send the text, images, and metadata to Amazon Bedrock using its API to generate embeddings using the Amazon Titan Multimodal Embeddings G1 model.

- The Amazon Bedrock API replies with embeddings to the Jupyter notebook.

- Store both the embeddings and metadata in an OpenSearch Service domain.
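The embedding step in this workflow can be sketched as follows. This is a minimal illustration rather than the notebook's actual code: the function names `build_titan_request` and `embed` are hypothetical, and the example assumes you pass in a Bedrock runtime client (for example, one created with `boto3.client("bedrock-runtime")`) that has access to the model.

```python
import base64
import json

# Model ID for Amazon Titan Multimodal Embeddings G1 (same ID as in the IAM policy)
MODEL_ID = "amazon.titan-embed-image-v1"


def build_titan_request(text=None, image_bytes=None, dimension=1024):
    """Build the JSON request body from text, raw image bytes, or both."""
    if text is None and image_bytes is None:
        raise ValueError("Provide text, an image, or both")
    body = {"embeddingConfig": {"outputEmbeddingLength": dimension}}
    if text is not None:
        body["inputText"] = text
    if image_bytes is not None:
        # The model expects the image as a Base64-encoded string
        body["inputImage"] = base64.b64encode(image_bytes).decode("utf-8")
    return json.dumps(body)


def embed(bedrock_runtime, text=None, image_bytes=None):
    """Invoke the model through the given client and return the embedding."""
    response = bedrock_runtime.invoke_model(
        modelId=MODEL_ID,
        body=build_titan_request(text, image_bytes),
        accept="application/json",
        contentType="application/json",
    )
    return json.loads(response["body"].read())["embedding"]
```

Passing the client in as an argument keeps the request-building logic easy to test without AWS credentials.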

Query workflow

In the query workflow, an OpenSearch search pipeline is used to convert the query input to embeddings using the embeddings model registered with OpenSearch. Then, within the OpenSearch search pipeline results processor, results of semantic search and keyword search are combined using the normalization processor to provide relevant search results to users. Search pipelines take away the heavy lifting of building score results normalization and combination outside your OpenSearch Service domain.

The workflow, shown in the following figure, consists of the following steps:

- The client submits a query input containing text, a Base64 encoded image, or both to OpenSearch Service. Text submitted is used for both semantic and keyword search, and the image is used for semantic search.

- The OpenSearch search pipeline performs a keyword search using the textual input and a neural search using vector embeddings generated by Amazon Bedrock with the Amazon Titan Multimodal Embeddings G1 model.

- The normalization processor within the pipeline scales search results using techniques like min_max and combines keyword and semantic scores using arithmetic_mean.

- Ranked search results are returned to the client.
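To make the normalization and combination step concrete, the following is a simplified sketch of min-max scaling followed by a weighted arithmetic mean. It illustrates the idea only and is not the OpenSearch implementation: the function names and sample scores are made up, and treating a document missing from one result set as a score of 0 is an assumption of this sketch.

```python
def min_max(scores):
    """Rescale a {doc_id: score} mapping to the [0, 1] range."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        # All scores are equal, so there is nothing to rescale
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def combine(keyword, semantic, keyword_weight=0.5):
    """Merge two result sets with a weighted arithmetic mean."""
    kw, sem = min_max(keyword), min_max(semantic)
    weights = (keyword_weight, 1 - keyword_weight)
    combined = {}
    for doc in set(kw) | set(sem):
        # Assumption: a document absent from one result set scores 0 there
        total = weights[0] * kw.get(doc, 0.0) + weights[1] * sem.get(doc, 0.0)
        combined[doc] = total / sum(weights)
    return sorted(combined.items(), key=lambda item: item[1], reverse=True)


# Hypothetical raw scores: BM25-style keyword scores and similarity scores
keyword_scores = {"a": 12.0, "b": 6.0, "c": 3.0}
semantic_scores = {"b": 0.9, "c": 0.8, "d": 0.5}
ranked = combine(keyword_scores, semantic_scores, keyword_weight=0.5)
```

In this example, document b ranks first because it scores reasonably well in both result sets, even though document a has the highest raw keyword score. The raw scores could not be averaged directly because they are on different scales.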

Walkthrough overview

To deploy the solution, complete the following high-level steps:

- Create a connector for Amazon Bedrock in OpenSearch Service.

- Create an OpenSearch search pipeline and enable hybrid search.

- Create an OpenSearch Service index for storing the multimodal embeddings and metadata.

- Ingest sample data to the OpenSearch Service index.

- Create OpenSearch Service query functions to test search functionality.

Prerequisites

For this walkthrough, you should have the following prerequisites:

- An AWS account.

- Amazon Bedrock with Amazon Titan Multimodal Embeddings G1 enabled. For more information, see Access Amazon Bedrock foundation models.

- An OpenSearch Service domain. For instructions, see Getting started with Amazon OpenSearch Service.

- An Amazon SageMaker notebook. For instructions, see Quick setup for Amazon SageMaker.

- Familiarity with AWS Identity and Access Management (IAM), Amazon Elastic Compute Cloud (Amazon EC2), OpenSearch Service, and SageMaker.

- Familiarity with Python programming language.

The code is open source and hosted on GitHub.

Create a connector for Amazon Bedrock in OpenSearch Service

To use OpenSearch Service machine learning (ML) connectors with other AWS services, you need to set up an IAM role allowing access to that service. In this section, we demonstrate the steps to create an IAM role and then create the connector.

Create an IAM role

Complete the following steps to set up an IAM role to delegate Amazon Bedrock permissions to OpenSearch Service:

- Add the following policy to the new role to allow OpenSearch Service to invoke the Amazon Titan Multimodal Embeddings G1 model:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "bedrock:InvokeModel",
      "Resource": "arn:aws:bedrock:region:account-id:foundation-model/amazon.titan-embed-image-v1"
    }
  ]
}

- Modify the role trust policy as follows. You can follow the instructions in IAM role management to edit the trust relationship of the role.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "opensearchservice.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}

Connect an Amazon Bedrock model to OpenSearch

After you create the role, use its Amazon Resource Name (ARN), along with the OpenSearch domain endpoint, to define constants in the SageMaker notebook. Then complete the following steps:

- Register a model group. Note the model group ID returned in the response to register a model in a later step.

- Create a connector, which facilitates registering and deploying external models in OpenSearch. The response will contain the connector ID.

- Register the external model to the model group and deploy the model. In this step, you register and deploy the model at the same time: by setting deploy=true, the registered model is deployed as well.
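The three steps above map to three calls against the OpenSearch ML Commons API. The following sketch only builds the method, path, and body for each call. The helper names are hypothetical, and the connector body is deliberately left as a parameter because its contents (credentials, endpoint URL, request template) come from the notebook and are not reproduced here.

```python
def register_model_group_request(name, description):
    """Step 1: register a model group; the response contains model_group_id."""
    body = {"name": name, "description": description}
    return "POST", "/_plugins/_ml/model_groups/_register", body


def create_connector_request(connector_body):
    """Step 2: create a connector; the response contains connector_id.

    connector_body is supplied by the caller (see the notebook for the
    Amazon Bedrock connector definition).
    """
    return "POST", "/_plugins/_ml/connectors/_create", connector_body


def register_model_request(name, model_group_id, connector_id, deploy=True):
    """Step 3: register the external model, optionally deploying it too."""
    path = "/_plugins/_ml/models/_register"
    if deploy:
        # deploy=true registers and deploys the model in a single call
        path += "?deploy=true"
    body = {
        "name": name,
        "function_name": "remote",  # marks the model as externally hosted
        "model_group_id": model_group_id,
        "connector_id": connector_id,
    }
    return "POST", path, body
```

Each tuple can then be sent to the domain endpoint with an authenticated HTTP client, carrying the ID returned by one call into the next.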

Create an OpenSearch search pipeline and enable hybrid search

A search pipeline runs inside the OpenSearch Service domain and can have three types of processors: search request processors, search response processors, and search phase results processors. For our search pipeline, we use a search phase results processor, which runs between the search phases at the coordinating node level. Specifically, we use the normalization processor, which normalizes and combines the scores from keyword and semantic search. For hybrid search, min_max normalization and arithmetic_mean combination techniques are preferred, but you can also try L2 normalization and geometric_mean or harmonic_mean combination techniques depending on your data and use case.

payload = {
    "phase_results_processors": [
        {
            "normalization-processor": {
                # Rescale each sub-query's scores to a common range
                "normalization": {
                    "technique": "min_max"
                },
                # Merge the normalized scores into one score per document
                "combination": {
                    "technique": "arithmetic_mean",
                    "parameters": {
                        # One weight per sub-query, in the order the sub-queries
                        # appear in the hybrid query (keyword first, then neural)
                        "weights": [
                            OPENSEARCH_KEYWORD_WEIGHT,
                            1 - OPENSEARCH_KEYWORD_WEIGHT
                        ]
                    }
                }
            }
        }
    ]
}

# Create or update the search pipeline
response = requests.put(
    url=f"{OPENSEARCH_ENDPOINT}/_search/pipeline/{OPENSEARCH_SEARCH_PIPELINE_NAME}",
    json=payload,
    headers={"Content-Type": "application/json"},
    auth=open_search_auth
)

Create an OpenSearch Service index for storing the multimodal embeddings and metadata

For this post, we use the Amazon Berkeley Objects Dataset, which is a collection of 147,702 product listings with multilingual metadata and 398,212 unique catalog images. In this example, we only use Shoes listings that are in en_US, as shown in the Prepare listings dataset for Amazon OpenSearch ingestion section of the notebook.

Use the following code to create an OpenSearch index to ingest the sample data:

response = opensearch_client.indices.create(
    index=OPENSEARCH_INDEX_NAME,
    body={
        "settings": {
            # Enable k-NN (vector) search on this index
            "index.knn": True,
            "number_of_shards": 2
        },
        "mappings": {
            "properties": {
                "amazon_titan_multimodal_embeddings": {
                    "type": "knn_vector",
                    # Must match the output length of the embeddings model
                    "dimension": 1024,
                    "method": {
                        "name": "hnsw",
                        "engine": "lucene",
                        "parameters": {}
                    }
                }
            }
        }
    }
)

Ingest sample data to the OpenSearch Service index

In this step, you select the relevant features used for generating embeddings and convert the images to Base64. The combination of a selected feature and a Base64-encoded image is used to generate multimodal embeddings, which are stored in the OpenSearch Service index along with the metadata. Listings are ingested in batches using the OpenSearch bulk operation.
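As a rough illustration of batched ingestion, the following sketch splits documents into batches and serializes each batch into the newline-delimited JSON format that the OpenSearch bulk operation expects. The helper names, the `item_id` field, and the sample documents are hypothetical; the notebook's own ingestion code may differ.

```python
import json


def batched(items, size):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def to_bulk_body(index_name, docs, id_field="item_id"):
    """Serialize documents as bulk NDJSON: an action line, then the document."""
    lines = []
    for doc in docs:
        action = {"index": {"_index": index_name, "_id": doc[id_field]}}
        lines.append(json.dumps(action))
        lines.append(json.dumps(doc))
    # The bulk body must end with a newline
    return "\n".join(lines) + "\n"


# Hypothetical listings with truncated embeddings, for illustration only
listings = [
    {"item_id": "a1", "item_name": "Leather sandal", "embedding": [0.1, 0.2]},
    {"item_id": "b2", "item_name": "Running shoe", "embedding": [0.3, 0.4]},
    {"item_id": "c3", "item_name": "Ankle boot", "embedding": [0.5, 0.6]},
]
bodies = [to_bulk_body("products", batch) for batch in batched(listings, 2)]
```

Each body can be sent to the domain's `_bulk` endpoint. Batching keeps individual requests small, which matters because every document carries a 1,024-dimension vector.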

Create OpenSearch Service query functions to test search functionality

With the sample data ingested, you can run queries against this data to test the hybrid search functionality. To facilitate this process, we created helper functions to perform the queries in the query workflow section of the notebook. In this section, you explore specific parts of the functions that differentiate the search methods.
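One detail such a helper has to handle is that hybrid queries must run through the search pipeline, while plain keyword or semantic queries don't need it. The following is a minimal sketch of one way to do that, using the `search_pipeline` query parameter. The function name and the sample endpoint are hypothetical and not taken from the notebook.

```python
def build_search_request(endpoint, index_name, payload, search_pipeline=None):
    """Return the URL, query parameters, and body for a search request."""
    url = f"{endpoint.rstrip('/')}/{index_name}/_search"
    params = {}
    if search_pipeline:
        # Route this request through the named search pipeline
        params["search_pipeline"] = search_pipeline
    return url, params, payload


# Hypothetical values, for illustration only
url, params, body = build_search_request(
    "https://my-domain.example.com/",
    "products",
    {"query": {"match_all": {}}},
    search_pipeline="hybrid-pipeline",
)
```

The returned values can be passed to an HTTP client, for example `requests.post(url, params=params, json=body, auth=...)`.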

Keyword search

For keyword search, send the following payload to the OpenSearch domain search endpoint:

payload = {
    "query": {
        "multi_match": {
            "query": query_text,
        }
    },
}

Semantic search

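For semantic search, you can send the text and image as part of the payload. The model_id in the request is the ID of the external embeddings model that you connected earlier. OpenSearch invokes the model to convert the text and image to embeddings. The key inside neural (vector_embedding in this payload) names the vector field to search, so it must match the knn_vector field in your index mapping.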

payload = {
    "query": {
        "neural": {
            "vector_embedding": {
                "query_text": query_text,
                "query_image": query_jpg_image,
                "model_id": model_id,
                "k": 5
            }
        }
    }
}

Hybrid search

This method uses the OpenSearch search pipeline you created. The payload contains both the keyword query and the neural (semantic) query.

payload = {
    "query": {
        "hybrid": {
            "queries": [
                {
                    "multi_match": {
                        "query": query_text,
                    }
                },
                {
                    "neural": {
                        "vector_embedding": {
                            "query_text": query_text,
                            "query_image": query_jpg_image,
                            "model_id": model_id,
                            "k": 5
                        }
                    }
                }
            ]
        }
    }
}

Test search methods

To compare the search methods, you can query the index using query_text, which provides specific information about the desired output, and query_jpg_image, which conveys the overall style of the desired output.

query_text = "leather sandals in Petal Blush"

search_image_path = '16/16e48774.jpg'
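The query payloads expect query_jpg_image to be a Base64-encoded string rather than a file path. The following is a minimal sketch of the conversion, assuming the image is available as a local file. The helper name is hypothetical, and the notebook may load the image differently (for example, directly from Amazon S3).

```python
import base64
from pathlib import Path


def load_image_as_base64(image_path):
    """Read an image file and return its contents as a Base64 string."""
    image_bytes = Path(image_path).read_bytes()
    return base64.b64encode(image_bytes).decode("utf-8")
```

With a local copy of the image, you could then set `query_jpg_image = load_image_as_base64(search_image_path)`.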

Keyword search

The following output lists the top three keyword search results. The keyword search successfully located leather sandals in the color Petal Blush, but it didn’t take the desired style into consideration.

Semantic search

Semantic search successfully located leather sandals and considered the desired style. However, the similarity to the provided image took priority over the specific color provided in query_text.

Hybrid search

Hybrid search returned similar results to the semantic search because they use the same embeddings model. However, by combining the output of keyword and semantic searches, the ranking of the Petal Blush sandal that most closely matches query_jpg_image increases, moving it to the top of the results list.

Clean up

After you complete this walkthrough, clean up all the resources you created as part of this post. This is an important step to make sure you don’t incur any unexpected charges. If you used an existing OpenSearch Service domain, the Cleanup section of the notebook provides suggested cleanup actions, including deleting the index, undeploying the model, deleting the model, deleting the model group, and deleting the Amazon Bedrock connector. If you created an OpenSearch Service domain exclusively for this exercise, you can bypass these actions and delete the domain.

Conclusion

In this post, we explained how to implement multimodal hybrid search by combining keyword and semantic search capabilities using Amazon Bedrock and Amazon OpenSearch Service. We showcased a solution that uses Amazon Titan Multimodal Embeddings G1 to generate embeddings for text and images, enabling users to search using both modalities. The hybrid approach combines the strengths of keyword search and semantic search, delivering accurate and relevant results to customers.

We encourage you to test the notebook in your own account and get firsthand experience with hybrid search variations. In addition to the outputs shown in this post, we provide a few variations in the notebook. If you’re interested in using custom embeddings models in Amazon SageMaker AI instead, see Hybrid Search with Amazon OpenSearch Service. If you want a solution that offers semantic search only, see Build a contextual text and image search engine for product recommendations using Amazon Bedrock and Amazon OpenSearch Serverless and Build multimodal search with Amazon OpenSearch Service.

About the Authors

Renan Bertolazzi is an Enterprise Solutions Architect helping customers realize the potential of cloud computing on AWS. In this role, Renan is a technical leader advising executives and engineers on cloud solutions and strategies designed to innovate, simplify, and deliver results.

Birender Pal is a Senior Solutions Architect at AWS, where he works with strategic enterprise customers to design scalable, secure and resilient cloud architectures. He supports digital transformation initiatives with a focus on cloud-native modernization, machine learning, and Generative AI. Outside of work, Birender enjoys experimenting with recipes from around the world.

Sarath Krishnan is a Senior Solutions Architect with Amazon Web Services. He is passionate about enabling enterprise customers on their digital transformation journey. Sarath has extensive experience in architecting highly available, scalable, cost-effective, and resilient applications on the cloud. His area of focus includes DevOps, machine learning, MLOps, and generative AI.
