Large Language Model (LLM) agents have revolutionized how we approach complex, multi-step tasks by combining the reasoning capabilities of foundation models with specialized tools and domain expertise. While single-agent systems using frameworks like ReAct work well for straightforward tasks, real-world challenges often require multiple specialized agents working in coordination. Think about planning a business trip: one agent is needed to research flights based on schedule constraints, another to find accommodations near meeting locations, and a third to coordinate ground transportation—each requiring different tools and domain knowledge. This multi-agent approach introduces a critical architectural challenge: orchestrating the flow of information between agents to ensure reliable, predictable outcomes. Without proper orchestration, agent interactions can become unpredictable, making systems difficult to debug, monitor, and scale in production environments. Agent orchestration addresses this challenge by defining explicit workflows that govern how agents communicate, when they execute, and how their outputs integrate into cohesive solutions. Rather than allowing agents to interact ad hoc, orchestration creates structured pathways that make reasoning transparent and information flow intentional.

Strands Agents is an open-source SDK designed specifically for building orchestrated artificial intelligence (AI) systems. It provides flexible agent abstractions, seamless tool integration, comprehensive observability, and orchestration components like GraphBuilder that enable developers to connect agents into directed workflows with precision and control.

In this post, we explore two powerful orchestration patterns implemented with Strands Agents. Using a common set of travel planning tools, we demonstrate how different orchestration strategies can solve the same problem through distinct reasoning approaches: ReWOO (Reasoning Without Observation), which separates planning, execution, and synthesis into discrete stages, and Reflexion, which implements iterative refinement through structured critique and improvement cycles. These examples will show you how Strands enables precise control over multi-agent workflows, resulting in more reliable, transparent, and maintainable AI systems.

Getting started with Strands Agents

Strands Agents is an open-source framework recently launched by AWS for building production-ready AI agents. It simplifies agent development by abstracting the agent loop into three core components:

- Model Provider: The reasoning engine (like Claude on Amazon Bedrock)

- System Prompt: Instructions that shape the agent’s role and constraints

- Toolbelt: The set of APIs or functions the agent can call

This modular design lets users start with simple single-agent systems and scale up to sophisticated multi-agent architectures. Strands includes built-in support for async operations, session state management, and integrations with multiple providers including Amazon Bedrock, Anthropic, and Mistral. It also integrates seamlessly with AWS services like Lambda, Fargate, and AgentCore.

What makes Strands particularly powerful is its multi-agent orchestration capabilities. Users can compose agents in several ways: use one agent as a tool for another, pass control between agents through handoffs, or coordinate multiple agents working in parallel. The SDK’s GraphBuilder feature lets users connect agents into structured workflows, enabling them to collaborate on complex tasks in a controlled, predictable manner.

For production deployments, Strands provides enterprise-grade observability through OpenTelemetry integration. This provides distributed tracing across an entire agent system, making it easy to debug issues and monitor performance as users scale from prototypes to production workloads.

Fundamentals of Agent Orchestration with Strands

The ReAct pattern is the default approach for most AI agents today. It combines planning, tool invocation, and answer synthesis into a single agent loop. While this works for simple tasks, it creates problems for complex scenarios. The agent might call tools repeatedly without a clear strategy, mix evidence gathering with conclusions, or rush to an answer without verification. These issues become critical in applications requiring structured reasoning, compliance checks, or multi-step validation. This is where orchestration shines.

Instead of one agent doing everything, Strands enables the creation of specialized agents with distinct roles in solving the problem. For example, one agent might plan the approach, another executes the plan, and a third synthesizes the results. Users connect these agents in controlled workflows that match exact requirements. In Strands, orchestration patterns use a graph execution model. Think of it as a flowchart where:

- Each node is a specialized agent

- Edges define how information flows between agents

- The structure makes reasoning steps visible and debuggable

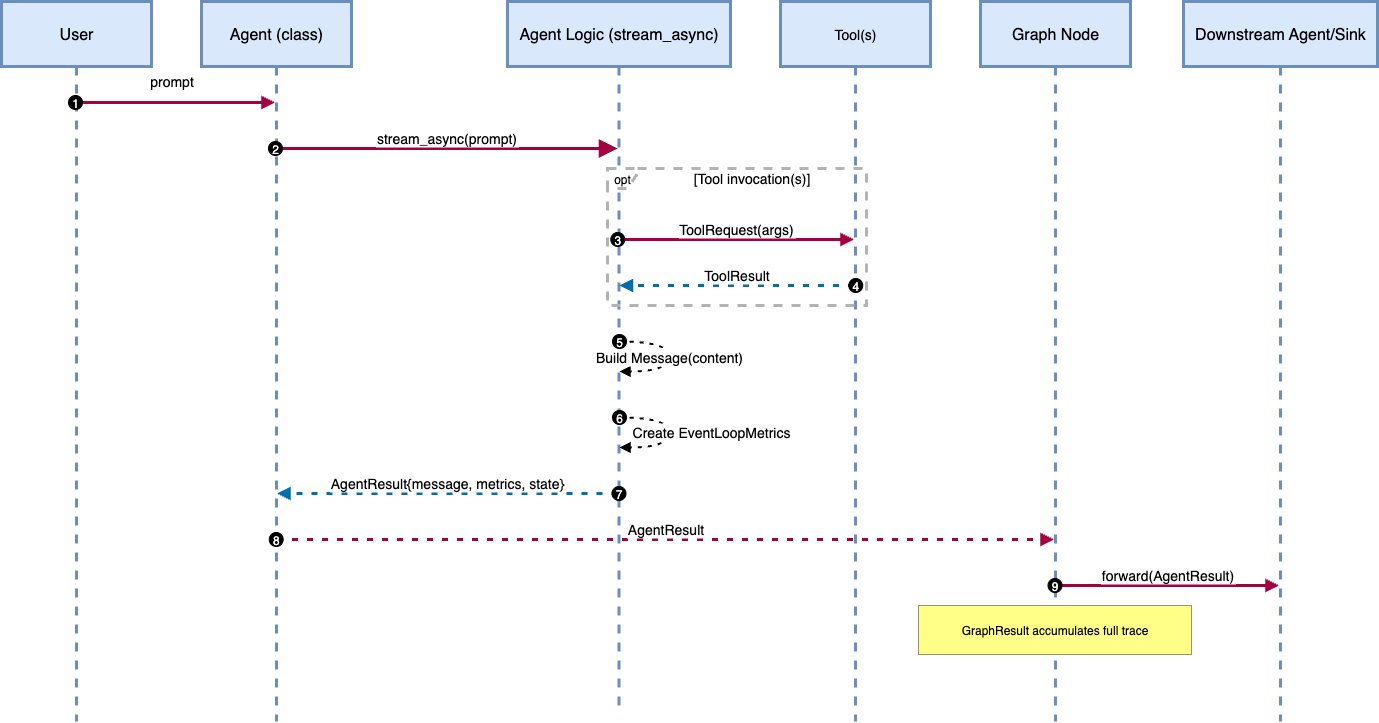

Unlike ReAct’s hidden decision-making, graphs expose every step. Users can trace which agent produced what output, when it became available, and how the next agent used it. This transparency is crucial for building reliable systems. Strands provides four fundamental components for any orchestration pattern:

- Nodes: Agents that encapsulate specific logic or expertise

- Edges: Connections that define execution order and data flow

- AgentResult: Standardized output format from each agent

- GraphResult: Complete execution trace with timing, outputs, and paths taken

The GraphBuilder API lets users wire these components together to define which agents participate, how data flows between them, and where user input enters the system. At runtime, the graph executes deterministically and returns structured results.

Consider a document Q&A pipeline:

The Retriever searches for relevant documents. The Summarizer condenses them. Each agent only sees the data it needs, when it needs it. The flow is explicit, predictable, and easy to debug. This same approach scales to complex patterns. Users can add branches for different reasoning paths, loops for iterative refinement, or parallel execution for exploring multiple strategies. The key is that control is maintained over how information flows through the system.

In the sections that follow, we implement our first pattern: ReWOO, which separates planning from execution to create more reliable agent workflows.

Dataset and default orchestration

Dataset details

We evaluated our system on the τ-Bench airline domain dataset (Yao et al., 2024), which features 300+ flight entries, 500 synthetic user profiles, 2,000+ pre-generated bookings, detailed airline policies, simulated APIs for reservation operations, and 50 structured real-world scenarios. This comprehensive benchmark provides a controlled yet realistic testbed for assessing how agents interpret policies, execute appropriate API calls, and maintain consistency across complex airline operations including upgrades, itinerary changes, and cancellations. While the original dataset presents each task as a multi-turn conversation, we’ve simplified them into single turn queries for this tutorial to better showcase the orchestration patterns.

Architecture at a glance: Default orchestration with ReAct

ReAct (Reasoning + Acting) interleaves two phases inside a single agent loop. The agent reasons in natural language to decide the next step, invokes a tool if needed, observes the tool’s output, and continues reasoning with that observation until it can produce a final answer.

In Strands Agents, the ReAct baseline maps cleanly to a single Agent that owns the τ-Bench airline toolbelt – a list of airline tools(search flights, book/modify/cancel reservations, look up profiles, etc.). The tools are the python functions provided in Tau-Bench dataset, converted to Strands tools using @tool decorator.

- There is no explicit planner or critic; the policy that governs “think → act → observe → think …” lives inside the agent’s prompt and the model’s internal loop. This makes ReAct a natural baseline for tool-augmented systems because it requires minimal orchestration—one agent ‘tool-executor’ with a toolbelt—and it tends to be fast in simple tasks.

Architecture at a glance: ReWOO (Reasoning Without Observations)

ReWOO reframes “how tools are used” rather than “which tools exist.” We keep a single tool-executor for all airline APIs, but we enforce a plan → execute → synthesize separation around it. In Strands, this becomes a small, explicit graph where each node returns a typed result (AgentResult) and the runtime forwards those results downstream in a deterministic way. This leads to governance, observability, and repeatability.

- Planner (plan only). Produces a strictly formatted plan.

- Worker (execute only). Parses the plan, resolves arguments, call tools, and accumulates evidence in a normalized structure. Decoupling execution from planning makes tool use predictable and policy-enforceable (the worker can only run what the plan authorizes).

- Solver (synthesize only). Reads evidence—results from the tools not the tools directly—then composes the final answer. It keeps tool effects and decision-making auditable; avoids “hidden” follow-up calls in the last step.

Constructed with Strands’ GraphBuilder (nodes, edges, entry point), this becomes a deterministic DAG. The runtime hands each downstream node the original task plus the upstream node’s output—captured in AgentResult.

Planner: plan-only agent with a strict grammar

The planner generates a declarative program describing tool usage, not an answer. The following are the important features to design an effective planner prompt:

- Enumerate the allowed set of tool names with arguments

- Few-shot examples to demonstrate the LLM how to plan to answer a given user query.

- Enforce the output shape. We used this:

The plan is returned as an AgentResult. A strict plan is audit-ready and minimizes ambiguity. It also enables static checks (e.g., “only these tools are allowed; one per step”) before anything runs.

Example of a plan created by planner agent for user query:

“My user id is mia_li_3668. I want to fly from New York to Seattle on May 20 (one way). I do not want to fly before 11am EST. I want to fly in economy. I prefer direct flights but one stopover is also fine. If there are multiple options, I prefer the one with the lowest price. I have 3 baggages. I do not want insurance. I want to use my two certificates to pay. If only one certificate can be used, I prefer using the larger one, and pay the rest with my 7447 card.”

Worker: deterministic executor with argument and loop resolution

The worker executes only what the plan authorizes; argument resolution is data-driven. This makes behavior reproducible across runs and model versions.The worker treats the plan as an executable spec.

- Unified plan parser: It parses both regular steps and REPEAT blocks, sorts by evidence ID, and executes them in order.It parses both regular steps and REPEAT blocks, sorts by evidence ID, and executes them in order.

- Evidence ledger : Every step produces a structured record (

#E{id}with description + results). Errors are captured as evidence instead of failing silently.step_evidence[f’#E{eid}’] = { - Context-aware dynamic argument resolution: Build a context from (a) the original task and (b) previous N evidences. Fill placeholders (e.g., airport codes, reservation IDs) from that context—no brittle regex on raw strings. This can be done in couple of different ways. One way is to use a LLM to infer the argument values from the built context. The second method is to use a regex matching to resolve argument values.

- Dynamic tool dispatch with special cases : Tools are invoked directly using

getattr.

Example of an executed step:

Solver: Builds the final answer and presents to the user

Solver combines execution evidence from Worker with the original user query to generate the final response. It receives the structured evidence dictionary and synthesizes it into a natural language answer. The solver never calls tools. It does the following:

- Evidence parsing – Reads the original task and the worker’s evidence , from worker agent node.

- Plan reconstruction — normalizes evidence into a compact, ordered “plan + evidence” text block.

- Final answer generation – Uses LLM with an appropriate prompt to produce the final answer, explicitly addressing constraints and trade-offs.

Separating synthesis from execution yields clear decision logs and stable latency. It also makes it easy to swap synthesis prompts or models without touching planning/execution logic.

Architecture at a glance: Reflexion (Self-Critiquing)

Reflexion is an orchestration pattern where an agent generates a candidate answer and a critique of that answer, then uses the critique to revise the answer in a bounded loop. The goal isn’t to “try again” blindly, but to target revisions based on explicit, machine-parsable feedback (e.g., violated constraints, missing checks, weak rationale). In other words, Reflexion turns model feedback into a structured control signal that governs one or more additional passes, stopping as soon as the answer meets the stated criteria.

Reflexion wraps the existing flight tool-executor with a deliberate draft → critique → (optional) revision loop. Instead of accepting the first output, the system generates a candidate answer, evaluates it against explicit criteria, and only revises when the critique says it should. The motivation is that this method would give higher answer quality. The Reflexion graph has 2 nodes built with GraphBuilder.

- Draft (plan only). Produces an initial answer and initial reflection.

- Revisor (execute only). Loops between improving the query, revising and reflecting on the answer.

Although the orchestration is modeled as a DAG, the revisor node encapsulates up to three revision cycles, invoking tools as needed. Each node returns an AgentResult; the runtime forwards the upstream result to the downstream node and records the full trace in GraphResult.

Draft: Generates initial answer and critique

The draft node uses the same airline tool-executor as used by the other patterns to produce an initial answer. Immediately afterward, it runs a focused “reflection” pass by invoking LLM with a reflection prompt that flags gaps (violated constraints, missing checks, weak rationale) and outputs a compact, labeled payload the revisor can parse deterministically:

Formatted payload after revision:**Answer**: …**Self-Reflection**: …**Needs-Revision**: True|False**User-Query**: …

Revisor: Loops through revision and generation phase

The revisor reads the draft payload, parses the labels (Answer, Self-Reflection, Needs-Revision, User-Query), and decides whether revision is warranted. If so, it improves the original user query using the critique (e.g., “limit to departures ≥ 11:00, ≤ 1 stop, min layover 70m”) and re-invokes the tool-executor to produce a revised answer. It then reflects again using the same labels. This cycle is bounded (e.g., up to 3 passes) and stops as soon as the critique returns Needs Revision:False. The query is improved by a LLM using a specially designed prompt .

Results: Responses from different orchestration patterns

In this section, we look at some examples from the dataset and how each orchestration pattern behaves.

Example 1:

“User Query: I am Lucas Brown (user id is lucas_brown_4047). I need to change the date of my flight reservation EUJUY6 and move it out 2 days due to a family emergency.“

Winner: ReWOO (28s) — policy-aligned refusal without unsafe changes

Summary

-

- ReAct (17s): Fast but incorrect—claims date change + charge on a Basic Economy fare.

- ReWOO (28s): Correct—blocks modification; points to cancel/rebook path.

- Reflexion (60s): Policy-incorrect—acknowledges Basic Economy yet says it can proceed with the change; self-evaluates as “ACCEPT” instead of catching the violation.

Example 2:

User Query: “My user id is mohamed_silva_9265. I want to know the sum of my gift card balances and sum of my certificate balances… Then I want to change my recent reservation to the cheapest business round trip without changing the dates… If basic economy cannot be changed, cancel and book a new one… Use certificates, then gift cards, then Mastercard; tell me how much my Mastercard will be charged.”

Winner: Reflexion (116s) — follows the user’s pre-authorized “cancel → rebook” path, preserves dates, gives totals, and computes the exact Mastercard remainder.

Summary

- ReAct (67s): Detailed search and payment plan but changes a date (May 29) and mutates before a clean confirm; conflicts with user constraint.

- ReWOO (43s): Strong plan, correctly identifies Basic Economy and totals, suggests cancel→ rebook; pricing inconsistent for full return and no final Mastercard figure.

- Reflexion (116s): End-to-end: totals → constraint check → cheapest RT on same dates → cancel (authorized) → compute Mastercard = $1,286. Slowest, but most aligned with the user’s exact instructions.

Example3:

User Query: “My user id is james_taylor_7043. I want to change my upcoming one-stop flight from LAS to IAH to a nonstop flight. My reservation ID is 1N99U6. I also want to remove my checked bag and want the agent to refund me for the same.”

Winner: Reflexion (27s) — offers valid nonstop options and correctly denies bag-removal refund per policy, without premature changes.

Summary

- ReAct (9s): Safe but under-specified—no options surfaced; proposes an action that violates policy.

- ReWOO (25s): Over-eager mutation—updates reservation with two flights and issues refund pre-choice; unnecessary transfer for baggage.

- Reflexion (27s): Policy-aligned and user-centric—presents concrete nonstop choices, explains no bag refund, and waits for selection.

Example 4:

User Query: “I am Anya Garcia (ID: anya_garcia_5901). I booked a flight (3RK2T9) and I want to change the passenger name from Mei Lee to Mei Garcia. Please make this change.“

Winner: ReAct (8s) — correct, minimal path: shows precise update preview and awaits a single yes/no.

Summary

- ReAct (8s): Correct preview → one-tap confirm; fastest and faithful to user intent.

- ReWOO (14s): Good decomposition (identity check + update call) but inconsistent evidence (passenger remained “Lee” in

#E4). - Reflexion (40s): Over-thinks a straightforward edit; no change executed.

When to use which pattern

- ReAct (fast, linear “do the obvious”)

- Use when: A simple, unambiguous update or lookup with 1–2 tool calls and no trade-offs (e.g., rename, toggle, fetch → reply).

- Strength: Lowest latency; minimal planning, however latency might increase if it overcalls tools.

- Watch out: Can skip policy/eligibility checks and mutate unsafely if you’re not careful.

- ReWOO (plan → execute → synthesize with governance)

- Use when: You need ordered dependencies and policy gates before any mutation (e.g., verify fare class, then search, then update).

- Strength: Transparent dataflow; auditable Graph/Agent results; safer by design.

- Watch out (arguments):

- If not using an LLM, argument parsing/validation must be meticulous (types/enums/required).

- If using an LLM for arguments resolution, pass rich context (schemas + examples) to bind correctly—adds latency but improves reliability on complex params.

- Reflexion (analyze options, then act)

- Use when: Multi-constraint decisions, trade-offs, or policy nuances require comparing options (cheapest itinerary under payment rules, etc.).

- Strength: Better at reasoning over alternatives and producing justified choices.

- Watch out: Slower; can over-ask on trivial edits unless reflection is capped.

Architecture at a glance: Hybrid Orchestration — ReWOO-Guided ReAct

Taking into account the pros/cons of ReWOO (governance and auditability), ReAct (speed and flexibility), and Reflexion (quality via critique), we use a hybrid that takes ReWOO’s plan discipline and ReAct’s within-step agility. A ReWOO Planner first emits a strict, step-indexed program (#E1…#En) that names the tools and their order. Execution then switches to a plan-guided ReAct loop that runs inside each step: the agent thinks → validates arguments from prior evidence → calls the authorized tool → observes and (if needed) does one light refinement pass. This preserves global guarantees (no new tools, no reordering, policy gates before mutations) while keeping local flexibility for argument binding and micro-decisions. In Strands, this hybrid maps to a two-node graph:

Planner (ReWOO): Generates the step program only (no tool calls in this node). Output is a typed plan artifact with #E-steps (e.g., get balances → fetch most-recent reservation → search options → compare costs → conditionally mutate).

Plan-Guided ReAct Worker: Consumes the plan and the user task; for each #E step it performs a local ReAct loop but never reorders steps or calls tools not in the plan. It validates arguments, applies policy gates (e.g., Basic Economy ⇒ cancel→ rebook), and synthesizes the final answer. Both planner and executor use the same τ-Bench airline toolbelt (search/book/modify/cancel, user/reservation lookups, math, etc.), exposed as Strands tools.

Local ReAct loop (per step #Ek, bounded):

Compared to vanilla ReAct, the plan provides governance and idempotence—the agent can’t wander or mutate early. Compared to pure ReWOO, the in-step loop handles real-world messiness (argument binding, minor retries) without re-planning. Unlike Reflexion, it avoids multi-pass critique overhead on straightforward tasks while still producing an auditable trace (plan + per-step evidence). In practice, we see it shine on multi-step requests that need ordered checks (e.g., totals → fare rules → search → cancel/rebook → payment split) but benefit from small, local reasoning inside each tool call.

Conclusion

In this post, we showed how custom orchestration on Amazon Strands helps users move beyond a single, monolithic agent and engineer explicit control over reasoning, tool use, and information flow. Using the same τ-Bench airline toolkit, we compared three patterns—ReAct, ReWOO, and Reflexion—under real constraints and observed distinct trade-offs in latency, cost, and answer quality. ReAct remains the lowest-overhead path for simple lookups and single-field updates. ReWOO is the right default when correctness depends on good planning and ordered dependencies: users can stage policy gates before mutations, resolve arguments with richer context, and keep a typed evidence trail for audit. Reflexion adds self-critique to handle multi-constraint choices and payment/itinerary trade-offs, at the cost of extra deliberation. Strands’ graph execution model provides typed handoffs, execution traces, and enforceable tool contracts so users can tune these patterns per use case—tight loops for CRUD, plan→ execute→ synthesize for governed updates, reflect→ revise for option analysis—while bounding side effects and model drift.

To build production agents, treat orchestration as the control plane: pick the pattern that matches your dependency structure and risk profile, then instrument it. Visit this GitHub repo for end-to-end examples, prompts, and runnable graphs.

About the authors

Baishali Chaudhury is an Applied Scientist at the Generative AI Innovation Center at AWS, where she focuses on advancing Generative AI solutions for real-world applications. She has a strong background in computer vision, machine learning, and AI for healthcare. Baishali holds a PhD in Computer Science from University of South Florida and PostDoc from Moffitt Cancer Centre.

Baishali Chaudhury is an Applied Scientist at the Generative AI Innovation Center at AWS, where she focuses on advancing Generative AI solutions for real-world applications. She has a strong background in computer vision, machine learning, and AI for healthcare. Baishali holds a PhD in Computer Science from University of South Florida and PostDoc from Moffitt Cancer Centre.

Rahul Ghosh is an Applied Scientist at Amazon’s Generative AI Innovation Center, where he works with AWS customers across different verticals to expedite their use of Generative AI. Rahul holds a Ph.D. in Computer Science from the University of Minnesota.

Rahul Ghosh is an Applied Scientist at Amazon’s Generative AI Innovation Center, where he works with AWS customers across different verticals to expedite their use of Generative AI. Rahul holds a Ph.D. in Computer Science from the University of Minnesota.

Isaac Privitera is a Principal Data Scientist with the AWS Generative AI Innovation Center, where he develops bespoke generative AI-based solutions to address customers’ business problems. His primary focus lies in building responsible AI systems, using techniques such as RAG, multi-agent systems, and model fine-tuning. When not immersed in the world of AI, Isaac can be found on the golf course, enjoying a football game, or hiking trails with his loyal canine companion, Barry.

Isaac Privitera is a Principal Data Scientist with the AWS Generative AI Innovation Center, where he develops bespoke generative AI-based solutions to address customers’ business problems. His primary focus lies in building responsible AI systems, using techniques such as RAG, multi-agent systems, and model fine-tuning. When not immersed in the world of AI, Isaac can be found on the golf course, enjoying a football game, or hiking trails with his loyal canine companion, Barry.