Picture this: Your enterprise has just deployed its first generative AI application. The initial results are promising, but as you plan to scale across departments, critical questions emerge. How will you enforce consistent security, prevent model bias, and maintain control as AI applications multiply?

It turns out you’re not alone. A McKinsey survey spanning 750+ leaders across 38 countries reveals both challenges and opportunities when building a governance strategy. While organizations are committing significant resources—most planning to invest over $1 million in responsible AI—implementation hurdles persist. Knowledge gaps represent the primary barrier for over 50% of respondents, with 40% citing regulatory uncertainty.

Yet companies with established responsible AI programs report substantial benefits: 42% see improved business efficiency, while 34% experience increased consumer trust. These results point to why robust risk management is fundamental to realizing AI’s full potential.

Responsible AI: A non-negotiable from day one

At the AWS Generative AI Innovation Center, we’ve observed that organizations achieving the strongest results embed governance into their DNA from the start. This aligns with the AWS commitment to responsible AI development, evidenced by our recent launch of the AWS Well-Architected Responsible AI Lens, a comprehensive framework for implementing responsible practices throughout the development lifecycle.

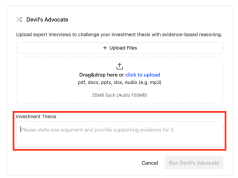

The Innovation Center has consistently applied these principles by embracing a responsible by design philosophy, carefully scoping use cases, and following science-backed guidance. This approach led to our AI Risk Intelligence (AIRI) solution, which transforms these best practices into actionable, automated governance controls—making responsible AI implementation both attainable and scalable.

Four tips for responsible and secure generative AI deployments

Drawing from our experience helping more than one thousand organizations across industries and geographies, here are key strategies for integrating robust governance and security controls into the development, review, and deployment of AI applications through an automated and seamless process.

1 – Adopt a governance-by-design mindset

At the Innovation Center, we work daily with organizations at the forefront of generative and agentic AI adoption. We’ve observed a consistent pattern: while the promise of generative AI captivates business leaders, they often struggle to chart a path toward responsible and secure implementation. The organizations achieving the most impressive results establish a governance-by-design mindset from the start—treating AI risk management and responsible AI considerations as foundational elements rather than compliance checkboxes. This approach transforms governance from a perceived barrier into a strategic advantage for faster innovation while maintaining appropriate controls. By embedding governance into the development process itself, these organizations can scale their AI initiatives more confidently and securely.

2 – Align technology, business, and governance

The primary mission of the Innovation Center is helping customers develop and deploy AI solutions to meet business needs, while leveraging the most optimal AWS services. However, technical exploration must go hand-in-hand with governance planning. Think of it like conducting an orchestra—you wouldn’t coordinate a symphony without understanding how each instrument works and how they harmonize together. Similarly, effective AI governance requires a deep understanding of the underlying technology before implementing controls. We help organizations establish clear connections between technology capabilities, business objectives, and governance requirements from the start, making sure these three elements work in concert.

3 – Embed security as the governance gateway

After establishing a governance-by-design mindset and aligning business, technology, and governance objectives, the next crucial step is implementation. We’ve found that security serves as the most effective entry point for operationalizing comprehensive AI governance. Security not only provides vital protection but also supports responsible innovation by building trust into the foundation of AI systems. The approach used by the Innovation Center emphasizes security-by-design throughout the implementation journey, from basic infrastructure protection to sophisticated threat detection in complex workflows.

To support this approach, we help customers leverage capabilities like the AWS Security Agent, which automates security validation across the development lifecycle. This frontier agent conducts customized security reviews and penetration testing based on centrally defined standards, helping organizations scale their security expertise to match development velocity.

This security-first approach anchors a broader set of governance controls. The AWS Responsible AI framework unites fairness, explainability, privacy and security, safety, controllability, veracity and robustness, governance, and transparency into a cohesive approach. As AI systems integrate deeper into business processes and autonomous decision-making, automating these controls while maintaining rigorous oversight becomes crucial for scaling successfully.

4 – Automate governance at enterprise scale

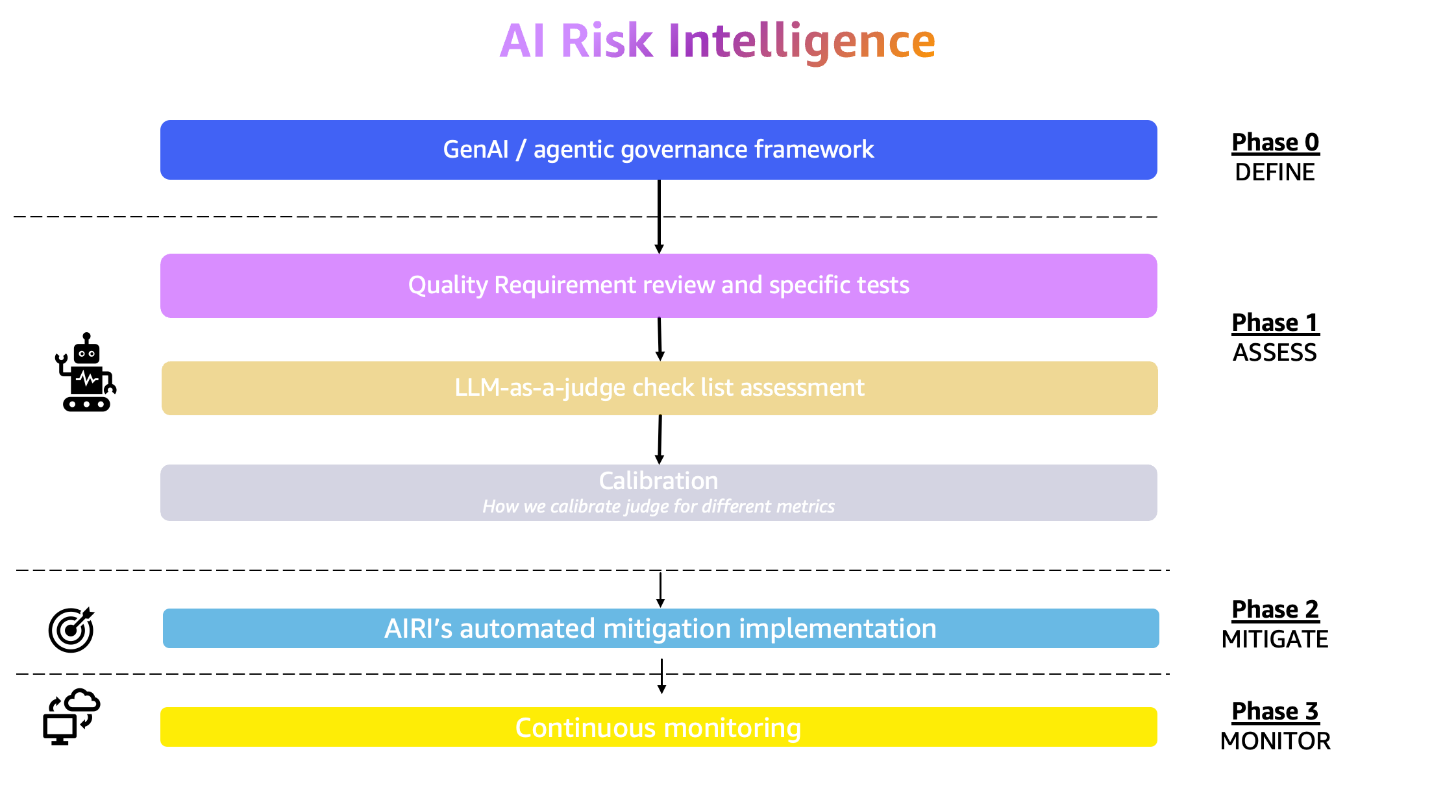

With the foundational elements in place—mindset, alignment, and security controls—organizations need a way to systematically scale their governance efforts. This is where the AIRI solution comes in. Rather than creating new processes, it operationalizes the principles and controls we’ve discussed through automation, in a phased approach.

The solution’s architecture integrates seamlessly with existing workflows through a three-step process: user input, automated assessment, and actionable insights. It analyzes everything from source code to system documentation, using advanced techniques like automated document processing and LLM-based evaluations to conduct comprehensive risk assessments. Most importantly, it performs dynamic testing of generative AI systems, checking for semantic consistency and potential vulnerabilities while adapting to each organization’s specific requirements and industry standards.

From theory to practice

The true measure of effective AI governance is how it evolves with an organization while maintaining rigorous standards at scale. When implemented successfully, automated governance enables teams to focus on innovation, confident that their AI systems operate within appropriate guardrails. A compelling example comes from our collaboration with Ryanair, Europe’s largest airline group. As they scale towards 300 million passengers by 2034, Ryanair needed responsible AI governance for their cabin crew application, which provides frontline staff with crucial operational information. Using Amazon Bedrock, the Innovation Center conducted an AI-powered evaluation. This established transparent, data-driven risk management where risks were previously difficult to quantify—creating a model for responsible AI governance that Ryanair can now expand across their AI portfolio.

This implementation demonstrates the broader impact of systematic AI governance. Organizations using this framework consistently report accelerated paths to production, reduced manual work, and enhanced risk management capabilities. Most importantly, they’ve achieved strong cross-functional alignment, from technology to legal to security teams—all working from clear, measurable objectives.

A foundation for innovation

Responsible AI governance isn’t a constraint—it’s a catalyst. By embedding governance into the fabric of AI development, organizations can innovate with confidence, knowing they have the controls to scale securely and responsibly. The example above demonstrates how automated governance transforms theoretical frameworks into practical solutions that drive business value while maintaining trust.

Learn more about the AWS Generative AI Innovation Center and how we’re helping organizations of different sizes implement responsible AI to complement their business objectives.

About the Authors

Segolene Dessertine-Panhard is the global tech lead for Responsible AI and AI governance initiatives at the AWS Generative AI Innovation Center. In this role, she supports AWS customers in scaling their generative AI strategies by implementing robust governance processes and effective AI and cybersecurity risk management systems, leveraging AWS capabilities and state-of-the-art scientific models. Prior to joining AWS in 2018, she was a full-time professor of Finance at New York University’s Tandon School of Engineering. She also served for several years as an independent consultant in financial disputes and regulatory investigations. She holds a Ph.D. from Paris Sorbonne University.

Segolene Dessertine-Panhard is the global tech lead for Responsible AI and AI governance initiatives at the AWS Generative AI Innovation Center. In this role, she supports AWS customers in scaling their generative AI strategies by implementing robust governance processes and effective AI and cybersecurity risk management systems, leveraging AWS capabilities and state-of-the-art scientific models. Prior to joining AWS in 2018, she was a full-time professor of Finance at New York University’s Tandon School of Engineering. She also served for several years as an independent consultant in financial disputes and regulatory investigations. She holds a Ph.D. from Paris Sorbonne University.

Sri Elaprolu serves as Director of the AWS Generative AI Innovation Center, where he leverages nearly three decades of technology leadership experience to drive artificial intelligence and machine learning innovation. In this role, he leads a global team of machine learning scientists and engineers who develop and deploy advanced generative and agentic AI solutions for enterprise and government organizations facing complex business challenges. Throughout his nearly 13-year tenure at AWS, Sri has held progressively senior positions, including leadership of ML science teams that partnered with high-profile organizations such as the NFL, Cerner, and NASA. These collaborations enabled AWS customers to harness AI and ML technologies for transformative business and operational outcomes. Prior to joining AWS, he spent 14 years at Northrop Grumman, where he successfully managed product development and software engineering teams. Sri holds a Master’s degree in Engineering Science and an MBA with a concentration in general management, providing him with both the technical depth and business acumen essential for his current leadership role.

Sri Elaprolu serves as Director of the AWS Generative AI Innovation Center, where he leverages nearly three decades of technology leadership experience to drive artificial intelligence and machine learning innovation. In this role, he leads a global team of machine learning scientists and engineers who develop and deploy advanced generative and agentic AI solutions for enterprise and government organizations facing complex business challenges. Throughout his nearly 13-year tenure at AWS, Sri has held progressively senior positions, including leadership of ML science teams that partnered with high-profile organizations such as the NFL, Cerner, and NASA. These collaborations enabled AWS customers to harness AI and ML technologies for transformative business and operational outcomes. Prior to joining AWS, he spent 14 years at Northrop Grumman, where he successfully managed product development and software engineering teams. Sri holds a Master’s degree in Engineering Science and an MBA with a concentration in general management, providing him with both the technical depth and business acumen essential for his current leadership role.

Randi Larson connects AI innovation with executive strategy for the AWS Generative AI Innovation Center, shaping how organizations understand and translate technical breakthroughs into business value. She hosts the Innovation Center’s podcast series and combines strategic storytelling with data-driven insight through global keynotes and executive interviews on AI transformation. Before Amazon, Randi refined her analytical precision as a Bloomberg journalist and consultant to economic institutions, think tanks, and family offices on financial technology initiatives. Randi holds an MBA from Duke University’s Fuqua School of Business and a B.S. in Journalism and Spanish from Boston University.

Randi Larson connects AI innovation with executive strategy for the AWS Generative AI Innovation Center, shaping how organizations understand and translate technical breakthroughs into business value. She hosts the Innovation Center’s podcast series and combines strategic storytelling with data-driven insight through global keynotes and executive interviews on AI transformation. Before Amazon, Randi refined her analytical precision as a Bloomberg journalist and consultant to economic institutions, think tanks, and family offices on financial technology initiatives. Randi holds an MBA from Duke University’s Fuqua School of Business and a B.S. in Journalism and Spanish from Boston University.