Businesses are increasingly seeking domain-adapted and specialized foundation models (FMs) to meet specific needs in areas such as document summarization, industry-specific adaptations, and technical code generation and advisory. The growing use of generative AI models has made tailored experiences available with minimal technical expertise, and organizations are using these powerful models to drive innovation and enhance their services across domains ranging from natural language processing (NLP) to content generation.

However, using generative AI models in enterprise environments presents unique challenges. Out-of-the-box models often lack the knowledge required for specific domains or organizational terminology. To address this, businesses are turning to custom fine-tuned models, also known as domain-specific large language models (LLMs), which are tailored to perform specialized tasks within specific domains or micro-domains. Organizations are fine-tuning generative AI models for domains such as finance, sales, marketing, travel, IT, human resources (HR), procurement, healthcare and life sciences, and customer service. Independent software vendors (ISVs) are also building secure, managed, multi-tenant generative AI platforms.

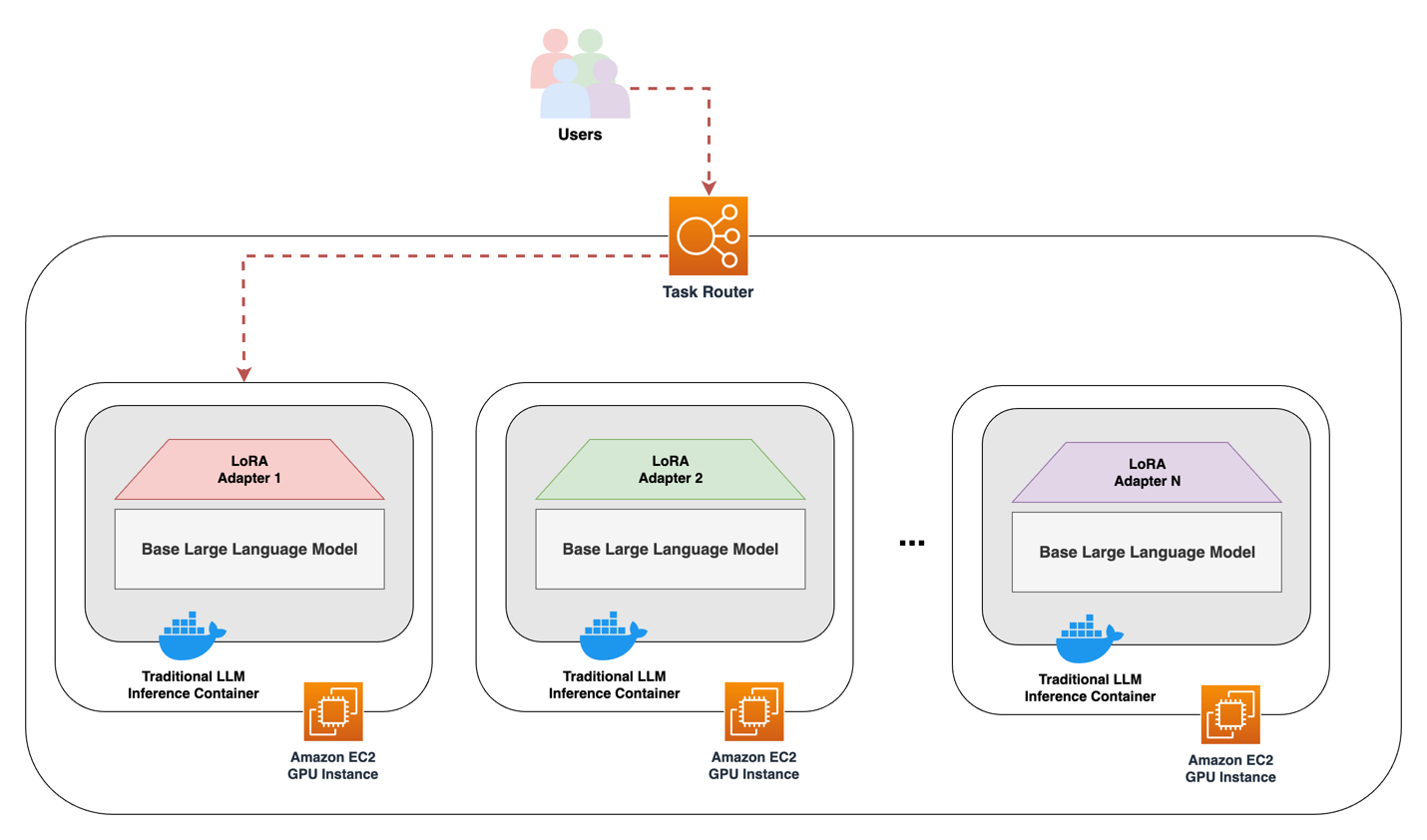

As the demand for personalized and specialized AI solutions grows, businesses face the challenge of efficiently managing and serving a multitude of fine-tuned models across diverse use cases and customer segments. From résumé parsing and job skill matching to domain-specific email generation and natural language understanding, companies often grapple with managing hundreds of fine-tuned models tailored to specific needs. Concerns over scalability and cost-effectiveness compound this challenge. Because high-performing FMs are large and have demanding hardware requirements, traditional model serving approaches can become unwieldy and resource-intensive, leading to increased infrastructure costs, operational overhead, and potential performance bottlenecks. The following diagram represents a traditional approach to serving multiple LLMs.

Serving fine-tuned LLMs this way can be prohibitively expensive because of the hardware requirements and the cost of hosting separate instances for different tasks.

In this post, we explore how Low-Rank Adaptation (LoRA) can be used to address these challenges effectively. Specifically, we discuss using LoRA serving with LoRA eXchange (LoRAX) and Amazon Elastic Compute Cloud (Amazon EC2) GPU instances, allowing organizations to efficiently manage and serve their growing portfolio of fine-tuned models, optimize costs, and provide seamless performance for their customers.

LoRA is a technique for efficiently adapting large pre-trained language models to new tasks or domains by introducing small trainable weight matrices, called adapters, within the linear layers of the pre-trained model. This approach enables efficient adaptation with significantly fewer trainable parameters than full model fine-tuning. Although LoRA allows for efficient adaptation, typical hosting of fine-tuned models merges the adapter weights into the base model weights, so organizations with multiple fine-tuned variants normally must host each variant on a separate instance. Because the resulting adapters are small compared to the base model, this traditional approach of hosting a full merged copy of the model for every variant is inefficient in terms of both resources and cost.
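The following sketch makes the parameter savings concrete. It compares a frozen weight matrix W (d × k) with a LoRA update BA, where B is d × r, A is r × k, and the rank r is small. It also checks that applying the adapter separately, Wx + B(Ax), gives the same result as the merged weight (W + BA)x. That equivalence is why an unmerged adapter can be attached to a shared base model at inference time.

This is a minimal pure-Python illustration. The shapes, the rank, and the random values are assumptions chosen for the example, and the usual LoRA scaling factor (alpha / r) is omitted for brevity.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    inner = len(b)
    cols = len(b[0])
    return [
        [sum(a[i][t] * b[t][j] for t in range(inner)) for j in range(cols)]
        for i in range(len(a))
    ]


def matadd(a, b):
    """Element-wise sum of two equally shaped matrices."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def lora_param_counts(d, k, r):
    """Trainable parameters: full fine-tuning of W versus a rank-r LoRA adapter."""
    full = d * k        # every entry of W is trainable
    lora = r * (d + k)  # entries of B (d x r) plus A (r x k)
    return full, lora


# Small illustrative layer (assumed sizes): W is d x k, the adapter has rank r.
d, k, r = 6, 5, 2
rng = random.Random(0)
W = random_matrix(d, k, rng)  # frozen base weight
B = random_matrix(d, r, rng)  # trainable LoRA factor
A = random_matrix(r, k, rng)  # trainable LoRA factor
x = random_matrix(k, 1, rng)  # input as a k x 1 column vector

# Unmerged: base output plus adapter output, computed separately.
unmerged = matadd(matmul(W, x), matmul(B, matmul(A, x)))
# Merged: fold the low-rank update into the weight first.
merged = matmul(matadd(W, matmul(B, A)), x)

max_diff = max(abs(u[0] - m[0]) for u, m in zip(unmerged, merged))
print(f"max difference between merged and unmerged outputs: {max_diff:.2e}")

# A 4096 x 4096 projection (the hidden size of Llama 2 7B) with rank 8:
full, lora = lora_param_counts(4096, 4096, 8)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

For the 4096 × 4096 case, the rank-8 adapter trains 65,536 parameters instead of 16,777,216, about 0.39% of the layer.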

LoRAX, an open source tool, addresses this with weight-swapping mechanisms that serve multiple variants of a base FM at inference time. LoRAX removes the need to manually attach and detach adapters on the pre-trained FM when you swap between fine-tuned models for different domain or instruction use cases.

With LoRAX, you can fine-tune a base FM for a variety of tasks, including SQL query generation, industry domain adaptations, entity extraction, and instruction responses. You can then host the different variants on a single EC2 instance instead of a fleet of model endpoints, saving costs with little to no impact on performance.

Why LoRAX for LoRA deployment on AWS?

The surge in popularity of fine-tuning LLMs has given rise to multiple inference container methods for deploying LoRA adapters on AWS. Two prominent approaches among our customers are LoRAX and vLLM.

vLLM offers rapid inference speeds and high-performance capabilities. This makes it well suited for applications that demand high serving throughput at low cost, especially when running multiple fine-tuned models that share the same base model. You can run vLLM inference containers using Amazon SageMaker, as demonstrated in Efficient and cost-effective multi-tenant LoRA serving with Amazon SageMaker in the AWS Machine Learning Blog. However, at the time of writing, the complexity of vLLM limits how easily you can implement custom integrations for applications, and vLLM has limited quantization support.

For those seeking to build applications with strong community support and custom integrations, LoRAX presents an alternative. LoRAX is built on Hugging Face's Text Generation Inference (TGI) container, which is optimized for memory and resource efficiency when working with transformer-based models. LoRAX also supports quantization methods such as Activation-aware Weight Quantization (AWQ) and Half-Quadratic Quantization (HQQ).

Solution overview

The LoRAX inference container can be deployed on a single EC2 G6 instance, and models and adapters can be loaded from Amazon Simple Storage Service (Amazon S3) or Hugging Face. The following diagram shows the solution architecture.

Prerequisites

For this guide, you need access to the following prerequisites:

- An AWS account

- Proper permissions to deploy EC2 G6 instances. LoRAX is built to use NVIDIA CUDA, and the G6 family is the most cost-efficient EC2 instance family with the more recent NVIDIA CUDA accelerators. At the time of this writing, g6.xlarge is the most cost-efficient instance type for the purposes of this tutorial. Make sure that any required quota increases are active before deployment.

- (Optional) A Jupyter notebook within Amazon SageMaker Studio or SageMaker Notebook Instances. After your requested quotas are applied to your account, you can use the default Studio Python 3 (Data Science) image with an ml.t3.medium instance to run the optional notebook code snippets. For the full list of available kernels, refer to available Amazon SageMaker kernels.

Walkthrough

This post walks you through creating an EC2 instance, downloading and deploying the container image, and hosting a pre-trained language model and custom adapters from Amazon S3. Follow the prerequisite checklist to make sure that you can properly implement this solution.

Configure server details

In this section, we show how to configure and create an EC2 instance to host the LLM. This guide uses the EC2 G6 instance class, and we deploy a 15 GB Llama 2 7B model. We recommend about 1.5 times as much GPU memory as the model size so that inference runs quickly. GPU memory specifications can be found at Amazon ECS task definitions for GPU workloads.

You have the option to quantize the model. Quantizing a language model reduces the numerical precision of the model weights to a format of your choosing, which shrinks the memory they occupy. For example, the LLM we use is Meta's Llama 2 7B, which stores its weights in fp16 (16-bit floating point) by default. Converting the weights to int8 or int4 (8- or 4-bit integers) shrinks their memory footprint to about 50% and 25% of the fp16 size, respectively. In this guide, we use the default fp16 representation of Meta's Llama 2 7B, so we require an instance type with at least 22 GB of GPU memory, or VRAM.
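The following sketch checks the sizing guidance above with simple arithmetic. It estimates weight memory from a parameter count and the bytes per parameter at each precision. It then applies the roughly 1.5x headroom suggested earlier for adapters and the KV cache.

The 7-billion parameter count and the 1.5 factor are assumptions taken from the text. Real usage also depends on sequence length, batch size, and framework overhead, so treat the results as rough estimates.

```python
# Bytes needed to store one parameter at each precision mentioned in the text.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_size_gb(num_params, precision):
    """Approximate size of the model weights in GB (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def required_vram_gb(weights_gb, headroom=1.5):
    """Apply the ~1.5x rule of thumb for adapters, KV cache, and runtime overhead."""
    return weights_gb * headroom


# Assumption: Llama 2 7B has about 7 billion parameters.
for precision in ("fp16", "int8", "int4"):
    size = weight_size_gb(7e9, precision)
    print(f"{precision}: weights ~{size:.1f} GB, suggested VRAM ~{required_vram_gb(size):.1f} GB")

# Starting instead from the ~15 GB model size quoted in this post:
print(f"15 GB model: suggested VRAM ~{required_vram_gb(15):.1f} GB")
```

Starting from the 15 GB figure gives about 22.5 GB, in line with the roughly 22 GB minimum above. The g6.xlarge instance used in this guide has a single NVIDIA L4 GPU with 24 GB of memory. The parameter-based fp16 estimate comes out a little lower than 15 GB, so treat both numbers as approximate.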

Depending on the language model specifications, we need to adjust the amount of Amazon Elastic Block Store (Amazon EBS) storage to properly store the base model and adapter weights.

To set up your inference server, follow these steps:

- On the Amazon EC2 console, choose Launch instances, as shown in the following screenshot.

- For Name, enter LoRAX - Inference Server.

- To open AWS CloudShell, on the bottom left of the AWS Management Console choose CloudShell, as shown in the following screenshot.

- Paste the following command into CloudShell and copy the resulting text, as shown in the screenshot that follows. This is the Amazon Machine Image (AMI) ID you will use.

- In the Application and OS Images (Amazon Machine Image) search bar, enter ami-0d2047d61ff42e139 and press Enter on your keyboard.

- In Selected AMI, enter the AMI ID that you got from the CloudShell command. In Community AMIs, search for the Deep Learning OSS Nvidia Driver AMI GPU PyTorch 2.5.1 (Ubuntu 22.04) AMI.

- Choose Select, as shown in the following screenshot.

- Specify the Instance type as g6.xlarge. Depending on the size of the model, you can increase the size of the instance to accommodate your model. For information on GPU memory per instance type, visit Amazon ECS task definitions for GPU workloads.

- (Optional) Under Key pair (login), create a new key pair or select an existing key pair if you want to use one to connect to the instance using Secure Shell (SSH).

- In Network settings, choose Edit, as shown in the following screenshot.

- Leave default settings for VPC, Subnet, and Auto-assign public IP.

- Under Firewall (security groups), for Security group name, enter Inference Server Security Group.

- For Description, enter Security Group for Inference Server.

- Under Inbound Security Group Rules, edit Security group rule 1 to limit SSH traffic to your IP address by changing Source type to My IP.

- Choose Add security group rule.

- Configure Security group rule 2 by changing Type to All ICMP-IPv4 and Source Type to My IP. This helps make sure the server is reachable only from your IP address and not by bad actors.

- Under Configure storage, set Root volume size to 128 GiB to allow enough space for storing the base model and adapter weights. For larger models and more adapters, you might need to increase this value. The model card available with most open source models details the size of the model weights and other usage information. We suggest 128 GiB as a starting size for two reasons: downloading multiple adapters along with the model weights adds up quickly, and the operating system, downloaded drivers and dependencies, and project files also need space. Adjust up or down from there. After setting the storage size, expand the Advanced details section.

- Under IAM instance profile, either select or create an IAM instance profile that has S3 read access enabled.

- Choose Launch instance.

- When the instance finishes launching, select either SSH or Instance connect to connect to your instance and enter the following commands:

Install container and launch server

The server is now properly configured to load and run the serving software.

Enter the following commands to download and deploy the LoRAX Docker container image. For more information, refer to Run container with base LLM. Specify a model from Hugging Face or the storage volume and load the model for inference. Replace the parameters in the commands to suit your requirements (for example, <huggingface-access-token>).

Adding the -d flag as shown runs the download and installation process in the background. It can take up to 30 minutes for the server to be fully configured. You can use the docker ps and docker logs <container-name> commands to view the progress of the Docker container and see when it finishes setting up. Running docker logs <container-name> --follow continues streaming new output from the container for continuous monitoring.
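If you prefer to script the wait instead of watching docker logs, the following sketch polls the server until it responds. It makes two assumptions you should confirm against your docker run command and the LoRAX documentation:

- The container's HTTP port is published as 8080 on the instance.
- The server exposes a /health route. LoRAX builds on TGI, which provides one.

The probe function is injectable, so the retry logic can be exercised without a running server.

```python
import time
import urllib.request

# Assumption: the container port is published on localhost:8080 (match your docker run -p mapping).
HEALTH_URL = "http://127.0.0.1:8080/health"


def http_ok(url, timeout=5.0):
    """Return True if GET url answers with HTTP 200, False on connection or HTTP errors."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:  # URLError, HTTPError, and socket timeouts are all OSError subclasses
        return False


def wait_until_ready(probe, timeout_s=1800.0, interval_s=15.0,
                     sleep=time.sleep, clock=time.monotonic):
    """Call probe() every interval_s seconds until it returns True or timeout_s elapses.

    Returns the number of attempts made; raises TimeoutError if the server never answers.
    """
    deadline = clock() + timeout_s
    attempts = 0
    while True:
        attempts += 1
        if probe():
            return attempts
        if clock() >= deadline:
            raise TimeoutError(f"server not ready after {attempts} attempts")
        sleep(interval_s)


# Usage on the instance (blocks for up to 30 minutes, matching the setup time above):
# wait_until_ready(lambda: http_ok(HEALTH_URL))
```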

Test server and adapters

Because the container runs as a background process (the -d flag), you can send requests to the server from the same shell. When you specify the model-id as a Hugging Face model ID, LoRAX loads the model into memory directly from Hugging Face.

This isn’t recommended for production because relying on Hugging Face introduces yet another point of failure in case the model or adapter is unavailable. It’s recommended that models be stored locally either in Amazon S3, Amazon EBS, or Amazon Elastic File System (Amazon EFS) for consistent deployments. Later in this post, we discuss a way to load models and adapters from Amazon S3 as you go.

LoRAX also can pull adapter files from Hugging Face at runtime. You can use this capability by adding adapter_id and adapter_source within the body of the request. The first time a new adapter is requested, it can take some time to load into the server, but requests afterwards will load from memory.

- Enter the following command to prompt the base model:

- Enter the following command to prompt the base model with the specified adapter:
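The same two requests can be sketched in Python with only the standard library. The payload follows the LoRAX /generate schema, where adapter_id and adapter_source go inside parameters. Use adapter_source "hub" for Hugging Face; LoRAX also accepts other sources, such as "s3", so check the exact values in the LoRAX documentation.

The URL, prompt, and adapter ID shown here are hypothetical placeholders. Substitute your own values.

```python
import json
import urllib.request

# Assumption: LoRAX is reachable on localhost:8080 (match your docker run -p mapping).
GENERATE_URL = "http://127.0.0.1:8080/generate"


def build_payload(prompt, max_new_tokens=64, adapter_id=None, adapter_source="hub"):
    """Build a /generate request body; leave adapter_id as None to query the base model."""
    parameters = {"max_new_tokens": max_new_tokens}
    if adapter_id is not None:
        parameters["adapter_id"] = adapter_id
        parameters["adapter_source"] = adapter_source
    return {"inputs": prompt, "parameters": parameters}


def generate(payload, url=GENERATE_URL, timeout=120.0):
    """POST the payload as JSON and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


PROMPT = "Explain in one sentence what a LoRA adapter is."
# Base model only:
base_request = build_payload(PROMPT)
# Same prompt routed through a Hugging Face Hub adapter (hypothetical repository name):
adapter_request = build_payload(
    PROMPT, adapter_id="your-org/your-lora-adapter", adapter_source="hub"
)
# On the instance: print(generate(adapter_request)["generated_text"])
```

Keeping payload construction separate from the HTTP call makes it easy to reuse the same request builder for the Amazon S3 adapters described later.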

[Optional] Create custom adapters with SageMaker training and PEFT

Typical fine-tuning jobs for LLMs merge the adapter weights with the original base model, but using software such as Hugging Face’s PEFT library allows for fine-tuning with adapter separation.

Follow the steps outlined in this AWS Machine Learning blog post to fine-tune Meta’s Llama 2 and get the separated LoRA adapter in Amazon S3.
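Before pointing LoRAX at an adapter in Amazon S3, it can help to check that the prefix contains what PEFT writes when it saves an adapter. That means an adapter_config.json file plus the adapter weights: adapter_model.safetensors in recent PEFT versions, or adapter_model.bin in older ones.

The following sketch lists a prefix with boto3 and checks for those files. The bucket and prefix names are hypothetical. The check itself is a plain function, so it can run without AWS access.

```python
REQUIRED_CONFIG = "adapter_config.json"
WEIGHT_FILES = ("adapter_model.safetensors", "adapter_model.bin")


def missing_adapter_files(keys, prefix):
    """Return what is missing from an adapter folder, given the S3 object keys under it."""
    names = {key[len(prefix):].lstrip("/") for key in keys if key.startswith(prefix)}
    problems = []
    if REQUIRED_CONFIG not in names:
        problems.append(REQUIRED_CONFIG)
    if not any(name in names for name in WEIGHT_FILES):
        problems.append(" or ".join(WEIGHT_FILES))
    return problems


def list_keys(bucket, prefix):
    """List every object key under an S3 prefix (requires boto3 and S3 read access)."""
    import boto3  # imported here so the check above works without boto3 installed

    paginator = boto3.client("s3").get_paginator("list_objects_v2")
    keys = []
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
    return keys


# Example with a hypothetical bucket and prefix:
# prefix = "adapters/sql-gen/"
# print(missing_adapter_files(list_keys("my-adapter-bucket", prefix), prefix))
```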

[Optional] Use adapters from Amazon S3

LoRAX can pull adapter files from Amazon S3 at runtime. You can use this capability by adding adapter_id and adapter_source within the body of the request. The first time a new adapter is requested, it can take some time to load into the server, but requests afterwards will load from server memory. This is the optimal method when running LoRAX in production environments compared to importing from Hugging Face because it doesn’t involve runtime dependencies.
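The load-once, serve-from-memory behavior described above can be pictured as a cache keyed by adapter. The following toy model uses a least-recently-used (LRU) policy with a fixed number of slots.

It is a hypothetical illustration of the idea, not LoRAX's actual adapter scheduler. The loader function and adapter names are placeholders.

```python
from collections import OrderedDict


class AdapterCache:
    """Toy LRU cache: loads an adapter on first use and keeps up to `capacity` in memory."""

    def __init__(self, loader, capacity=4):
        self.loader = loader        # hypothetical function that fetches adapter weights
        self.capacity = capacity
        self.slots = OrderedDict()  # adapter_id -> loaded weights, least recently used first
        self.loads = 0              # how many times weights had to be fetched from storage

    def get(self, adapter_id):
        if adapter_id in self.slots:
            self.slots.move_to_end(adapter_id)  # fast path: mark as most recently used
            return self.slots[adapter_id]
        weights = self.loader(adapter_id)       # slow path: first request for this adapter
        self.loads += 1
        self.slots[adapter_id] = weights
        if len(self.slots) > self.capacity:
            self.slots.popitem(last=False)      # evict the least recently used adapter
        return weights


cache = AdapterCache(loader=lambda adapter_id: f"weights for {adapter_id}", capacity=2)
for adapter in ["sql-gen", "sql-gen", "summarize", "entity-extract", "sql-gen"]:
    cache.get(adapter)
```

In this trace, the repeated sql-gen request is served from memory. Loading a third adapter evicts the least recently used one, so the final sql-gen request has to reload it.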

[Optional] Use custom models from Amazon S3

LoRAX can also load custom language models from Amazon S3. If LoRAX supports the model architecture (see the LoRAX documentation), you can specify a bucket name to pull the weights from, as shown in the following code example. Refer to the previous optional section on separating adapter weights from base model weights to customize your own language model.

Reliable deployments using Amazon S3 for model and adapter storage

Storing models and adapters in Amazon S3 offers a more dependable solution for consistent deployments compared to relying on third-party services such as Hugging Face. By managing your own storage, you can implement robust protocols so your models and adapters remain accessible when needed. Additionally, you can use this approach to maintain version control and isolate your assets from external sources, which is crucial for regulatory compliance and governance.

For even greater flexibility, you can use shared file systems such as Amazon EFS or Amazon FSx for Lustre. You can use these services to mount the same models and adapters across multiple instances, giving seamless access in environments with auto scaling. All instances, whether the fleet is scaling up or down, have uninterrupted access to the necessary resources, which improves the overall reliability and scalability of your deployments.

Cost comparison and advisory on scaling

Using the LoRAX inference containers on EC2 instances means that you can drastically reduce the cost of hosting multiple fine-tuned versions of a language model, because all adapters are kept in memory and swapped dynamically at runtime. Because LLM adapters are typically a fraction of the size of the base model, you can scale your infrastructure according to overall server usage rather than the utilization of individual variants. Adapter size depends on the implementation, such as the LoRA rank and the number of layers the adapter targets, and on the complexity of the task the adapter is trained for. LoRA adapters are usually much smaller than the base model, but some can approach its size.

In the preceding example, the adapters produced by training were about 5 MB each.

Although the storage needed depends on the specific model architecture, you can dynamically swap among up to thousands of fine-tuned variants on a single instance with little to no change in inference speed. We recommend instances with about 1.5 times as much GPU memory as the combined size of the model and adapters. This accounts for the model, adapter, and KV cache (attention cache) storage in VRAM. For GPU memory specifications, refer to Amazon ECS task definitions for GPU workloads.

Depending on the chosen base model and the number of fine-tuned adapters, you can train and deploy hundreds or thousands of customized language models that share the same base model, using LoRAX to dynamically swap out adapters. For example, suppose you have five fine-tuned variants that would otherwise each need their own instance of the same type. With adapter swapping, you can save 80% on hosting costs, because all five adapters are served from one instance.
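The 80% figure follows from consolidation arithmetic. If each fine-tuned variant would otherwise need its own instance of the same type, serving N variants from one instance cuts the instance count from N to 1, saving 1 - 1/N of the hosting cost.

The following sketch also estimates how much GPU memory a set of small adapters adds. It uses the roughly 5 MB adapter size and 15 GB base model mentioned in this post. It assumes identical instance types and ignores load-driven scaling, so treat it as a rough estimate.

```python
def consolidation_savings(num_variants, instances_after=1):
    """Fraction of hosting cost saved by serving num_variants from instances_after instances.

    Assumes each variant would otherwise run on its own instance of the same type.
    """
    if num_variants < 1 or instances_after < 1:
        raise ValueError("need at least one variant and one instance")
    return 1 - instances_after / num_variants


def vram_needed_gb(base_gb, adapter_mb, num_adapters, headroom=1.5):
    """Rough VRAM estimate: base weights plus all adapters resident, times a headroom factor."""
    total_gb = base_gb + adapter_mb * num_adapters / 1000  # 1 GB = 1000 MB here
    return total_gb * headroom


print(f"5 variants on 1 instance: {consolidation_savings(5):.0%} saved")
print(f"15 GB base + 100 x 5 MB adapters: ~{vram_needed_gb(15, 5, 100):.1f} GB VRAM")
```

Because each adapter adds only megabytes, the base model dominates the memory budget until the number of resident adapters grows very large.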

Launch templates in Amazon EC2 can be used to deploy multiple instances, with options for load balancing or auto scaling. You can also use AWS Systems Manager to deploy patches or changes. As discussed previously, a shared file system can be used across all deployed EC2 resources to store the LLM weights for multiple adapters, which can make the weights available to the instances faster than Amazon S3. The difference between a shared file system such as Amazon EFS and direct Amazon S3 access is the number of steps needed to load the model weights and adapters into memory. With Amazon S3, the adapter and model weights must first be transferred to the instance's local file system before they are loaded. A shared file system is mounted on the instance, so the files can be loaded directly without a local copy. There are implementation tradeoffs to weigh when choosing between the two. You can also use Amazon API Gateway as an API endpoint for REST-based applications.
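The extra step on the Amazon S3 path can be sketched as follows: each object under a prefix is copied to local disk before the server loads it. With a shared file system mounted on every instance, this copy step is skipped.

The bucket, prefix, and local directory names are hypothetical. The boto3 part needs S3 read access, which the instance profile from the setup steps provides.

```python
import os


def local_path_for(key, prefix, dest_dir):
    """Map an S3 key under prefix to a path inside dest_dir, preserving subfolders."""
    if not key.startswith(prefix):
        raise ValueError(f"{key!r} is not under {prefix!r}")
    relative = key[len(prefix):].lstrip("/")
    if not relative:
        raise ValueError(f"{key!r} is the prefix itself, not a file")
    return os.path.join(dest_dir, *relative.split("/"))


def download_prefix(bucket, prefix, dest_dir):
    """Copy every object under an S3 prefix to dest_dir and return the local paths."""
    import boto3  # imported here so local_path_for works without boto3 installed

    s3 = boto3.client("s3")
    paginator = s3.get_paginator("list_objects_v2")
    paths = []
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            if obj["Key"].endswith("/"):
                continue  # skip zero-byte "folder" marker objects
            path = local_path_for(obj["Key"], prefix, dest_dir)
            os.makedirs(os.path.dirname(path), exist_ok=True)
            s3.download_file(bucket, obj["Key"], path)
            paths.append(path)
    return paths


# Example with hypothetical names:
# download_prefix("my-adapter-bucket", "adapters/sql-gen/", "/data/adapters/sql-gen")
```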

Host LoRAX servers for multiple models in production

If you intend to use multiple custom FMs for specific tasks with LoRAX, follow this guide for hosting multiple variants of models. Follow this AWS blog on hosting text classification with BERT to perform task routing between the expert models. For an example implementation of efficient model hosting using adapter swapping, refer to LoRA Land, which was released by Predibase, the organization responsible for LoRAX. LoRA Land is a collection of 25 fine-tuned variants of Mistral AI's Mistral 7B LLM, hosted behind a single endpoint, that collectively outperform top-performing LLMs. The following diagram is the architecture.

Cleanup

In this guide, we created security groups, an S3 bucket, an optional SageMaker notebook instance, and an EC2 inference server. It’s important to terminate resources created during this walkthrough to avoid incurring additional costs:

- Delete the S3 bucket

- Terminate the EC2 inference server

- Terminate the SageMaker notebook instance

Conclusion

After following this guide, you can set up an EC2 instance with LoRAX for language model hosting and serving, store and access custom model weights and adapters in Amazon S3, and manage pre-trained and custom models and variants using SageMaker. LoRAX offers a cost-efficient approach for those who want to host multiple language models at scale. For more information on working with generative AI on AWS, refer to Announcing New Tools for Building with Generative AI on AWS.

About the Authors

John Kitaoka is a Solutions Architect at Amazon Web Services, working with government entities, universities, nonprofits, and other public sector organizations to design and scale artificial intelligence solutions. With a background in mathematics and computer science, John’s work covers a broad range of ML use cases, with a primary interest in inference, AI responsibility, and security. In his spare time, he loves woodworking and snowboarding.

Varun Jasti is a Solutions Architect at Amazon Web Services, working with AWS Partners to design and scale artificial intelligence solutions for public sector use cases to meet compliance standards. With a background in computer science, his work covers a broad range of ML use cases, primarily focusing on LLM training and inference and computer vision. In his spare time, he loves playing tennis and swimming.

Baladithya Balamurugan is a Solutions Architect at AWS focused on ML deployments for inference and on using AWS Neuron to accelerate training and inference. He works with customers to enable and accelerate their ML deployments on services such as Amazon SageMaker and Amazon EC2. Based out of San Francisco, Baladithya enjoys tinkering, developing applications, and working on his homelab in his free time.
