At Amazon, our team builds Rufus, a generative AI-powered shopping assistant that serves millions of customers. Deploying Rufus at this scale introduces significant challenges. Rufus is powered by a custom-built large language model (LLM). As the model’s complexity increased, we prioritized developing scalable multi-node inference capabilities that maintain high-quality interactions while delivering low latency and cost-efficiency.

In this post, we share how we developed a multi-node inference solution using AWS Trainium and vLLM, an open source library designed for efficient and high-throughput serving of LLMs. We also discuss how we built a management layer on top of Amazon Elastic Container Service (Amazon ECS) to host models across multiple nodes, facilitating robust, reliable, and scalable deployments.

Challenges with multi-node inference

As our Rufus model grew, we needed multiple accelerator instances because no single chip or instance had enough memory for the entire model. We first needed to engineer our model to be split across multiple accelerators. Techniques such as tensor parallelism can accomplish this, although the choice of technique also affects metrics such as time to first token. At larger scale, the accelerators on a single node might not be enough, and the model must span multiple hosts, or nodes. At that point, you must also manage those nodes and decide how the model is sharded across them (and their respective accelerators). We needed to address two major areas:

- Model performance – Maximize compute and memory resource utilization across multiple nodes to serve models at high throughput, without sacrificing low latency. This includes designing effective parallelism strategies and model weight-sharding approaches to partition computation and memory footprint both within the same node and across multiple nodes, and an efficient batching mechanism that maximizes hardware resource utilization under dynamic request patterns.

- Multi-node inference infrastructure – Design a containerized, multi-node inference abstraction that represents a single model running across multiple nodes. This abstraction and its underlying infrastructure need to support fast inter-node communication, maintain consistency across distributed components, and allow for deployment and scaling as a single, deployable unit. In addition, they must support continuous integration to allow rapid iteration and safe, reliable rollouts in production environments.
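To make the idea of splitting a model across accelerators concrete, the following is a minimal sketch of tensor parallelism on a single linear layer. It is a toy in plain Python, not the Neuron SDK implementation: the function names (`shard_columns`, `tensor_parallel_matmul`) and the tiny matrices are illustrative assumptions. Each simulated device holds one column shard of the weight matrix, and the partial outputs are concatenated, which is the role an all-gather collective plays on real hardware.

```python
# Toy illustration of tensor parallelism: a weight matrix is split
# column-wise across simulated devices and the partial results are joined.

def matmul(x, w):
    """Multiply a (rows x k) matrix by a (k x cols) matrix, as nested lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]

def shard_columns(w, num_shards):
    """Split the columns of w into num_shards equal contiguous groups."""
    cols = len(w[0])
    assert cols % num_shards == 0, "columns must divide evenly across shards"
    width = cols // num_shards
    return [[row[i * width:(i + 1) * width] for row in w] for i in range(num_shards)]

def tensor_parallel_matmul(x, w, num_shards):
    """Compute x @ w by giving each simulated device one column shard."""
    partials = [matmul(x, shard) for shard in shard_columns(w, num_shards)]
    # Concatenate per-device outputs along the column dimension.
    return [sum((p[r] for p in partials), []) for r in range(len(x))]

x = [[1, 2], [3, 4]]
w = [[1, 0, 2, 1], [0, 1, 1, 3]]
full = matmul(x, w)
sharded = tensor_parallel_matmul(x, w, num_shards=2)
print(full == sharded)
```

Each device stores only a fraction of the weights, which is what relieves per-device memory pressure; the cost is the extra communication step needed to combine the partial outputs.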

Solution overview

Taking these requirements into account, we built a multi-node inference solution designed to overcome the scalability, performance, and reliability challenges inherent in serving LLMs at production scale using tens of thousands of Trn1 instances.

To create a multi-node inference infrastructure, we implemented a leader/follower multi-node inference architecture in vLLM. In this configuration, the leader node uses vLLM for request scheduling, batching, and orchestration, and follower nodes execute distributed model computations. Both leader and follower nodes share the same NeuronWorker implementation in vLLM, providing a consistent model execution path through seamless integration with the AWS Neuron SDK.

To address how we split the model across multiple instances and accelerators, we used hybrid parallelism strategies supported in the Neuron SDK. Hybrid parallelism strategies such as tensor parallelism and data parallelism are selectively applied to maximize cross-node compute and memory bandwidth utilization, significantly improving overall throughput.

Being aware of how the nodes are connected is also important to avoid latency penalties. We took advantage of network topology-aware node placement. Optimized placement facilitates low-latency, high-bandwidth cross-node communication using Elastic Fabric Adapter (EFA), minimizing communication overhead and improving collective operation efficiency.

Lastly, to manage models across multiple nodes, we built a multi-node inference unit abstraction layer on Amazon ECS. This abstraction layer supports deploying and scaling multiple nodes as a single, cohesive unit, providing robust and reliable large-scale production deployments.

By combining a leader/follower orchestration model, hybrid parallelism strategies, and a multi-node inference unit abstraction layer built on top of Amazon ECS, this architecture deploys a single model replica to run seamlessly across multiple nodes, supporting large production deployments. In the following sections, we discuss the architecture and key components of the solution in more detail.

Inference engine design

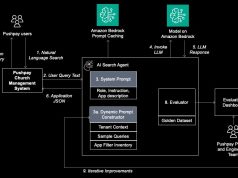

We built an architecture on Amazon ECS using Trn1 instances that supports scaling inference beyond a single node to fully use distributed hardware resources, while maintaining seamless integration with NVIDIA Triton Inference Server, vLLM, and the Neuron SDK.

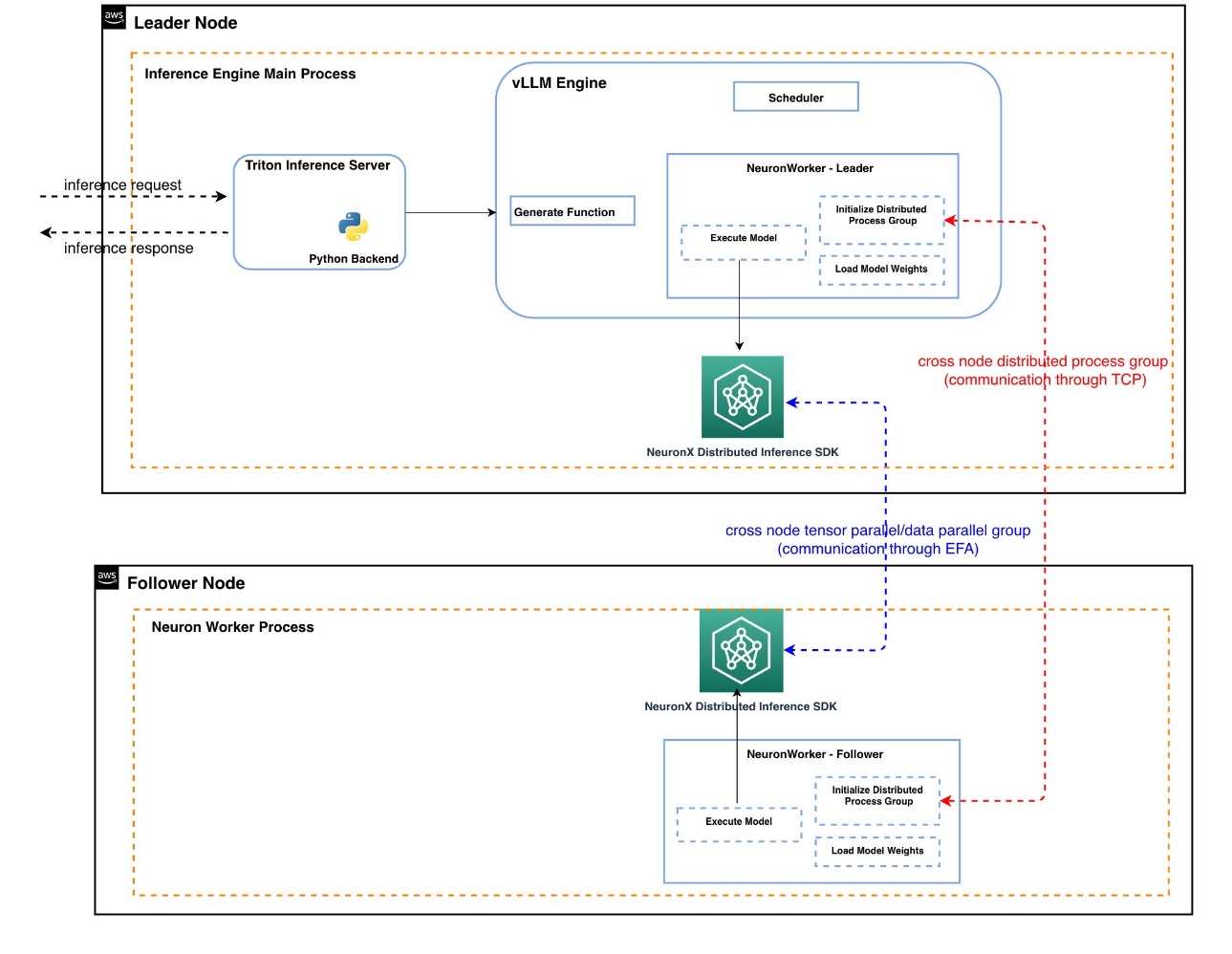

Although the following diagram illustrates a two-node configuration (leader and follower) for simplicity, the architecture is designed to be extended to support additional follower nodes as needed.

In this architecture, the leader node runs the Triton Inference Server and vLLM engine, serving as the primary orchestration unit for inference. By integrating with vLLM, we can use continuous batching—a technique used in LLM inference to improve throughput and accelerator utilization by dynamically scheduling and processing inference requests at the token level. The vLLM scheduler handles batching based on the global batch size. It operates in a single-node context and is not aware of multi-node model execution. After the requests are scheduled, they’re handed off to the NeuronWorker component in vLLM, which handles broadcasting model inputs and executing the model through integration with the Neuron SDK.
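The token-level scheduling behind continuous batching can be sketched with a small simulation. This is not vLLM's scheduler; the `Request` class, the `run_continuous_batching` function, and the `max_batch_size` parameter are hypothetical names used only to show the idea that finished requests leave the batch after any decode step and waiting requests take their slots immediately.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class Request:
    request_id: str
    tokens_to_generate: int
    generated: int = 0

def run_continuous_batching(requests, max_batch_size):
    """Simulate token-level scheduling; returns the batch composition per step."""
    waiting = deque(requests)
    running = []
    steps = []
    while waiting or running:
        # Admit waiting requests whenever a slot is free.
        while waiting and len(running) < max_batch_size:
            running.append(waiting.popleft())
        steps.append([r.request_id for r in running])
        # One decode step produces one token per running request.
        for r in running:
            r.generated += 1
        # Finished requests leave immediately, freeing slots for the next step.
        running = [r for r in running if r.generated < r.tokens_to_generate]
    return steps

schedule = run_continuous_batching(
    [Request("a", 3), Request("b", 1), Request("c", 2)], max_batch_size=2
)
for step, batch in enumerate(schedule):
    print(step, batch)
```

In this run, request `c` joins the batch as soon as `b` finishes after the first step, rather than waiting for `a` to complete, which is why the accelerator stays busy under uneven request lengths.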

The follower node operates as an independent process and acts as a wrapper around the vLLM NeuronWorker component. It continuously listens for model inputs broadcast from the leader node and executes the model using the Neuron runtime in parallel with the leader node.

For nodes to communicate with each other with the proper information, two mechanisms are required:

- Cross-node model inputs broadcasting on CPU – Model inputs are broadcast from the leader node to follower nodes using the torch.distributed communication library with the Gloo backend. A distributed process group is initialized during NeuronWorker initialization on both the leader and follower nodes. This broadcast occurs on CPU over standard TCP connections, allowing follower nodes to receive the full set of model inputs required for model execution.

- Cross-node collectives communication on Trainium chips – During model execution, cross-node collectives (such as all gather or all reduce) are managed by the Neuron Distributed Inference (NxDI) library, which uses EFA to deliver high-bandwidth, low-latency inter-node communication.
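The control flow between the leader and its followers can be sketched as follows. This is a simplified stand-in, not the NeuronWorker code: in-process `queue.Queue` objects and threads replace the torch.distributed broadcast over Gloo so that the example runs with the standard library alone, and `run_model`, `follower_loop`, and `leader_step` are hypothetical names. The point it illustrates is that every node receives the same model inputs and then executes its part of the model in parallel.

```python
import queue
import threading

SHUTDOWN = None  # sentinel telling followers to stop listening

def run_model(node_name, model_inputs):
    """Hypothetical stand-in for executing the model shard on one node."""
    return f"{node_name} ran batch {model_inputs['batch_id']}"

def follower_loop(node_name, inbox, results):
    """Block on inputs from the leader and execute each one as it arrives."""
    while True:
        model_inputs = inbox.get()
        if model_inputs is SHUTDOWN:
            break
        results.append(run_model(node_name, model_inputs))

def leader_step(model_inputs, follower_inboxes, results):
    """Send the same inputs to every follower, then run the leader's share."""
    for inbox in follower_inboxes:
        inbox.put(model_inputs)
    results.append(run_model("leader", model_inputs))

results = []
inboxes = [queue.Queue()]  # one inbox per follower; add more to add followers
followers = [
    threading.Thread(target=follower_loop, args=(f"follower-{i}", inbox, results))
    for i, inbox in enumerate(inboxes)
]
for t in followers:
    t.start()
for batch_id in range(2):
    leader_step({"batch_id": batch_id, "token_ids": [1, 2, 3]}, inboxes, results)
for inbox in inboxes:
    inbox.put(SHUTDOWN)
for t in followers:
    t.join()
print(sorted(results))
```

Only the small input payload travels over this CPU path. The heavy tensor traffic during execution goes through the on-accelerator collectives described in the second mechanism.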

Model parallelism strategies

We adopted hybrid model parallelism strategies through integration with the Neuron SDK to maximize cross-node memory bandwidth utilization (MBU) and model FLOPs utilization (MFU), while also reducing memory pressure on each individual node. For example, during the context encoding (prefill) phase, we use context parallelism by splitting inputs along the sequence dimension, facilitating parallel computation of attention layers across nodes. In the decoding phase, we adopt data parallelism by partitioning the input along the batch dimension, so each node can serve a subset of batch requests independently.
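The difference between the two partitioning axes can be shown with a short sketch. It covers only how the work is divided, under the assumption of contiguous, near-equal chunks; the helper names are illustrative, and the cross-node communication that attention needs under context parallelism is left out.

```python
def split_evenly(items, num_parts):
    """Split a list into num_parts contiguous chunks whose sizes differ by at most one."""
    base, extra = divmod(len(items), num_parts)
    chunks, start = [], 0
    for i in range(num_parts):
        end = start + base + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks

def context_parallel_split(prompt_tokens, num_nodes):
    """Prefill: each node gets a contiguous slice of ONE sequence."""
    return split_evenly(prompt_tokens, num_nodes)

def data_parallel_split(batch, num_nodes):
    """Decode: each node gets a subset of the requests in the batch."""
    return split_evenly(batch, num_nodes)

prompt = [101, 7, 42, 9, 15, 3]  # hypothetical token IDs for one prompt
batch = ["req-0", "req-1", "req-2", "req-3", "req-4"]
print(context_parallel_split(prompt, 2))
print(data_parallel_split(batch, 2))
```

Splitting along the sequence dimension suits prefill, where a single long prompt carries most of the compute, whereas splitting along the batch dimension suits decoding, where each request produces one token per step.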

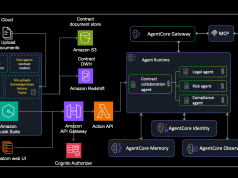

Multi-node inference infrastructure

We also designed a distributed LLM inference abstraction: the multi-node inference unit, as illustrated in the following diagram. This abstraction serves as a unit of deployment for inference service, supporting consistent and reliable rolling deployments on a cell-by-cell basis across the production fleet. This is important so you only have a minimal number of nodes offline during upgrades without impacting your entire service. Both the leader and follower nodes described earlier are fully containerized, so each node can be independently managed and updated while maintaining a consistent execution environment across the entire fleet. This consistency is critical for reliability, because the leader and follower nodes must run with identical software stacks—including Neuron SDKs, Neuron drivers, EFA software, and other runtime dependencies—to achieve correct and reliable multi-node inference execution. The inference containers are deployed on Amazon ECS.

A crucial aspect of achieving high-performance distributed LLM inference is minimizing the latency of cross-node collective operations, which rely on Remote Direct Memory Access (RDMA). To enable this, optimized node placement is essential: the deployment management system must compose a cell by pairing nodes based on their physical location and proximity. With this optimized placement, cross-node operations can utilize the high-bandwidth, low-latency EFA network available to instances. The deployment management system gathers this information using the Amazon EC2 DescribeInstanceTopology API to pair nodes based on their underlying network topology.
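As a rough illustration of topology-aware pairing, the sketch below groups instances by the network node closest to them and forms two-node cells from instances that share it. The post does not describe the actual pairing algorithm, so this logic is an assumption. The input mirrors the `Instances` list in a DescribeInstanceTopology response, where each entry has an `InstanceId` and a `NetworkNodes` list ordered from the top of the hierarchy down to the node nearest the instance; the instance and node IDs are made up.

```python
from collections import defaultdict

def pair_nodes_by_topology(instances):
    """Pair instances that share the same lowest-level network node.

    Returns (cells, unpaired): a list of two-instance tuples and a list of
    instance IDs that had no neighbor under the same network node.
    """
    by_network_node = defaultdict(list)
    for inst in instances:
        closest_node = inst["NetworkNodes"][-1]  # last entry is nearest to the instance
        by_network_node[closest_node].append(inst["InstanceId"])

    cells, unpaired = [], []
    for node in sorted(by_network_node):
        ids = sorted(by_network_node[node])
        # Pair neighbors under the same network node; an odd one is left over.
        for i in range(0, len(ids) - 1, 2):
            cells.append((ids[i], ids[i + 1]))
        if len(ids) % 2:
            unpaired.append(ids[-1])
    return cells, unpaired

# Hypothetical data shaped like a DescribeInstanceTopology response.
instances = [
    {"InstanceId": "i-aaa", "NetworkNodes": ["nn-1", "nn-10", "nn-100"]},
    {"InstanceId": "i-bbb", "NetworkNodes": ["nn-1", "nn-10", "nn-100"]},
    {"InstanceId": "i-ccc", "NetworkNodes": ["nn-1", "nn-11", "nn-110"]},
    {"InstanceId": "i-ddd", "NetworkNodes": ["nn-1", "nn-11", "nn-110"]},
    {"InstanceId": "i-eee", "NetworkNodes": ["nn-1", "nn-12", "nn-120"]},
]
cells, unpaired = pair_nodes_by_topology(instances)
print(cells, unpaired)
```

A production system would likely fall back to the next level of the hierarchy for leftover instances instead of leaving them unpaired; that refinement is omitted here.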

To maintain high availability for customers (making sure Rufus is always online and ready to answer a question), we developed a proxy layer positioned between the system’s ingress or load-balancing layer and the multi-node inference unit. This proxy layer is responsible for continuously probing and reporting the health of all worker nodes. Rapidly detecting unhealthy nodes in a distributed inference environment is critical for maintaining availability because it makes sure the system can immediately route traffic away from unhealthy nodes and trigger automated recovery processes to restore service stability.

The proxy also monitors real-time load on each multi-node inference unit and reports it to the ingress layer, supporting fine-grained, system-wide load visibility. This helps the load balancer make optimized routing decisions that maximize per-cell performance and overall system efficiency.
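The routing decision that this health and load reporting enables might look like the following sketch. The `UnitStatus` class, its fields, and the least-loaded policy are assumptions for illustration; the post does not specify the proxy's data model or the load balancer's algorithm. The sketch also assumes that a unit can serve traffic only when every node in it is healthy, because a single model replica spans all of them.

```python
from dataclasses import dataclass

@dataclass
class UnitStatus:
    unit_id: str
    node_healthy: dict       # node name -> latest health probe result
    in_flight_requests: int  # load reported by the proxy

    @property
    def healthy(self):
        # Assumption: a multi-node unit can serve only if every node is healthy.
        return all(self.node_healthy.values())

def choose_unit(statuses):
    """Return the id of the least-loaded healthy unit, or None if none is healthy."""
    candidates = [s for s in statuses if s.healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda s: s.in_flight_requests).unit_id

statuses = [
    UnitStatus("cell-1", {"leader": True, "follower": True}, in_flight_requests=8),
    UnitStatus("cell-2", {"leader": True, "follower": False}, in_flight_requests=1),
    UnitStatus("cell-3", {"leader": True, "follower": True}, in_flight_requests=3),
]
print(choose_unit(statuses))
```

Here `cell-2` is skipped despite having the lightest load because its follower failed a probe, and traffic goes to `cell-3`, the least-loaded unit that is fully healthy.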

Conclusion

As Rufus continues to evolve and become more capable, we must continue to build systems to host our model. Using this multi-node inference solution, we successfully launched a much larger model across tens of thousands of AWS Trainium chips to Rufus customers, supporting Prime Day traffic. This increased model capacity has enabled new shopping experiences and significantly improved user engagement. This achievement marks a major milestone in pushing the boundaries of large-scale AI infrastructure for Amazon, delivering a highly available, high-throughput, multi-node LLM inference solution at industry scale.

AWS Trainium in combination with solutions such as NVIDIA Triton and vLLM can help you enable large inference workloads at scale with great cost performance. We encourage you to try these solutions to host large models for your workloads.

About the authors

James Park is an ML Specialist Solutions Architect at Amazon Web Services. He works with Amazon.com to design, build, and deploy technology solutions on AWS, and has a particular interest in AI and machine learning. In his spare time, he enjoys seeking out new cultures and experiences and staying up to date with the latest technology trends.

Faqin Zhong is a Software Engineer at Amazon Stores Foundational AI, working on LLM inference infrastructure and optimizations. Passionate about generative AI technology, Faqin collaborates with leading teams to drive innovation, making LLMs more accessible and impactful, and ultimately enhancing customer experiences across diverse applications. Outside of work, she enjoys cardio exercise and baking with her son.

Charlie Taylor is a Senior Software Engineer within Amazon Stores Foundational AI, focusing on developing distributed systems for high performance LLM inference. He builds inference systems and infrastructure to help larger, more capable models respond to customers faster. Outside of work, he enjoys reading and surfing.

Yang Zhou is a Software Engineer working on building and optimizing machine learning systems. His recent focus is enhancing the performance and cost-efficiency of generative AI inference. Beyond work, he enjoys traveling and has recently discovered a passion for running long distances.

Nicolas Trown is a Principal Engineer in Amazon Stores Foundational AI. His recent focus is lending his systems expertise across Rufus to aid the Rufus Inference team and efficient utilization across the Rufus experience. Outside of work, he enjoys spending time with his wife and taking day trips to the nearby coast, Napa, and Sonoma areas.

Michael Frankovich is a Principal Software Engineer at Amazon Core Search, where he supports the ongoing development of their cellular deployment management system used to host Rufus, among other search applications. Outside of work, he enjoys playing board games and raising chickens.

Adam (Hongshen) Zhao is a Software Development Manager at Amazon Stores Foundational AI. In his current role, Adam leads the Rufus Inference team to build generative AI inference optimization solutions and inference systems at scale for fast inference at low cost. Outside of work, he enjoys traveling with his wife and creating art.

Bing Yin is a Director of Science at Amazon Stores Foundational AI. He leads the effort to build LLMs that are specialized for shopping use cases and optimized for inference at Amazon scale. Outside of work, he enjoys running marathon races.

Parthasarathy Govindarajen is Director of Software Development at Amazon Stores Foundational AI. He leads teams that develop advanced infrastructure for large language models, focusing on both training and inference at scale. Outside of work, he spends his time playing cricket and exploring new places with his family.
