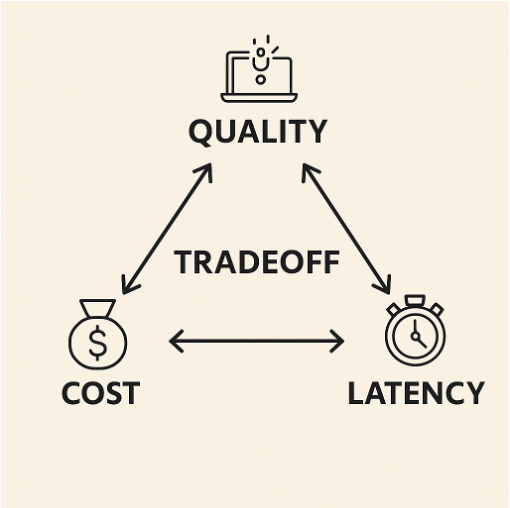

As organizations scale their generative AI implementations, the critical challenge of balancing quality, cost, and latency becomes increasingly complex. With inference costs dominating 70–90% of large language model (LLM) operational expenses, and verbose prompting strategies inflating token volume by 3–5x, organizations are actively seeking more efficient approaches to model interaction. Traditional prompting methods, while effective, often create unnecessary overhead that impacts both cost efficiency and response times.

This post explores Chain-of-Draft (CoD), an innovative prompting technique introduced in a Zoom AI Research paper Chain of Draft: Thinking Faster by Writing Less, that revolutionizes how models approach reasoning tasks. While Chain-of-Thought (CoT) prompting has been the go-to method for enhancing model reasoning, CoD offers a more efficient alternative that mirrors human problem-solving patterns—using concise, high-signal thinking steps rather than verbose explanations.

Using Amazon Bedrock and AWS Lambda, we demonstrate a practical implementation of CoD that can achieve remarkable efficiency gains: up to 75%reduction in token usage and over 78% decrease in latency, all while maintaining the accuracy levels of traditional CoT approaches. Through detailed examples, code samples, and performance metrics, we walk through deploying CoD in an AWS environment and measuring its impact on AI implementations. This approach not only optimizes costs but also enhances the overall user experience through faster response times.

Understanding Chain-of-Thought prompting

Chain-of-Thought (CoT) prompting is a technique that guides large language models to reason through problems step by step, rather than jumping directly to an answer. This method has proven particularly effective for complex tasks such as logical puzzles, mathematical problems, and common-sense reasoning scenarios. By mimicking human problem-solving patterns, CoT helps models break down complex problems into manageable steps, improving both accuracy and transparency.

Example of CoT prompting:

Question: If there are 5 apples and you eat 2 apples, how many apples remain?

CoT response: Start with 5 apples. I eat 2 apples. Subtract 2 from 5. 5 – 2 = 3 apples remaining.

However, as the example above shows, this approach comes with some drawbacks in production environments. The verbose nature of CoT responses leads to increased token usage and higher costs. The extended processing time required for generating detailed explanations results in higher latency, making it in some cases less suitable for real-time applications. Additionally, the detailed outputs can complicate downstream processing and integration with other systems.

Introducing Chain-of-Draft prompting

Chain-of-Draft (CoD) is a novel prompting technique that aims to reduce verbosity by limiting the number of words used in each reasoning step, focusing only on the essential calculations or transformations needed to progress, while significantly reducing token usage and inference latency. CoD draws inspiration from how humans solve problems with brief mental notes rather than verbose explanations—encouraging LLMs to generate compact, high-signal reasoning steps.

The key innovation of CoD lies in its constraint: each reasoning step is limited to five words or less. This limitation forces the model to focus on essential logical components while minimizing unnecessary verbosity. For instance, when solving a mathematical word problem, instead of generating full sentences explaining each step, CoD produces concise numerical operations and key logical markers.

Consider this example:

Question: Jason had 20 lollipops. He gave Denny some lollipops. Now Jason has 12 lollipops. How many lollipops did Jason give to Denny?

A CoT response might include several sentences explaining the reasoning process like, “Jason had 20 lollipops. He gave some to Denny and now has 12 left. So he gave away 8.”

In contrast, a CoD response would simply state “Start: 20, End: 12, 20 – 12 = 8.”

This minimalist approach achieves the same logical reasoning while using significantly fewer tokens.

Why CoD works

The key idea behind CoD is that most reasoning chains contain high redundancy. By distilling steps to their semantic core, CoD helps the model focus on the logical structure of the task rather than language fluency. This results in lower inference latency due to shorter outputs, reduced token cost from minimized generation and cleaner output for downstream parsing or automation.

This minimalism is achieved without sacrificing accuracy. In fact, according to the original Zoom AI paper, CoD “achieved 91.4% accuracy on GSM8K (vs. 95.3% for CoT), while reducing output tokens by up to 92.1%, and cutting latency nearly in half in several models tested.”

Under the hood, the CoD technique uses natural language prompts that instruct the model to “think step by step” while explicitly limiting the length of each reasoning step: “Only keep a minimum draft for each thinking step, with 5 words at most.”

The researchers found that models like GPT-4, Claude, and Cohere Command R+ performed especially well under these constraints, particularly when using few-shot examples to demonstrate the concise reasoning pattern.

Beyond arithmetic tasks, CoD has demonstrated strong performance in commonsense reasoning tasks. In the original Zoom AI paper, the authors evaluated CoD using big-bench benchmarks, specifically focused on date understanding and sports understanding tasks. The same system prompts were used as in arithmetic evaluations, maintaining consistency across experiments. The results revealed that CoD not only significantly reduces token generation and latency, but in several cases, outperforms CoT in accuracy—especially when verbose output isn’t necessary.

One notable finding was with a large language model on the sports understanding task: CoT produced long, verbose responses with an average of 172.5 output tokens, while CoD reduced this to 31.3 tokens, achieving an ~82% reduction. Interestingly, accuracy improved slightly, demonstrating that CoD can be more effective with fewer words.

Here’s a snapshot from the original paper showing the evaluation across two LLMs:

| Model | Prompt | Accuracy | Token | Latency |

| LLM-1 | Standard | 72.60% | 5.2 | 0.6s |

| Chain-of-Thought | 90.20% | 75.7 | 1.7s | |

| Chain-of-Draft | 88.10% | 30.2 | 1.3s | |

| LLM-2 | Standard | 84.30% | 5.2 | 1.0s |

| Chain-of-Thought | 87% | 172.5 | 3.2s | |

| Chain-of-Draft | 89.70% | 31.3 | 1.4s |

Table 1. Date understanding evaluation results. (Chain of Draft: Thinking Faster by Writing Less)

These results further validate CoD’s value in real-world reasoning scenarios, showing that models can reason effectively with fewer, smarter tokens. The implication for production use is clear: faster responses and lower cost, without a trade-off in quality.

In the next section, we show how we implemented this prompting strategy using Amazon Bedrock and AWS Lambda, and how CoD compares to CoT across foundation models in real-world conditions.

Implementation and evaluation on AWS

To evaluate the efficiency of CoD prompting techniques, we run a test in Amazon Bedrock and solve the “Red, Blue, and Green Balls” puzzle using an LLM.

The Puzzle: You have three boxes. Each box contains three balls, but the balls can be red, blue, or green. Box 1 is labelled “Red Balls Only.” Box 2 is labelled “Blue Balls Only.” Box 3 is labelled “Red and Blue Balls Only.” The labels on the boxes are all incorrect. The task is that you must determine the contents of each box, knowing that all labels are incorrect. You can only take a single ball from one box and observe its color. Then you must deduce the contents of all three boxes.

We chose this puzzle because solving it requires a measurable number of tokens, as the problem needs to be broken down into several logical steps, each requiring the LLM to process and retain information. The LLM needs to handle “if-then” statements and consider different possibilities leading to logical reasoning. The LLM also needs to maintain the context of the puzzle throughout the reasoning process, and lastly, the LLM needs to understand the symbols and relationships between the colors, labels, and balls.

Prerequisites

To test and compare the prompting techniques in Amazon Bedrock, verify you have the following prerequisites:

- AWS account with permission to create and execute Lambda functions

- Amazon Bedrock access enabled in your AWS Region (for example, us-east-1) along with Model Access for example, Model-1 and Model-2; select any model of your choice

- AWS IAM role for the Lambda function execution

- Permissions to invoke Amazon Bedrock models (bedrock:Converse)

- Permissions to put custom metrics in Amazon CloudWatch (cloudwatch:PutMetricData)

- (Optional) CloudWatch Logs permissions for logging

- Necessary Python libraries (boto3), included in the AWS Lambda runtime environment for Python 3.9 or later

Evaluation with Amazon Bedrock Converse API

We start by creating a Python Lambda function designed to interact with models using Amazon Bedrock to solve the puzzle. This AWS Lambda function uses the Amazon Bedrock Converse API, which provides a unified, consistent interface to interact with various foundation models. The Converse API simplifies sending conversational messages to models and receiving their replies, supporting multi-turn dialogue and advanced features while managing AWS authentication and infrastructure. The Lambda function initializes clients for Amazon Bedrock Runtime and CloudWatch and send a static puzzle prompt as a user message to the Converse API, retrieve the response text, and calculate latency and token usage for both input and output. These metrics are published to CloudWatch, and relevant logs are recorded. Finally, the function returns the model’s answer along with input/output token counts. Errors are logged and returned with proper HTTP error code.

The Lambda function

import json

import boto3

import time

import logging

from botocore.exceptions import ClientError

logger = logging.getLogger()

logger.setLevel(logging.INFO)

bedrock = boto3.client('bedrock-runtime', region_name='us-east-1')

cloudwatch = boto3.client('cloudwatch')

MODEL_ID = "model1-id" # Replace with actual Model 1 ID

PROMPT = (

"You have three boxes. Each box contains three balls, but the balls can be red, blue, or green. "

"Box 1 is labeled as 'Red Balls Only'. Box 2 is labeled 'Blue Balls Only'. "

"Box 3 is labeled 'Red and Blue Balls Only'. The labels on the boxes are all incorrect. "

"The Task: You must determine the contents of each box, knowing that all labels are incorrect. "

"You can only take a single ball from one box and observe its color. "

"Then you must deduce the contents of all three boxes. "

"Think step by step to answer the question, but only keep a minimum draft for each thinking step, with 5 words at most. "

"Return the answer at the end of the response after separator ###."

)

def lambda_handler(event, context):

conversation = [{"role": "user", "content": [{"text": PROMPT}]}]

start_time = time.time()

try:

response = bedrock.converse(

modelId=MODEL_ID,

messages=conversation,

inferenceConfig={"maxTokens": 2000, "temperature": 0.7}

)

response_text = response["output"]["message"]["content"][0]["text"]

latency = time.time() - start_time

input_tokens = len(PROMPT.split())

output_tokens = len(response_text.split())

cloudwatch.put_metric_data(

Namespace='ChainOfDraft',

MetricData=[

{"MetricName": "Latency", "Value": latency, "Unit": "Seconds"},

{"MetricName": "TokensUsed", "Value": input_tokens + output_tokens, "Unit": "Count"},

]

)

logger.info({

"request_id": context.aws_request_id,

"latency_seconds": round(latency, 2),

"total_tokens": input_tokens + output_tokens

})

return {

"statuscode": 200,

"body": json.dumps({

"response": response_text,

"input_tokens": input_tokens,

"output_tokens": output_tokens,

"metrics": {

"latency_seconds": round(latency, 2),

"total_tokens": input_tokens + output_tokens,

},

}),

}

except ClientError as e:

logger.error(f"AWS service error: {e}")

return {"statuscode": 500, "body": json.dumps("Service error occurred")}

except Exception as e:

logger.error(f"Unexpected error: {e}")

return {"statusCode": 500, "body": json.dumps(f"Internal error occurred: {e}")}If you’re using Model 2, change the MODEL_ID in the above code to Model 2 id. The rest of the code remains the same.

Testing

Here are the three prompts used with the models to test the Lambda function. Change the PROMPT in the Lambda function to test out the prompting techniques.

Standard prompt:

“You have three boxes. Each box contains three balls, but the balls can be red, blue, or green. Box 1 is labelled as ‘Red Balls Only’. Box 2 is labelled ‘Blue Balls Only’. Box 3 is labelled ‘Red and Blue Balls Only’. The labels on the boxes are all incorrect. The Task: You must determine the contents of each box, knowing that all labels are incorrect. You can only take a single ball from one box and observe its color. Then you must deduce the contents of all three boxes. Answer the question directly. Do not return any preamble explanation or reasoning.”

Chain-of-Thought prompt:

“You have three boxes. Each box contains three balls, but the balls can be red, blue, or green. Box 1 is labelled as ‘Red Balls Only’. Box 2 is labelled ‘Blue Balls Only’. Box 3 is labelled ‘Red and Blue Balls Only’. The labels on the boxes are all incorrect. The Task: You must determine the contents of each box, knowing that all labels are incorrect. You can only take a single ball from one box and observe its color. Then you must deduce the contents of all three boxes. Think step by step to answer the question. Return the answer at the end of the response after separator.”

Chain-of-Draft prompt:

“You have three boxes. Each box contains three balls, but the balls can be red, blue, or green. Box 1 is labelled as ‘Red Balls Only’. Box 2 is labelled ‘Blue Balls Only’. Box 3 is labelled ‘Red and Blue Balls Only’. The labels on the boxes are all incorrect. The Task: You must determine the contents of each box, knowing that all labels are incorrect. You can only take a single ball from one box and observe its color. Then you must deduce the contents of all three boxes. Think step by step to answer the question but only keep a minimum draft for each thinking step, with 5 words at most. Return the answer at the end of the response after separator.”

Results

On testing the lambda function with the above prompts with the two models, the results are as follows:

| Model | Prompt technique | Input tokens | Output tokens | Total Tokens | Tokens Reduction COD vs. COT |

Latency in seconds | Latency Reduction COD vs. COT |

| Model-1 | Standard Prompt | 102 | 23 | 125 | 0.8 | ||

| Chain of Thought | 109 | 241 | 350 | 3.28 | |||

| Chain of Draft | 123 | 93 | 216 | ((350-216)/350) × 100 = 39% reduction | 1.58 | ((3.28-1.58)/3.28) × 100 = 52% reduction | |

| Model-2 | Standard Prompt | 102 | 17 | 119 | 0.6 | ||

| Chain of Thought | 109 | 492 | 601 | 3.81 | |||

| Chain of Draft | 123 | 19 | 142 | ((601-142)/601) × 100 = 76% reduction | 0.79 | ((3.81-0.79)/3.81) × 100 = 79% reduction |

Table 2: Results of Testing with Standard prompt, CoD prompt and CoT prompt across the models

The comparison shows that Chain of Draft (CoD) is far more efficient than Chain of Thought (CoT) across both models. For Model-1, CoD reduces total token usage from 350 to 216 (a 39% reduction) and cuts latency from 3.28 to 1.58 seconds (a 52% reduction). The gains are even greater for Model-2 where COD lowers tokens from 601 to 142 (a 76% reduction) and latency from 3.81 to 0.79 seconds (a 79% reduction). Overall, COD delivers significant improvements in speed and token efficiency compared to COT, with especially strong results on Model-2.

When to avoid using CoD

While CoD prompting offers compelling benefits in terms of efficiency and performance, it’s not universally applicable. There are scenarios where traditional CoT or even more verbose reasoning may be more effective or appropriate. Based on our experimentation and findings from the original research, here are some key considerations:

- Zero-shot or prompt-only use cases: CoD performs best when paired with strong few-shot examples. In zero-shot scenarios—where no reasoning patterns are provided—models often struggle to adopt the minimalist drafting style on their own. This can lead to lower accuracy or incomplete reasoning steps.

- Tasks requiring high interpretability: For use cases like legal or medical document review, audit trails, or regulated environments, verbose reasoning may be essential. In such cases, CoT’s more transparent, step-by-step explanations provide better traceability and trust.

- Small language models: CoD underperformed on models with fewer than 3 billion parameters. These models lack the instruction-following fidelity and reasoning power needed to execute CoD-style prompts effectively. CoT may yield better results in these cases.

- Creative or open-ended tasks: Tasks that benefit from elaboration—like writing, ideation, or user-facing conversations—may lose value if too condensed. CoD is best suited for structured reasoning, logic, and deterministic tasks where brevity improves performance.

In short, CoD shines when the goal is efficient reasoning with minimal overhead—but careful prompt design, model selection, and task fit are key to success.

Conclusion and key takeaways

CoD prompting emerges as an efficient technique for organizations seeking to optimize their generative AI implementations. By encouraging language models to reason in concise, focused steps, CoD achieves remarkable improvements in both performance and resource utilization. Our implementation using Amazon Bedrock and AWS Lambda demonstrated significant benefits in token usage and improvement in latency compared to traditional CoT prompting, while maintaining comparable accuracy across various foundation models and complex reasoning tasks. As AI continues to evolve, CoD represents a significant step towards more efficient and performant language models. It’s particularly valuable for structured reasoning tasks where speed and token efficiency are critical, though it’s not a one-size-fits-all solution. We encourage practitioners to explore CoD in their own AI workflows, leveraging its potential to reduce costs, improve response times, and enhance scalability. The future of AI lies in smarter, more efficient reasoning approaches, and CoD prompting is at the forefront of this transformation.

To learn more about prompt engineering and CoD technique, refer to the following resources:

- What is prompt engineering?

- Chain of Draft: Thinking Faster by Writing Less

- Prompt Engineering concepts

About the authors

Ahmed Raafat is a Senior Manager at AWS leading the AI/ML Specialist team in the UK & Ireland, with over 20 years of technology experience helping major companies transform through AI and cloud technologies. As a trusted C-suite advisor and thought leader, he guides organizations in AI strategy and adoption, helping them use emerging technologies for innovation and growth.

Ahmed Raafat is a Senior Manager at AWS leading the AI/ML Specialist team in the UK & Ireland, with over 20 years of technology experience helping major companies transform through AI and cloud technologies. As a trusted C-suite advisor and thought leader, he guides organizations in AI strategy and adoption, helping them use emerging technologies for innovation and growth.

Kiranpreet Chawla is a Solutions Architect at Amazon Web Services, leveraging over 15 years of diverse technology experience to drive cloud and AI transformations. Kiranpreet’s expertise spans from cloud modernization to AI/ML implementations, enabling her to provide comprehensive guidance to customers across various industries.

Kiranpreet Chawla is a Solutions Architect at Amazon Web Services, leveraging over 15 years of diverse technology experience to drive cloud and AI transformations. Kiranpreet’s expertise spans from cloud modernization to AI/ML implementations, enabling her to provide comprehensive guidance to customers across various industries.