This post is co-written with Ameet Deshpande and Vatsal Saglani from Qyrus.

As businesses embrace accelerated development cycles to stay competitive, maintaining rigorous quality standards can pose a significant challenge. Traditional testing methods, which occur late in the development cycle, often result in delays, increased costs, and compromised quality.

Shift-left testing, which emphasizes earlier testing in the development process, aims to address these issues by identifying and resolving problems sooner. However, effectively implementing this approach requires the right tools. By using advanced AI models, QyrusAI improves testing throughout the development cycle—from generating test cases during the requirements phase to uncovering unexpected issues during application exploration.

In this post, we explore how QyrusAI and Amazon Bedrock are revolutionizing shift-left testing, enabling teams to deliver better software faster. Amazon Bedrock is a fully managed service that allows businesses to build and scale generative AI applications using foundation models (FMs) from leading AI providers. It enables seamless integration with AWS services, offering customization, security, and scalability without managing infrastructure.

QyrusAI: Intelligent testing agents powered by Amazon Bedrock

QyrusAI is a suite of AI-driven testing tools that enhances the software testing process across the entire software development lifecycle (SDLC). Using advanced large language models (LLMs) and vision-language models (VLMs) through Amazon Bedrock, QyrusAI provides a range of capabilities designed to elevate shift-left testing. Let’s dive into each agent and the cutting-edge models that power them.

TestGenerator

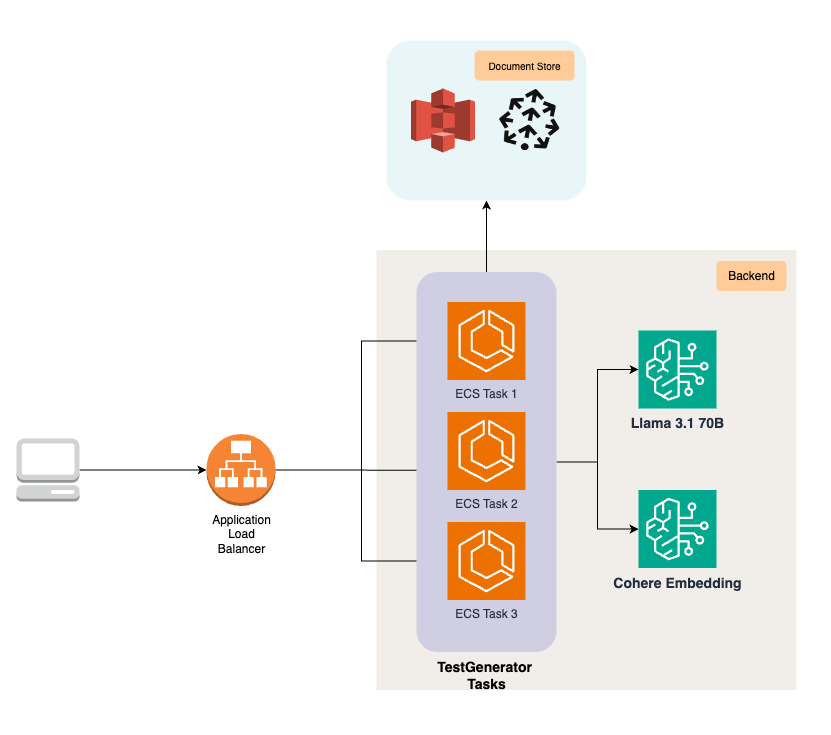

TestGenerator generates initial test cases based on requirements using a suite of advanced models:

- Meta’s Llama 70B – We use this model to generate test cases by analyzing requirements documents to identify key entities, user actions, and expected behaviors. Drawing on its in-context learning and natural language understanding capabilities, we infer possible scenarios and edge cases, creating a comprehensive list of test cases that align with the given requirements.

- Anthropic’s Claude 3.5 Sonnet – We use this model to evaluate the generated test scenarios, acting as a judge to assess whether the scenarios are comprehensive and accurate. We also use it to highlight missing scenarios, potential failure points, or edge cases that might not be apparent in the initial phases. Additionally, we use it to rank test cases based on relevance, helping prioritize the most critical tests covering high-risk areas and key functionalities.

- Cohere Embed English – We use this model to embed text from large documents such as requirement specifications, user stories, or functional requirement documents, enabling efficient semantic search and retrieval.

- Pinecone on AWS Marketplace – Embedded documents are stored in Pinecone to enable fast and efficient retrieval. During test case generation, these embeddings are used as part of a ReAct agent approach—where the LLM thinks, observes, searches for specific or generic requirements in the document, and generates comprehensive test scenarios.
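To make the retrieval step more concrete, here is a minimal sketch that assumes the requirement chunks have already been embedded and keeps the vectors in an in-memory dictionary as a stand-in for Pinecone. The helpers `build_cohere_embed_body` and `top_k_chunks` are hypothetical and not part of QyrusAI. The request body follows the Cohere Embed format on Amazon Bedrock (`texts` plus an `input_type` of `search_document` or `search_query`), and ranking uses plain cosine similarity.

```python
import json
import math
from typing import Dict, List, Sequence, Tuple


def build_cohere_embed_body(texts: Sequence[str], is_query: bool = False) -> str:
    """Build a JSON request body for a Cohere Embed model on Amazon Bedrock.

    Documents and queries use different input types so the model can
    optimize each side of the semantic search.
    """
    input_type = "search_query" if is_query else "search_document"
    return json.dumps({"texts": list(texts), "input_type": input_type})


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_chunks(
    query_vec: Sequence[float],
    index: Dict[str, List[float]],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Return the k chunk IDs most similar to the query (in-memory stand-in for Pinecone)."""
    scored = [(chunk_id, cosine(query_vec, vec)) for chunk_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

With a boto3 `bedrock-runtime` client, the body would be passed to `invoke_model` with an embedding model ID such as `cohere.embed-english-v3`; in an agent loop, the top-ranked chunks become the "observation" the LLM reads before drafting further test scenarios.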

The following diagram shows how TestGenerator is deployed on AWS using Amazon Elastic Container Service (Amazon ECS) tasks exposed through an Application Load Balancer, using Amazon Bedrock, Amazon Simple Storage Service (Amazon S3), and Pinecone for embedding storage and retrieval to generate comprehensive test cases.

VisionNova

VisionNova is QyrusAI’s design test case generator that crafts design-based test cases using Anthropic’s Claude 3.5 Sonnet. The model is used to analyze design documents and generate precise, relevant test cases. This workflow specializes in understanding UX/UI design documents and translating visual elements into testable scenarios.

The following diagram shows how VisionNova is deployed on AWS using Amazon ECS tasks exposed through an Application Load Balancer. It uses Anthropic’s Claude 3 and Claude 3.5 Sonnet models on Amazon Bedrock for image understanding and Amazon S3 for storing images to generate design-based test cases that validate UI/UX elements.
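For image understanding, a design screenshot can be sent to a Claude model through the Amazon Bedrock Converse API as an image content block next to a text instruction. The sketch below only builds the request. The `build_design_review_request` helper, the prompt wording, and the example model ID are illustrative assumptions, not VisionNova’s actual implementation.

```python
from typing import Any, Dict

# Example model ID; check which model IDs are enabled in your account and Region.
CLAUDE_35_SONNET = "anthropic.claude-3-5-sonnet-20240620-v1:0"

# Image formats accepted by Converse API image content blocks.
SUPPORTED_FORMATS = {"png", "jpeg", "gif", "webp"}


def build_design_review_request(image_bytes: bytes, image_format: str = "png") -> Dict[str, Any]:
    """Build keyword arguments for bedrock-runtime `converse` pairing a UI image with instructions."""
    if image_format not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported image format: {image_format}")
    instruction = (
        "You are a QA engineer. List test cases that validate the layout, "
        "labels, and interactive elements visible in this UI design."
    )
    return {
        "modelId": CLAUDE_35_SONNET,
        "messages": [
            {
                "role": "user",
                "content": [
                    # Raw image bytes go directly in the request; boto3 handles encoding.
                    {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
                    {"text": instruction},
                ],
            }
        ],
        # Low temperature keeps generated test cases stable across runs.
        "inferenceConfig": {"maxTokens": 1024, "temperature": 0.0},
    }
```

The result would be passed as `boto3.client("bedrock-runtime").converse(**request)`, and the generated test cases read from `response["output"]["message"]["content"][0]["text"]`.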

UXtract

UXtract is QyrusAI’s agentic workflow that converts Figma prototypes into test scenarios and steps based on the flow of screens in the prototype.

Figma prototype graphs are used to create detailed test cases with step-by-step instructions. The graph is analyzed to understand the different flows and make sure transitions between elements are validated. Anthropic’s Claude 3 Opus is used to process these transitions to identify potential actions and interactions, and Anthropic’s Claude 3.5 Sonnet is used to generate detailed test steps and instructions based on the transitions and higher-level objectives. This layered approach makes sure that UXtract captures both the functional accuracy of each flow and the granularity needed for effective testing.
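Analyzing a prototype graph for its flows can be pictured as enumerating paths from a start screen until no new screen is reachable. The sketch below assumes the Figma prototype has already been reduced to a hypothetical adjacency map of screen names to `(trigger, destination)` transitions; it is neither Figma’s API nor UXtract’s code. Each enumerated path is the kind of transition sequence a model could then expand into detailed test steps.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical shape: screen name -> list of (trigger, destination screen) transitions.
Transitions = Dict[str, List[Tuple[str, str]]]
Step = Tuple[str, str, str]  # (source screen, trigger, destination screen)


def enumerate_flows(graph: Transitions, start: str) -> List[List[Step]]:
    """Return every loop-free flow from `start` until no unvisited screen is reachable."""
    flows: List[List[Step]] = []

    def walk(screen: str, path: List[Step], visited: Set[str]) -> None:
        # Ignore transitions back to screens already on this path (e.g. "Back" buttons).
        next_steps = [(t, d) for t, d in graph.get(screen, []) if d not in visited]
        if not next_steps:
            flows.append(list(path))  # End of a flow: record a copy of the path.
            return
        for trigger, dest in next_steps:
            path.append((screen, trigger, dest))
            visited.add(dest)
            walk(dest, path, visited)
            visited.remove(dest)  # Backtrack so sibling branches can revisit this screen.
            path.pop()

    walk(start, [], {start})
    return flows
```

Skipping already-visited screens keeps the search finite even when prototypes contain loops, at the cost of not generating flows that deliberately revisit a screen.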

The following diagram illustrates how UXtract uses Amazon ECS tasks, connected through an Application Load Balancer, along with Amazon Bedrock models and Amazon S3 storage, to analyze Figma prototypes and create detailed, step-by-step test cases.

API Builder

API Builder creates virtualized APIs for early frontend testing by using various LLMs from Amazon Bedrock. These models interpret API specifications and generate accurate mock responses, facilitating effective testing before full backend implementation.
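As a rough illustration of what a mock response generator has to get right, the sketch below walks a simplified, OpenAPI-style JSON schema and returns placeholder values of the correct type. This deterministic stand-in is an assumption for illustration only: API Builder uses LLMs to produce realistic responses, whereas this code shows only the shape-matching part of the problem.

```python
from typing import Any, Dict

# Placeholder values per JSON schema primitive type.
_DEFAULTS = {"string": "example", "integer": 0, "number": 0.0, "boolean": False}


def mock_from_schema(schema: Dict[str, Any]) -> Any:
    """Build a placeholder value that conforms to a simplified JSON schema."""
    if "example" in schema:
        # Prefer an explicit example from the API spec when one is provided.
        return schema["example"]
    if "enum" in schema:
        return schema["enum"][0]
    kind = schema.get("type", "object")
    if kind == "object":
        props = schema.get("properties", {})
        return {name: mock_from_schema(sub) for name, sub in props.items()}
    if kind == "array":
        # One representative item is enough for a frontend to render a list.
        return [mock_from_schema(schema.get("items", {}))]
    return _DEFAULTS.get(kind)
```

An LLM-backed generator would replace the fixed placeholders with plausible, context-aware values while still respecting the same schema.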

The following diagram illustrates how API Builder uses Amazon ECS tasks, connected through an Application Load Balancer, along with Amazon Bedrock models and Amazon S3 storage, to create a virtualized, highly scalable microservice with dynamic data provisioning using Amazon Elastic File System (Amazon EFS) on AWS Lambda compute.

QyrusAI offers a range of additional agents that further enhance the testing process:

- Echo – Echo generates synthetic test data using a blend of Anthropic’s Claude 3 Sonnet, Mixtral 8x7B Instruct, and Meta’s Llama 70B to provide comprehensive testing coverage.

- Rover and TestPilot – These multi-agent frameworks are designed for exploratory and objective-based testing, respectively. They use a combination of LLMs, VLMs, and embedding models from Amazon Bedrock to uncover and address issues effectively.

- Healer – Healer tackles common test failures caused by locator issues by analyzing test scripts and their current state with various LLMs and VLMs to suggest accurate fixes.
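A simplified picture of locator healing: when a recorded locator no longer matches, compare the element’s recorded attributes with the elements currently on the page and suggest the closest candidate. This sketch swaps Healer’s LLM and VLM analysis for `difflib` string similarity from the Python standard library, and the attribute dictionaries are a hypothetical representation of page elements; it only shows the shape of the problem.

```python
from difflib import SequenceMatcher
from typing import Dict, List, Optional

# Hypothetical element representation: attribute name -> value, e.g. {"id": ..., "text": ...}.
Element = Dict[str, str]


def attribute_similarity(recorded: Element, candidate: Element) -> float:
    """Average string similarity over the attributes the recorded element had."""
    if not recorded:
        return 0.0
    scores = [
        SequenceMatcher(None, value, candidate.get(name, "")).ratio()
        for name, value in recorded.items()
    ]
    return sum(scores) / len(scores)


def suggest_locator(recorded: Element, page: List[Element], threshold: float = 0.6) -> Optional[Element]:
    """Return the on-page element most similar to the recorded one, or None below threshold."""
    best = max(page, key=lambda el: attribute_similarity(recorded, el), default=None)
    if best is None or attribute_similarity(recorded, best) < threshold:
        return None  # No confident match: better to report the failure than guess.
    return best
```

The threshold is what keeps a healing step from silently pointing a test at the wrong element; a model-based approach can also weigh visual position and surrounding context, which string similarity cannot.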

These agents, powered by Amazon Bedrock, collaborate to deliver a robust, AI-driven shift-left testing strategy throughout the SDLC.

QyrusAI and Amazon Bedrock

At the core of QyrusAI’s integration with Amazon Bedrock is our custom-developed qai package, which builds upon aiobotocore, aioboto3, and boto3. This unified interface enables our AI agents to seamlessly access the diverse array of LLMs, VLMs, and embedding models available on Amazon Bedrock. The qai package is essential to our AI-powered testing ecosystem, offering several key benefits:

- Consistent access – The package standardizes interactions with various models on Amazon Bedrock, providing uniformity across our suite of testing agents.

- DRY (don’t repeat yourself) principle – By centralizing Amazon Bedrock interaction logic, we’ve minimized code duplication and enhanced system maintainability, reducing the likelihood of errors.

- Seamless updates – As Amazon Bedrock evolves and introduces new models or features, updating the qai package allows us to quickly integrate these advancements without altering each agent individually.

- Specialized classes – The package includes distinct class objects for different model types (LLMs and VLMs) and families, optimizing interactions based on model requirements.

- Out-of-the-box features – In addition to standard and streaming completions, the qai package offers built-in support for multiple and parallel function calling, providing a comprehensive set of capabilities.
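Because qai is Qyrus’s custom package, the following is only a hypothetical sketch of the pattern described above: one base class that wraps a `bedrock-runtime` client, with thin subclasses per model family selected by model ID prefix. It uses the Amazon Bedrock Converse API; the class names, the registry, and the family-specific tweak are assumptions. The client is passed in so the pattern can be exercised without AWS credentials.

```python
from typing import Any, Dict, Type


class BedrockChat:
    """Base wrapper around a bedrock-runtime client using the Converse API."""

    def __init__(self, client: Any, model_id: str) -> None:
        self.client = client
        self.model_id = model_id

    def system_prompt(self, base: str) -> str:
        # Extension point: subclasses adapt instructions to a model family's conventions.
        return base

    def complete(self, prompt: str, system: str = "You are a helpful QA assistant.") -> str:
        response = self.client.converse(
            modelId=self.model_id,
            system=[{"text": self.system_prompt(system)}],
            messages=[{"role": "user", "content": [{"text": prompt}]}],
            inferenceConfig={"maxTokens": 1024, "temperature": 0.2},
        )
        blocks = response["output"]["message"]["content"]
        # Concatenate text blocks; non-text blocks (e.g. tool calls) are ignored here.
        return "".join(block.get("text", "") for block in blocks)


class ClaudeChat(BedrockChat):
    pass


class LlamaChat(BedrockChat):
    def system_prompt(self, base: str) -> str:
        # Hypothetical family-specific tweak, shown only to illustrate the extension point.
        return base + " Answer concisely."


# Provider prefix -> wrapper class. Cross-Region inference profile IDs (e.g. "us.anthropic...")
# would fall through to the base class in this simplified lookup.
_REGISTRY: Dict[str, Type[BedrockChat]] = {
    "anthropic.": ClaudeChat,
    "meta.": LlamaChat,
}


def chat_for(client: Any, model_id: str) -> BedrockChat:
    """Pick the wrapper class for a model ID by its provider prefix."""
    for prefix, cls in _REGISTRY.items():
        if model_id.startswith(prefix):
            return cls(client, model_id)
    return BedrockChat(client, model_id)
```

In real use, `client` would be `boto3.client("bedrock-runtime")`; an async variant could follow the same structure on top of aioboto3.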

Function calling and JSON mode were critical requirements for our AI workflows and agents. To maximize compatibility across the diverse array of models available on Amazon Bedrock, we implemented consistent interfaces for these features in our qai package. Because prompts for generating structured data can differ among LLMs and VLMs, we created specialized classes for various models and model families to provide consistent function calling and JSON mode capabilities. This approach provides a unified interface across the agents, streamlining interactions and enhancing overall efficiency.
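One common way to get consistent structured output on Amazon Bedrock is to define a single tool whose input schema is the desired JSON shape and force the model to call it through the Converse API’s `toolConfig`. The sketch below shows that general technique; it is not necessarily how qai implements JSON mode, and the `emit_test_cases` tool name and schema are hypothetical. Forcing a specific tool with `toolChoice` is not supported by every model family, so check the Amazon Bedrock documentation for the models you use.

```python
from typing import Any, Dict

# Hypothetical schema for a list of generated test cases.
TEST_CASE_SCHEMA: Dict[str, Any] = {
    "type": "object",
    "properties": {
        "test_cases": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "title": {"type": "string"},
                    "steps": {"type": "array", "items": {"type": "string"}},
                    "expected_result": {"type": "string"},
                },
                "required": ["title", "steps", "expected_result"],
            },
        }
    },
    "required": ["test_cases"],
}


def json_mode_tool_config(name: str, schema: Dict[str, Any]) -> Dict[str, Any]:
    """Build a Converse `toolConfig` that forces the model to answer through one tool."""
    return {
        "tools": [
            {
                "toolSpec": {
                    "name": name,
                    "description": "Return the answer as structured JSON.",
                    "inputSchema": {"json": schema},
                }
            }
        ],
        # Forcing this tool means the reply arrives as tool input matching the schema.
        "toolChoice": {"tool": {"name": name}},
    }


def extract_tool_input(response: Dict[str, Any], name: str) -> Dict[str, Any]:
    """Pull the JSON arguments of the named tool call out of a Converse response."""
    for block in response["output"]["message"]["content"]:
        tool_use = block.get("toolUse")
        if tool_use and tool_use["name"] == name:
            return tool_use["input"]
    raise ValueError(f"model did not call tool {name!r}")
```

A call would look like `client.converse(modelId=..., messages=..., toolConfig=json_mode_tool_config("emit_test_cases", TEST_CASE_SCHEMA))`, followed by `extract_tool_input(response, "emit_test_cases")["test_cases"]`.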

The following code is a simplified overview of how we use the qai package to interact with LLMs and VLMs on Amazon Bedrock:

The shift-left testing paradigm

Shift-left testing allows teams to catch issues sooner and reduce risk. Here’s how QyrusAI agents facilitate the shift-left approach:

- Requirement analysis – TestGenerator generates initial test cases directly from the requirements, setting a strong foundation for quality from the start.

- Design – VisionNova and UXtract convert Figma designs and prototypes into detailed test cases and functional steps.

- Pre-implementation – This includes the following features:

- API Builder creates virtualized APIs, enabling early frontend testing before the backend is fully developed.

- Echo generates synthetic test data, allowing comprehensive testing without real data dependencies.

- Implementation – Teams use the pre-generated test cases and virtualized APIs during development, providing continuous quality checks.

- Testing – This includes the following features:

- Rover, a multi-agent system, autonomously explores the application to uncover unexpected issues.

- TestPilot conducts objective-based testing, making sure the application meets its intended goals.

- Maintenance – QyrusAI supports ongoing regression testing with advanced test management, version control, and reporting features, helping maintain software quality over the long term.

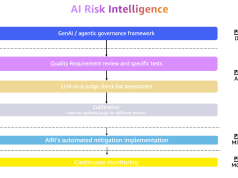

The following diagram visually represents how QyrusAI agents integrate throughout the SDLC, from requirement analysis to maintenance, enabling a shift-left testing approach that makes sure issues are caught early and quality is maintained continuously.

QyrusAI’s integrated approach makes sure that testing is proactive, continuous, and seamlessly aligned with every phase of the SDLC. With this approach, teams can:

- Detect potential issues earlier in the process

- Lower the cost of fixing bugs

- Enhance overall software quality

- Accelerate development timelines

This shift-left strategy, powered by QyrusAI and Amazon Bedrock, enables teams to deliver higher-quality software faster and more efficiently.

A typical shift-left testing workflow with QyrusAI

To make this more tangible, let’s walk through how QyrusAI and Amazon Bedrock can help create and refine test cases from a sample requirements document:

- A user uploads a sample requirements document.

- TestGenerator, powered by Meta’s Llama 3.1, processes the document and generates a list of high-level test cases.

- Anthropic’s Claude 3.5 Sonnet refines these test cases to make sure they cover key business rules.

- VisionNova and UXtract use design documents from tools like Figma to generate step-by-step UI tests, validating key user journeys.

- API Builder virtualizes APIs, allowing frontend developers to begin testing the UI with mock responses before the backend is ready.
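The generate-then-refine part of this workflow (the second and third steps) can be sketched as a small pipeline in which one model drafts test cases and a second model scores them. In this hypothetical sketch both models are plain callables, so a real implementation could plug in Amazon Bedrock calls (for example, a Llama model as generator and Claude 3.5 Sonnet as judge). The 1 to 5 scoring scale and the `min_score` threshold are assumptions, not QyrusAI’s actual rubric.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ScoredTestCase:
    title: str
    score: int  # Hypothetical 1-5 relevance/risk score assigned by the judge model


def generate_and_rank(
    requirements: str,
    generate: Callable[[str], List[str]],
    judge: Callable[[str, str], int],
    min_score: int = 3,
) -> List[ScoredTestCase]:
    """Draft test cases with one model, score each with another, keep and rank the strong ones."""
    drafts = generate(requirements)
    # Drop blank drafts and exact duplicates while preserving generation order.
    unique = list(dict.fromkeys(d.strip() for d in drafts if d.strip()))
    scored = [ScoredTestCase(title, judge(requirements, title)) for title in unique]
    kept = [case for case in scored if case.score >= min_score]
    # Highest-priority cases first; ties keep their generation order (sort is stable).
    return sorted(kept, key=lambda case: case.score, reverse=True)
```

Keeping the generator and judge as separate callables makes it easy to swap either model without touching the pipeline, which mirrors the separation of roles described earlier for TestGenerator.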

By following these steps, teams can get ahead of potential issues, creating a safety net that improves both the quality and speed of software development.

The impact of AI-driven shift-left testing

Our data—collected from early adopters of QyrusAI—demonstrates the significant benefits of our AI-driven shift-left approach:

- 80% reduction in defect leakage – Finding and fixing defects earlier results in fewer bugs reaching production

- 20% reduction in UAT effort – Comprehensive testing early on means a more stable product reaching the user acceptance testing (UAT) phase

- 36% faster time to market – Early defect detection, reduced rework, and more efficient testing lead to faster delivery

These metrics have been gathered through a combination of internal testing and pilot programs with select customers. The results consistently show that incorporating AI early in the SDLC can lead to a significant reduction in defects, development costs, and time to market.

Conclusion

Shift-left testing, powered by QyrusAI and Amazon Bedrock, is set to revolutionize the software development landscape. By integrating AI-driven testing across the entire SDLC—from requirements analysis to maintenance—QyrusAI helps teams:

- Detect and fix issues early – Significantly cut development costs by identifying and resolving problems sooner

- Enhance software quality – Achieve higher quality through thorough, AI-powered testing

- Speed up development – Accelerate development cycles without sacrificing quality

- Adapt to changes – Quickly adjust to evolving requirements and application structures

Amazon Bedrock provides the essential foundation with its advanced language and vision models, offering unparalleled flexibility and capability in software testing. This integration, along with seamless connectivity to other AWS services, enhances scalability, security, and cost-effectiveness.

As the software industry advances, the collaboration between QyrusAI and Amazon Bedrock positions teams at the cutting edge of AI-driven quality assurance. By adopting this shift-left, AI-powered approach, organizations can not only keep pace with today’s fast-moving digital world, but also set new benchmarks in software quality and development efficiency.

If you’re looking to revolutionize your software testing processes, we invite you to reach out to our team and learn more about QyrusAI. Let’s work together to build better software, faster.

To see how QyrusAI can enhance your development workflow, get in touch today at support@qyrus.com. Let’s redefine your software quality with AI-driven shift-left testing.

About the Authors

Ameet Deshpande is Head of Engineering at Qyrus and leads innovation in AI-driven, codeless software testing solutions. With expertise in quality engineering, cloud platforms, and SaaS, he blends technical acumen with strategic leadership. Ameet has spearheaded large-scale transformation programs and consulting initiatives for global clients, including top financial institutions. An electronics and communication engineer specializing in embedded systems, he brings a strong technical foundation to his leadership in delivering transformative solutions.

Vatsal Saglani is a Data Science and Generative AI Lead at Qyrus, where he builds generative AI-powered test automation tools and services using multi-agent frameworks, large language models, and vision-language models. With a focus on fine-tuning advanced AI systems, Vatsal accelerates software development by empowering teams to shift testing left, enhancing both efficiency and software quality.

Siddan Korbu is a Customer Delivery Architect with AWS. He works with enterprise customers to help them build AI/ML and generative AI solutions using AWS services.